When it comes to the music production industry, machine learning and AI (artificial intelligence) are undoubtedly taking over.

As a producer, knowing the key differences between machine learning and AI can seriously speed up your workflow, enhance your creativity, and level up your mixes without burning out.

It’ll help you discover legendary new sounds, automate repetitive/boring tasks, and solve problems you didn’t even realize you had.

That’s exactly why I’m breaking down everything you need to know about machine learning vs AI, including:

- AI vs machine learning ✓

- How smart EQs and mix tools actually “learn” from your sessions ✓

- Plugins that mimic human intelligence to perform complex tasks ✓

- Tools that identify patterns in your melodies & basslines ✓

- How to use AI-generated song structures ✓

- Training custom machine learning models to sound like you ✓

- AI assistants that give you unbiased feedback ✓

- How AI and machine learning are closely connected yet very different ✓

- Dangers of over-relying on AI and machine learning ✓

- How it can help you fast-track manual processes/specific tasks ✓

- Advanced tips, tricks, and techniques ✓

- Much more about machine learning vs AI ✓

By knowing everything about machine learning vs AI, you’ll be able to completely rethink how you produce, finish more tracks, and sharpen your skills.

Plus, you’ll know exactly how to create, tweak, and control your sound with AI-enhanced precision, and unlock time-saving techniques that feel almost futuristic.

This way, you can learn how to take full advantage of artificial intelligence and machine learning like an absolute boss.

Table of Contents

- Machine Learning vs AI (Key Differences)

- AI (Artificial Intelligence) in Music Production

- Machine Learning in Music Production: How It Works Differently

- The Hybrid Zone: Combining AI and Machine Learning

- The Absolute BEST Artificial Intelligence and Machine Learning Plugins

- Latency, Glitches & AI Fails: Real-World Limitations

- Advanced AI + ML Techniques for Modern Music Producers

- Final Thoughts

Machine Learning vs AI (Key Differences)

A lot of people use the terms interchangeably, and while they’re closely related, machine learning and AI are certainly not the same, especially in music production.

Artificial intelligence (AI) is a much broader concept.

It refers to any computer system built to simulate human intelligence, like reasoning, problem solving, or understanding musical elements like groove or swing.

Machine learning, on the other hand, is a specific branch of AI, where a computer system learns from data over time to perform tasks more effectively.

Think of it like an intern who studies your session files, figures out your style, and slowly becomes a clone of how you process vocals or mix drums.

For example, an AI plugin might mimic a human mastering engineer, making tonal, spatial, and level-based decisions on its own.

If it does that with deep learning and a neural network, that’s machine learning under the hood: the model had to be trained on thousands of reference tracks and user interactions before it could make those same decisions.

- AI encompasses everything from virtual assistants to speech recognition to natural language understanding.

- Machine learning is more focused on math-based, pattern-driven systems that can analyze data and adjust over time.

Think of an adaptive compressor that tightens up your kick after watching your moves for a few sessions.

So when we talk about machine learning vs AI in the studio, we’re talking about whether a plugin is following rules that were programmed (AI), or learning from experience and your workflow directly (ML).
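To make that rules-vs-learning split concrete, here’s a minimal Python sketch. Everything in it is hypothetical and for illustration only: the -6 dBFS threshold, the 2 dB cut, and the names `rule_based_kick_cut` and `LearnedKickCut` don’t come from any real plugin, and averaging your past moves is about the simplest form of “learning” possible.

```python
from statistics import mean


def rule_based_kick_cut(kick_peak_dbfs: float) -> float:
    """Rule-based: always cut 2 dB when the kick peaks above -6 dBFS (hypothetical rule)."""
    return -2.0 if kick_peak_dbfs > -6.0 else 0.0


class LearnedKickCut:
    """Learning-based: suggests whatever cut you have actually been making (hypothetical)."""

    def __init__(self) -> None:
        self.history: list[float] = []

    def observe(self, cut_you_made_db: float) -> None:
        # Each manual move in a session becomes one more training example.
        self.history.append(cut_you_made_db)

    def suggest(self) -> float:
        # No data yet means nothing learned, so suggest no change.
        return mean(self.history) if self.history else 0.0


# The rule never changes; the learner drifts toward your habits.
learner = LearnedKickCut()
for cut in (-3.0, -3.5, -2.5):
    learner.observe(cut)
print(rule_based_kick_cut(-3.0), learner.suggest())  # -2.0 -3.0
```

The rule gives the same answer for every producer, while the learner’s suggestion depends entirely on what it has watched you do.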

Don’t worry if it sounds a little confusing right now. I’ll break down everything you need to know about machine learning vs AI as we go.

AI (Artificial Intelligence) in Music Production

Let’s kick things off by talking about what artificial intelligence and machine learning can already do. You’re probably already using artificial intelligence (AI) without realizing it, from plugins with predictive, intelligent behavior to song structure builders and even vocal FX. And the gap between machine learning and AI is getting narrower every year. So, let’s break it down so you can start incorporating AI into your workflow in no time.

From Melody Generators to Full Song Structures

AI tools like AIVA, MuseNet, and Orb Producer Suite 3 can generate entire chord progressions, melodies, and transitions based on genre, mood, and BPM.

You can literally select a key, tell it you want a dark trap vibe at 140 BPM, and it’ll spit out an 8-bar loop with harmonic movement that sounds almost human.

And the best part is you can regenerate endlessly until one hits.

Some producers even use AI to build out full 3-minute song structures, including intros, drops, bridges, and outros, all mapped to energy curves and dynamic shifts.

AI Tools That Analyze Reference Tracks & Rebuild Their DNA

AI plugins like LANDR Mastering, iZotope Ozone 11, and HookTheory AI can now dissect your favorite track (say, something by Travis Scott or Fred Again..) and break down its:

- Frequency balance

- Stereo image

- Dynamics

- Arrangement

Then they rebuild that DNA into your own track with suggested changes to your low end, vocal brightness, or even drop length.

This is machine learning vs AI in full force: AI handles the analysis and suggestions, while machine learning (ML) fine-tunes those decisions over time as it learns your taste.

AI for Vocal Processing, Pitch Correction & Harmonization

Plugins like Sound Doctor, Waves Tune Real-Time, Antares Auto-Tune Pro X, and other intelligent systems now use AI to correct pitch based on:

- Emotion

- Phrasing

- Natural transitions

In other words, they’re not just snapping notes to a grid.

Some even use deep learning to mimic the human brain’s response to phrasing, letting you add harmonies that feel emotionally connected to the lead vocal.

It’s almost like singing with yourself, if that makes sense.

Others use natural language processing and speech recognition to detect where syllables begin and end, applying de-essers and compressors only when needed.

So, instead of manually riding volume or EQ, these tools now perform complex tasks like dynamic formant shifting or harmony voicing automatically.

By incorporating machine learning/artificial intelligence into your vocal chain, you can keep things clean, expressive, and 100% in tune, without sounding robotic.

Machine Learning in Music Production: How It Works Differently

Now that we’ve broken down what AI can do, let’s talk about where machine learning fits in and how it actually works behind the scenes. The truth is, when it comes to machine learning vs AI, the biggest difference is that ML needs training: data, feedback, and time. It improves by analyzing your behavior, tracking your changes, and learning from patterns, instead of just spitting out pre-coded responses like basic AI tools.

ML-Powered Smart EQs, Limiters & Mix Assistants

One of the most common uses of machine learning in music production right now is in smart mixing tools, which are making major waves.

Plugins like iZotope Neutron 5, Gullfoss, and Sonible smart:EQ 4 use machine learning models to understand how frequencies interact across your entire mix.

For example, smart:EQ 4 uses an adaptive neural network that analyzes unstructured data from your audio in real time.

It can tell when your lead vocal is clashing with your pad’s midrange and suggest, say, a 2.4dB dip around 1.2kHz.

That’s something traditional, rule-based AI wouldn’t even attempt without machine learning (supervised or unsupervised) baked into it.
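Here’s a rough Python sketch of the kind of check described above: look for frequency bands where both the vocal and the pad carry real energy, and suggest a capped cut on the pad. This is a hypothetical heuristic, not how smart:EQ 4 works internally. It assumes you already have average band levels in dBFS (a real tool would measure these from the audio with spectral analysis), and the floor, margin, and cap values are made up for illustration.

```python
def suggest_masking_dips(vocal_db, pad_db, floor_db=-40.0, margin_db=6.0, max_dip_db=3.0):
    """Suggest pad cuts (dB) in bands where the pad crowds the vocal (hypothetical heuristic).

    vocal_db / pad_db map a band's centre frequency (Hz) to its average level (dBFS).
    We want the pad to sit at least margin_db below the vocal in each shared band.
    """
    dips = {}
    for freq, vocal in vocal_db.items():
        pad = pad_db.get(freq)
        if pad is None or vocal < floor_db or pad < floor_db:
            continue  # a band that's near-silent in either part can't mask much
        # How far the pad intrudes into the space we want below the vocal.
        intrusion = pad - (vocal - margin_db)
        if intrusion > 0:
            # Cap the cut so the suggestion stays a gentle move.
            dips[freq] = -round(min(intrusion, max_dip_db), 1)
    return dips


vocal = {250: -30.0, 1200: -18.0, 5000: -22.0}
pad = {250: -26.0, 1200: -21.6, 5000: -35.0}
dips = suggest_masking_dips(vocal, pad)
print(dips)  # {250: -3.0, 1200: -2.4}
```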

And when you load a mix assistant like Neutron 5’s Mix Assistant, it doesn’t just apply presets. It uses past mixes, data input, and genre-specific targets to perform complex tasks like:

- Dynamic EQ notching

- Stereo image optimization

- Spectral balancing

These are the kinds of tasks that would normally take a seasoned engineer at least 45 minutes per track (crazy, right?).

So when we talk about machine learning vs AI in this case, the ML model is actually learning from massive datasets and identifying patterns in your workflow.

AI alone, on the other hand, would just stick to its rule-based logic.

Honestly, when it comes to machine learning vs AI, this is one of the main things that you need to know.

How ML Learns Your Workflow & Adapts Over Time

Unlike fixed AI systems, machine learning models can evolve based on how you work as an individual, which might be insanely different from another producer.

That means if you tend to push your 808s around -4 LUFS and dip your hi-hats around 6.5kHz, a smart ML tool will eventually learn that and recommend similar moves in future sessions.

For example, Sonible smart:limit can analyze your loudness preferences across different tracks and adapt its ceiling and release times to match.

If you consistently finish your trap mixes around -8 LUFS integrated with a true peak of -0.3dB, it starts prioritizing those targets without you telling it.

That’s the beauty of AI and machine learning when they work together: the AI offers the base functionality, and the ML component personalizes it to match your vibe.

This is what separates a helpful tool from a real assistant.

And when producers like yourself compare machine learning vs AI, this adaptive behavior is a key difference worth paying attention to, big time.
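As a rough illustration of that kind of adaptation (the smart:limit example above, where your trap mixes keep landing around -8 LUFS integrated and -0.3 dBTP), here’s a Python sketch that learns your finishing targets with an exponential moving average. The class name, the `alpha` smoothing value, and the choice of a moving average are all assumptions for illustration; this isn’t Sonible’s actual algorithm.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LoudnessProfile:
    """Running estimate of where you usually finish a mix (hypothetical sketch)."""

    alpha: float = 0.3                 # how strongly each new session pulls the estimate
    lufs: Optional[float] = None       # learned integrated loudness target (LUFS)
    true_peak: Optional[float] = None  # learned true-peak ceiling (dBTP)

    def observe(self, session_lufs: float, session_true_peak: float) -> None:
        if self.lufs is None or self.true_peak is None:
            # The first finished mix becomes the starting estimate.
            self.lufs, self.true_peak = session_lufs, session_true_peak
            return
        # Exponential moving average: recent sessions count more than old ones.
        self.lufs += self.alpha * (session_lufs - self.lufs)
        self.true_peak += self.alpha * (session_true_peak - self.true_peak)


profile = LoudnessProfile()
for lufs, tp in [(-10.0, -1.0), (-8.0, -0.3), (-8.0, -0.3), (-8.0, -0.3)]:
    profile.observe(lufs, tp)
print(round(profile.lufs, 2), round(profile.true_peak, 2))  # -8.69 -0.54
```

A higher `alpha` adapts faster to a change in your habits but also reacts more to one-off sessions.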

The Hybrid Zone: Combining AI and Machine Learning

Some of the most powerful tools available right now aren’t using just one or the other.

They’re combining both artificial intelligence and machine learning (ML) to offer next-level assistance that evolves while you produce.

Take iZotope Neutron 5, for example…

It uses AI to make initial mix decisions, while its machine learning algorithms adjust those suggestions in real time based on your specific:

- EQ cuts

- Compressor ratios

- Loudness preferences

It’s all about simulating human intelligence and making it feel like you’re working with another experienced ear in the room.

Sound Doctor uses an AI-powered effects generator that pairs with ML-based style formulas to deliver fully customized chains that adapt to your genre, BPM, and specific sound source.

It can instantly load a multi-FX chain with saturation at 45%, tremolo set to 5.5Hz, and a stereo widener capped at 40%, all based on the mood you choose.

When we look at machine learning vs AI in these hybrid plugins, ML gives the plugin context (learning your taste, genre, and habits) while AI provides the immediate actions that save you time and brainpower.

The Absolute BEST Artificial Intelligence and Machine Learning Plugins

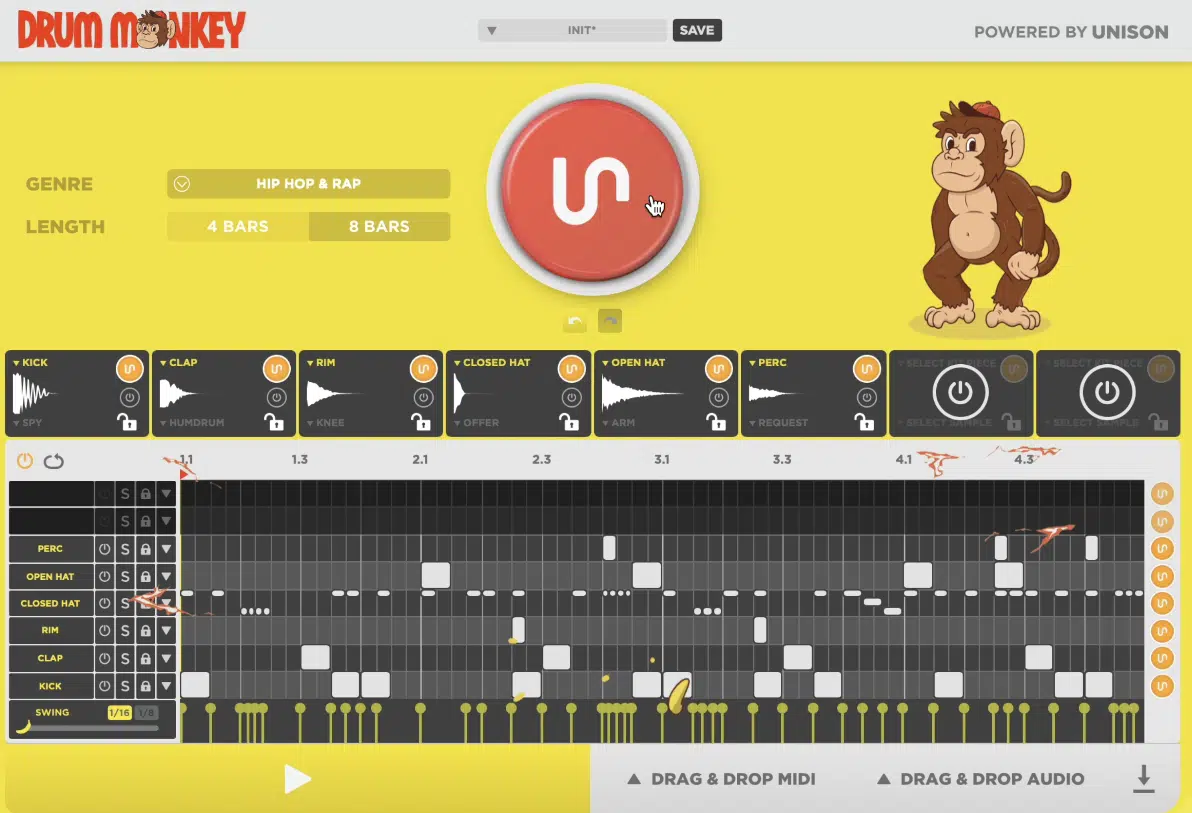

Drum Monkey is an AI-powered drum generator that uses trillions of rhythmic variations with built-in genre targeting.

So, when you lock in your tempo (say 145 BPM for trap), it generates full 8-bar loops complete with hi-hat swing, kick/snare variation, and mapped velocity for every hit.

And of course, you can tweak each loop using ML-based “Style Profiles.”
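For a feel of what “hi-hat swing” and “mapped velocity” mean at the data level, here’s a small Python sketch that lays out 8 bars of 16th-note hi-hats at 145 BPM with swing and a simple velocity accent pattern. The function name, the 58% swing amount, and the velocity values are assumptions for illustration; it doesn’t reproduce Drum Monkey’s generator, which also varies kicks, snares, and patterns.

```python
def swung_hats(bpm: float, bars: int = 8, swing: float = 0.58):
    """Return (onset_seconds, velocity) for 16th-note hi-hats in 4/4 (hypothetical helper).

    swing is where the second 16th of each pair lands, as a fraction of the pair:
    0.50 is straight, higher values push the off-beat 16ths later.
    """
    sixteenth = 60.0 / bpm / 4   # one beat split into four 16ths
    pair = 2 * sixteenth         # swing is applied to each pair of 16ths
    hits = []
    for i in range(bars * 16):
        onset = (i // 2) * pair + (swing * pair if i % 2 else 0.0)
        # Simple accent map: beats loudest, 8th-note off-beats medium, other 16ths softest.
        velocity = 110 if i % 4 == 0 else (90 if i % 2 == 0 else 70)
        hits.append((onset, velocity))
    return hits


hats = swung_hats(145)
print(len(hats), hats[:3])
```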

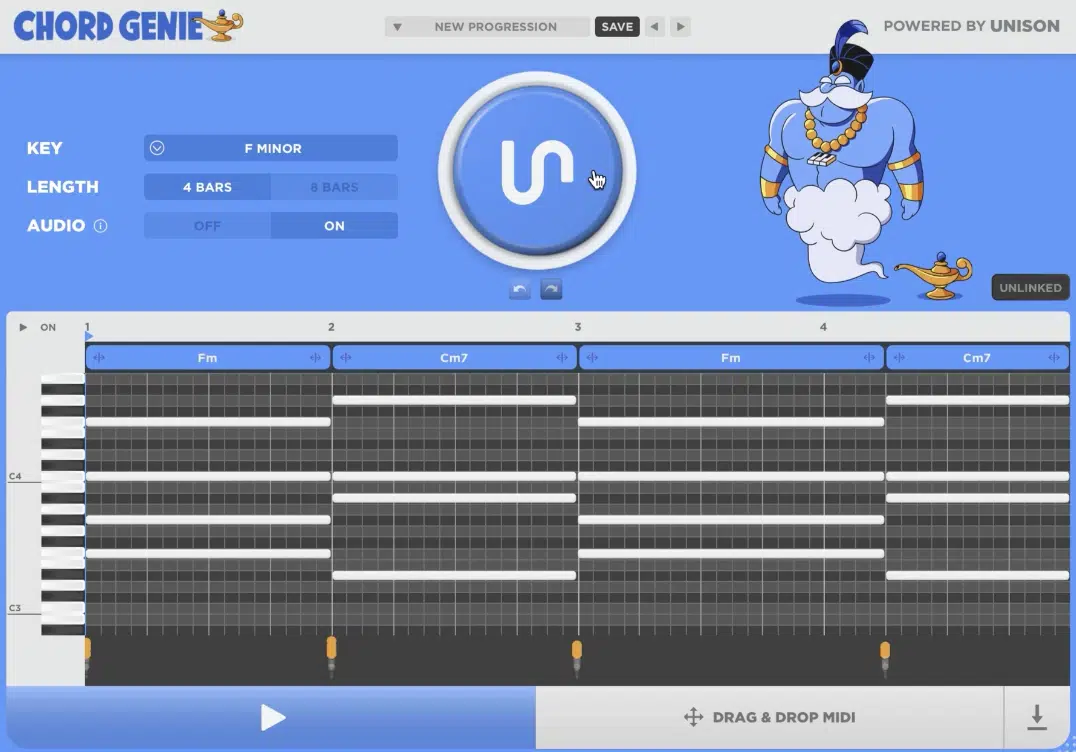

Chord Genie lets you select a key, scale, and vibe (for example, D, harmonic minor, and moody) and instantly gives you 4- or 8-bar chord progressions using AI and ML models that replicate the human reasoning behind harmonic movement.

You can also adjust voice leading, chord tension, and rhythmic timing with a click.

808 Machine is an AI-powered 808 creator that lets you instantly generate epic, genre-specific 808s by choosing a vibe like “Aggressive Trap” or “Dark R&B.”

For example, you might get a punchy sub tuned to C#1 with a 360ms tail, hard clipper at +6dB, and multiband saturation dialed in at 45%.

It’s all built using big data from industry-level basslines and frequency-mapped genre characteristics.
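If you want to sanity-check a generated 808’s tuning, the standard equal-temperament formula converts a note name to a frequency. Here’s a short Python version, assuming A4 = 440 Hz and the common convention where middle C is C4 (MIDI note 60). By that convention, the C#1 sub from the example sits at roughly 34.65 Hz.

```python
NOTE_OFFSETS = {"C": 0, "C#": 1, "D": 2, "D#": 3, "E": 4, "F": 5,
                "F#": 6, "G": 7, "G#": 8, "A": 9, "A#": 10, "B": 11}


def note_to_hz(name: str, octave: int, a4_hz: float = 440.0) -> float:
    """Equal-temperament frequency of a note, with middle C = C4 = MIDI note 60."""
    midi = 12 * (octave + 1) + NOTE_OFFSETS[name]
    # Each semitone is a factor of 2**(1/12); MIDI note 69 is A4.
    return a4_hz * 2 ** ((midi - 69) / 12)


print(round(note_to_hz("C#", 1), 2))  # 34.65
```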

These plugins combine natural language understanding (when you search or describe your vibe), pattern recognition (to predict what FX chains or progressions you’ll need), and AI systems trained to perform complex tasks just like a top-tier engineer.

That’s why when we talk about machine learning vs AI, this bundle shows how powerful the combo becomes when you’re looking for speed, accuracy, and real creativity on tap.

Download All The Best AI Plugins In The Game

Pro Tip: AI-Integrated DAWs (FL Studio 21, Ableton 12, Logic Pro)

FL Studio 21 now offers integrated AI tools that use pattern recognition and data analysis to:

- Recommend arrangement structures

- Auto-slice samples into new rhythms

- Adjust clip gain to match RMS levels across stems

For example, it might bring vocals to around -16 LUFS and keep drums peaking at -0.5 dBTP for maximum punch.
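Matching clip gain across stems boils down to measuring each stem’s level and applying the difference to a target. Here’s a minimal Python sketch using plain RMS in dBFS. Treat it as an approximation: true LUFS measurement (ITU-R BS.1770) adds K-weighting and gating, which this skips, and the function names are hypothetical rather than anything from FL Studio.

```python
import math
from typing import Sequence


def rms_dbfs(samples: Sequence[float]) -> float:
    """Unweighted RMS level of float samples in [-1.0, 1.0], in dBFS.

    With this convention a full-scale sine reads about -3 dBFS.
    """
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * math.log10(rms) if rms > 0 else float("-inf")


def clip_gain_to_target(samples: Sequence[float], target_dbfs: float) -> float:
    """Gain change in dB that moves this stem's RMS onto the target."""
    return target_dbfs - rms_dbfs(samples)


# A square wave at +/-0.5 amplitude is about -6 dBFS RMS,
# so reaching -16 dBFS needs roughly a 10 dB cut.
stem = [0.5, -0.5] * 1000
print(round(rms_dbfs(stem), 2), round(clip_gain_to_target(stem, -16.0), 2))
```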

Ableton Live 12 brings in-session predictive suggestions based on deep learning, including clip follow actions, MIDI note alterations based on groove consistency, and intelligent warp marker placement using computer vision trained on millions of professionally aligned stems.

Then you got Logic Pro’s AI system that uses ML-based behavior tracking…

So, if you consistently EQ your hi-hats around 8.5kHz with a Q of 1.7 and add a 5ms pre-delay on vocals, it will begin to suggest similar chains with a single click.

It’s the perfect example of machine learning vs AI working hand-in-hand.

Getting Unbiased Feedback from an AI “Second Ear”

When your ears are fried after mixing for 4+ hours, AI-powered analyzers step in and compare your current mix to industry-grade tracks.

They use mathematical models and training data, then recommend level, panning, and frequency tweaks without emotion or human error.

This kind of feedback, like noticing your vocals are consistently 1.5dB too hot or your 808 sub is masking kick punch between 55Hz–65Hz, gives you the equivalent of a second engineer on standby.

This makes the machine learning vs AI payoff crystal clear.
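Under the hood, that kind of “second ear” feedback is a comparison between your mix and a reference, measured the same way. Here’s a hypothetical Python sketch that reports only the differences bigger than a tolerance. The band names, levels, and 1 dB tolerance are made-up examples, and real analyzers measure these values from the audio rather than taking them as input.

```python
def second_ear_report(mix_db: dict, reference_db: dict, tolerance_db: float = 1.0) -> list:
    """Compare per-band levels (dB) of your mix against a reference track.

    Only differences larger than tolerance_db are reported, so small
    deviations don't clutter the feedback.
    """
    notes = []
    for band, ref_level in reference_db.items():
        if band not in mix_db:
            continue  # nothing to compare for this band
        diff = mix_db[band] - ref_level
        if abs(diff) > tolerance_db:
            direction = "too hot" if diff > 0 else "too quiet"
            notes.append(f"{band}: {abs(diff):.1f} dB {direction}")
    return notes


mix = {"vocals": -14.5, "808 sub (55-65 Hz)": -12.0, "air (10 kHz+)": -30.0}
reference = {"vocals": -16.0, "808 sub (55-65 Hz)": -14.0, "air (10 kHz+)": -30.5}
for note in second_ear_report(mix, reference):
    print(note)
```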

Pro Tip: Risks, Limitations & Things to Watch Out For with Machine Learning/Artificial Intelligence

One common issue is overprocessing because artificial intelligence (AI) or machine learning (ML) tools may recommend too many moves at once…

This can flatten dynamics and make your mix sterile if you’re not listening critically (like auto-EQ stacks that push three bands more than 4dB each).
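One way to catch that kind of overprocessing before it reaches your mix is a simple sanity check on the suggested moves. Here’s a hypothetical Python sketch that flags an auto-EQ suggestion when too many bands move by more than 4 dB, echoing the example above. The limit of two big moves is an assumption you’d tune by ear.

```python
def flag_overprocessing(eq_moves_db: dict, big_move_db: float = 4.0, max_big_moves: int = 2):
    """Return (flagged, big_moves) for a set of suggested EQ moves.

    eq_moves_db maps a band's centre frequency (Hz) to its suggested gain change (dB).
    Boosts and cuts both count as big moves.
    """
    big_moves = {hz: gain for hz, gain in eq_moves_db.items() if abs(gain) > big_move_db}
    return len(big_moves) > max_big_moves, big_moves


suggested = {80: 4.5, 400: -5.0, 3000: 4.2, 10000: 1.0}
flagged, big = flag_overprocessing(suggested)
print(flagged, big)  # True {80: 4.5, 400: -5.0, 3000: 4.2}
```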

Another thing to keep an eye on is session compatibility and plugin misreads.

For example, ML models can sometimes misinterpret a layered sound as a single source and suggest cuts that actually ruin the blend, especially when working with unstructured data or live-recorded loops.

Data Training Bias: Why Some Tools Don’t Understand Your Style

AI and ML tools are only as good as the training data they were built on. That’s a fact.

If that data didn’t include a lot of lo-fi, glitch-hop, or experimental trap tracks, and that’s what you’re producing, the plugin might totally misread your intentions.

Think of a “clean mix” algorithm applied to something that’s meant to sound raw and gritty. That would be a total disaster.

That’s why you might notice plugins like LANDR or Ozone occasionally boost highs that don’t need it or crush the low end of a sub-bass that’s meant to feel uneven.

They’re trained on what’s “popular” or “safe,” not necessarily what’s right for your sound, and that’s one of the most real limitations in the machine learning vs AI debate.

So always double-check what these tools suggest, especially when working in genres that intentionally break the rules (and remember, AI can mimic human intelligence, but it still doesn’t have your ear).

Latency, Glitches & AI Fails: Real-World Limitations

Even though artificial intelligence and machine learning have come a long way, there are still noticeable flaws in real-world sessions.

The #1 issue, in my opinion, is latency, which can spike up to 95ms on certain ML-powered stem separation tools like Lalal.ai when working with high-resolution files over 96kHz.
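Latency figures are easier to reason about once you convert them to samples, the unit plugin delay compensation typically works in. Here’s a quick Python sketch of the arithmetic: 95 ms at 96 kHz is 0.095 × 96,000 = 9,120 samples, twice the 4,560 samples the same delay takes at 48 kHz. The function name is just for illustration.

```python
def latency_in_samples(latency_ms: float, sample_rate_hz: int) -> int:
    """Convert a latency in milliseconds to a whole number of samples."""
    return round(latency_ms * sample_rate_hz / 1000)


for rate in (48_000, 96_000):
    print(rate, latency_in_samples(95, rate))
# 48000 4560
# 96000 9120
```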

Glitching is also a problem sometimes, particularly when an AI system misinterprets a transient or ambient tail.

For example, smart compressors might treat a reverb wash as a peak and trigger ducking, which can flatten your mix unintentionally.

And sometimes the tools just flat out fail, like AI mastering services that auto-boost sibilance or overly compress vocals, ignoring intentional dynamics…

It’s one of those times where the human brain still beats machine learning’s “best guess” if you look at the big picture.

PRO TIP: Always A/B your AI-enhanced version with a raw one, and never skip your own decision making, especially in genres where mimicking human intelligence can’t replace feeling what’s right.

Advanced AI + ML Techniques for Modern Music Producers

Now that we’ve covered everything you need to know about machine learning vs AI in the production world, let’s talk about some more advanced stuff. These techniques are where data science, human cognitive abilities, and smart plugins all come together to help us go from just producing to complete innovation.

#1. Training Custom ML Models on Your Own Tracks

You can feed your own MIDI files into a machine learning algorithm so it learns your melodic phrasing, chord tendencies, and timing habits.

For example, it can pick up your habit of resolving loops on the 3rd beat of bar 8 or your use of parallel minor voicings.

You can even set parameters like max variation, style adherence (0 to 100%), and output length, letting the ML model generate a brand-new 16-bar progression that mirrors your past five sessions, all trained from just 5–10 inputs.

This is where AI and ML leave basic automation behind and enter the world of predictive modeling and personalized problem solving.
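As a deliberately simple stand-in for that kind of model, here’s a first-order Markov chain in Python that learns which chord tends to follow which in your own loops, then generates a new 16-bar progression. It works on chord symbols rather than raw MIDI (extracting chords from MIDI would be a separate step), and `style_adherence` is a hypothetical 0–1 knob mirroring the 0 to 100% setting above: the probability of following one of your learned moves instead of picking any chord from your vocabulary at random.

```python
import random
from collections import defaultdict


def train_transitions(progressions):
    """Count chord-to-chord moves in your loops (a first-order Markov model).

    Each progression is treated as a loop, so its last chord leads back to its first.
    """
    counts = defaultdict(lambda: defaultdict(int))
    for prog in progressions:
        for i, chord in enumerate(prog):
            counts[chord][prog[(i + 1) % len(prog)]] += 1
    return counts


def generate_progression(counts, bars=16, style_adherence=0.8, seed=None):
    """Generate one chord per bar from the learned transition counts.

    style_adherence (0.0-1.0) is the chance of following a learned move;
    otherwise any chord from your vocabulary is chosen at random.
    """
    rng = random.Random(seed)
    vocab = sorted(counts)
    chord = rng.choice(vocab)
    progression = [chord]
    while len(progression) < bars:
        learned = counts[chord]
        if learned and rng.random() < style_adherence:
            # Weighted pick: moves you use often are more likely to be chosen.
            chords, weights = zip(*learned.items())
            chord = rng.choices(chords, weights=weights)[0]
        else:
            chord = rng.choice(vocab)
        progression.append(chord)
    return progression


my_loops = [["Am", "F", "C", "G"], ["Am", "Dm", "F", "E"]]
model = train_transitions(my_loops)
new_progression = generate_progression(model, bars=16, style_adherence=1.0, seed=7)
print(new_progression)
```

Lowering `style_adherence` trades your signature moves for more surprises, which is roughly the “max variation” idea in practice.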

#2. Using AI to Create Genre-Bending FX Chains

With plugins like Sound Doctor, you can create multi-layered FX chains by combining data input presets with hybrid generators…

For instance, setting saturation to 58%, then layering a phaser modulated at 0.2Hz and sidechaining the reverb tail using an ML-learned transient detection model.

This kind of chain can take a chill R&B loop and inject it with elements of dub techno, glitch hop, and future bass.

And it’s all driven by AI and machine learning logic trained to perform specific tasks across multiple genres.

You’re basically using machine learning vs AI as a toolkit for genre fusion: one handles the logic, the other learns your taste.

#3. AI for Adaptive Arrangement: Intelligent Song Structures

With tools like Orb Producer Suite, AI can scan your track’s tempo, energy curve, and spectral balance, then recommend entire structure shifts based on hit songs.

For example, it might suggest your drop hits at bar 17 instead of 33, based on models that track listener engagement dips.

Or it might even recommend shortening your intro by 8 bars if your frequency energy is too low in the first 20 seconds.
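The arithmetic behind those arrangement suggestions is worth seeing, since bar numbers hide how much time is really at stake. Here’s a small Python sketch assuming 4/4 at 140 BPM (the tempo is an assumption; the text doesn’t specify one): each bar lasts 4 × 60 / 140 ≈ 1.71 s, so a drop at bar 17 lands about 27.4 s in instead of about 54.9 s at bar 33, and trimming 8 bars from the intro saves about 13.7 s.

```python
def bar_start_seconds(bar: int, bpm: float, beats_per_bar: int = 4) -> float:
    """Time (seconds) at which a bar starts; bar 1 starts at 0 s."""
    return (bar - 1) * beats_per_bar * 60.0 / bpm


BPM = 140  # assumed tempo for this example
drop_at_17 = bar_start_seconds(17, BPM)
drop_at_33 = bar_start_seconds(33, BPM)
eight_bars = bar_start_seconds(9, BPM)  # length of 8 bars = start of bar 9
print(f"{drop_at_17:.1f} s vs {drop_at_33:.1f} s; 8 bars = {eight_bars:.1f} s")
```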

And all the different settings let you tweak repetition probability, phrase complexity, and tension-release balance.

This way, you can shape a structure that mimics what real listeners expect without sounding robotic.

It does this with a system that mimics human cognitive abilities, relying on pattern recognition and machine learning models trained on thousands of radio-ready arrangements.

Just remember, this isn’t only about speed or operational efficiency, the way it is with self-driving cars or fraud detection.

It’s about using artificial intelligence and machine learning to solve problems like arrangement issues and help you stay inspired in real-world sessions.

This way, you don’t have to worry about beat block, track pacing, or where to start ever again.

Final Thoughts

And there you go: everything you need to know about machine learning vs AI.

With this new information, you can make smarter production decisions, streamline your workflow, and start using intelligent tools that actually understand how you work.

Plus, you’ll really understand what it takes to use AI in a way that feels natural, inspiring, and tailored to your sound, not just random tech buzz.

This way, you’ll be able to lay down better tracks, finish your sessions faster, and sharpen your instincts with tools that adapt to you. You’ll also create cleaner mixes, discover new ideas you wouldn’t think of alone, and avoid falling into repetitive or robotic patterns.

And again, if you want access to the absolute best AI plugins in the game, I got you covered, big time.

From hard-hitting drum sequencing to chord generation to the AI bassline generator that can literally match your mix energy with subharmonic precision, it’s all right there.

Just remember, it’s all about using these tools to enhance your production skills and sound design workflow, not replace your creativity at all.

And by taking advantage of machine learning vs AI, you’ll always stay ahead, stay inspired, and keep producing like a pro.

Whether it’s melodies, vocals, or basslines, you can really blow the competition out of the water.

So get in there, test things out, and let your next AI bassline generator do some of the heavy lifting. Just make sure you stay in control.

Until next time…
