One of the most common questions I’ve gotten lately is about the debate between AI vs human mixing.

As producers, knowing which way to go is an absolute must.

It’ll help you understand what’s best for your workflow, give you full control over your mix, and seriously improve your decision making as you build your track from scratch.

Plus, you’ll be able to spot when AI tools can actually help you, and when you’ll get better results from a human engineer.

It’s not just about automating routine tasks anymore; it’s about the sound quality, the creativity, and how your mix feels emotionally.

And trust me, in today’s age, that matters more than ever.

That’s exactly why I’m breaking down everything you need to know about AI vs human mixing, like:

- Speed and performance ✓

- Mixing quality across genres ✓

- Creative flexibility ✓

- Emotional connection ✓

- The importance of human judgement ✓

- AI algorithms and decision-making ✓

- Limitations of AI alone (pro insight) ✓

- Top artificial intelligence mixing tools/plugins ✓

- Real-world use cases & ethical concerns ✓

- When to use humans vs AI ✓

- Will AI be replacing humans? ✓

- The combination of AI and human edge ✓

- Much more about AI vs human mixing ✓

By the end, you’ll finally know all about AI vs human mixing so you can work faster, sound better, and stay ahead in today’s world of music production.

Plus, you’ll be able to balance your time, make better mixing decisions, and avoid common mistakes that AI systems tend to make.

This way, you can deliver high-level, pro-quality mixes like an absolute boss.

And, you’ll never have to worry about second-guessing your mixing setup ever again because you’ll have a super solid understanding of everything.

Table of Contents

- AI vs Human Mixing: The Ultimate Showdown

- AI vs Human Mixing (Key Differences)

- Pros & Cons of AI vs Human Mixing

- When Does AI Mixing Make the Most Sense?

- When Is Human Mixing the Better Call?

- The Hybrid Approach: Human Creativity Meets AI Tools

- Top AI Mixing Plugins

- The Future of AI Mixing for Producers

- Bonus: AI Mixing Tips & Techniques

- Final Thoughts

AI vs Human Mixing: The Ultimate Showdown

As the music production world evolves, the line between AI vs human mixing continues to blur. Both sides offer serious power, that’s for sure, but knowing how they stack up against each other will change how you mix forever. So, let’s start with the basics of AI vs human mixing so we’re all on the same page.

What Is AI Mixing?

AI mixing is when you use artificial intelligence to help do things like balance levels, EQ, compression, and even stereo imaging automatically across your track.

For example, you can drag in a vocal stem, and the AI system might recognize harshness around 3.2kHz, cut it, compress the vocal with a 4:1 ratio at a -18dB threshold, and then apply light de-essing without you ever touching a knob.
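To make those numbers concrete, here’s a rough Python sketch of what a 4:1 ratio at a -18dB threshold does to a signal. It assumes a simple hard-knee compressor with no attack or release smoothing, and the function name is made up for illustration. It is not how any particular plugin works under the hood.

```python
def compressor_gain_db(level_db: float, threshold_db: float = -18.0, ratio: float = 4.0) -> float:
    """Gain change in dB (zero or negative) for a hard-knee compressor at one input level."""
    if level_db <= threshold_db:
        return 0.0  # below the threshold the signal passes unchanged
    # Above the threshold, every `ratio` dB of input becomes 1 dB of output.
    over = level_db - threshold_db
    return over / ratio - over  # negative number = gain reduction


for level in (-24.0, -18.0, -10.0, -6.0):
    reduction = compressor_gain_db(level)
    print(f"in {level:6.1f} dB -> out {level + reduction:6.1f} dB ({reduction:+.1f} dB)")
```

So a vocal peak hitting -10dB comes out around -16dB, while anything under -18dB is left alone.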

You’ll also notice some AI tools applying a low cut at 80Hz on vocals, tightening up a kick with compression at a 10ms attack and 100ms release, or applying subtractive EQ to carve space for the bass.

It’s all based on machine learning, which means the plugin’s trained on thousands of professional mixes, pulling from large amounts of data to make fast decisions.

These AI tools are designed to handle routine tasks that take time to get right, like taming mud in the low-mids or catching overlapping frequencies between the bass and kick.

For instance, if your kick is clashing with the bass, some AI-driven plugins will auto-detect the masking and create sidechain compression at specific dB thresholds.

This will help you create instant clarity across the low end.

That said, while AI excels at fast processing and technical accuracy, it still struggles when human judgement and contextual understanding are needed.

Like knowing when to leave something a little imperfect for vibe, for example.

Maybe you want a dry vocal in the first half of the verse for intimacy, even if the AI wants to throw on reverb based on textbook settings.

So in the bigger picture of AI vs human mixing, AI helps you move fast, but the human still makes it feel right and emotionally on point.

Human Mixing: Breaking it Down

Human mixing is all about making choices by ear, not just based on numbers or graphs, but by feel, emotion, and real-world experience.

A human engineer might hear a verse and push the reverb tail longer during the last word to make it more dramatic, even if that choice technically “breaks” the mix.

You’re not relying on data analysis; you’re relying on your own ears, references, and creative decision-making to shape every move in the mixing process.

Maybe you pan the guitars wider in the chorus not because the frequency analyzer told you to, but because it makes the whole track explode with emotional impact…

Well, this is where human creativity, nuance, and vibe come into play: the things that AI systems still can’t replicate, even with generative AI.

In the ongoing AI vs human mixing discussion, this is what makes the human touch so valuable because it’s not about perfection, it’s about feel, quality, and connection.

AI vs Human Mixing (Key Differences)

Now that we’ve got a solid grip on what each one does, it’s time to really lay out where AI vs human mixing separates, and how those differences can seriously affect your mix. Let’s break down everything you need to know about AI vs human mixing in terms of speed, consistency, creativity, and sound quality across different styles.

Speed & Efficiency

When it comes to speed, AI tools move insanely fast and that’s where AI vs human mixing starts to split.

For example, an AI engine might analyze your whole track, clean up low-mids at 250 Hz, add multiband compression, and set peak output to -1dB, all in under 15 seconds.

A human engineer, on the other hand, might take 45 minutes to reach that same balance with the same precision.

This is because they’re adjusting each element by ear and referencing a pro mix to guide the moves and make sure everything is on point.

If you’re tight on time or working through a big batch of songs, this kind of efficiency can help you create more and keep your workflow moving.

Just don’t forget that speed sometimes trades off with emotional connection.

Consistency vs Flexibility

AI systems are consistent (like scary consistent) and that can be a blessing or a curse depending on the unique situation.

For example, an AI mix assistant will always balance vocals the same way if the data tells it that’s the optimal balance for pop music.

But sometimes you don’t want that… Maybe the artist’s vibe is a little raw or gritty, and a rigid preset would just perform worse.

That’s where human judgement steps in.

A human engineer might ride the fader manually during each hook to give it that push-pull tension that an AI alone wouldn’t even think to try.

Remember, it’s all about the little things that make a beat really hit people right in the feels, so always keep that in mind when debating AI vs human mixing.

Creative Intent vs Predictive Artificial Intelligence Algorithms

Here’s where the split in AI vs human mixing gets obvious because AI algorithms are smart, but they don’t actually feel the music.

Let’s say you’re working on a lofi track where the vocal intentionally clips a bit for aesthetic effect. An AI tool might see that as a mistake and try to “fix” it.

On the flip side, a human engineer knows when to leave things imperfect on purpose to preserve the emotional impact of the track.

That personal touch makes all the difference, especially in genres that thrive on vibe over polish (which, let’s be honest, is pretty much all of them).

That’s why, in my opinion, AI is great for certain tasks or foundations, but it shouldn’t be the end all be all.

Quality Across Genres & Mixing Styles

Across popular genres right now, AI mixing can be hit or miss.

This is because AI nails the predictable stuff, but often struggles when things get experimental or if you want to switch things up real quick.

For example, in EDM, where the kick and bass need to slam with exact clarity, AI can absolutely perform at a high level, no doubt about it.

But drop that same AI into a jazz fusion track or something with odd time signatures and shifting dynamics, and it’ll likely fall apart fast.

That’s where a human engineer shines, adjusting in real time and making decisions based on feel, not just pattern recognition.

But remember, I’m all about using AI technology to my advantage for routine tasks, and I highly recommend you take advantage of it as well.

Pros & Cons of AI vs Human Mixing

One of the biggest upsides of AI mixing is how fast you can get a full mix going, especially when you’re juggling multiple tracks and trying to meet a deadline.

For example, certain AI plugins can give you a ready-to-go starting point in under a minute, which is wild when you’re on a tight schedule.

Plus, AI systems are great at automating routine tasks like:

- EQ cleanup

- Stereo width adjustments

- Balancing audio levels

This is especially true when the genre is predictable like pop or EDM.

But here’s the downside: AI alone doesn’t always get the emotional connection right. It can’t fully read the vibe of the track, so sometimes it ends up sounding flat or robotic.

That’s where human mixing holds the edge.

Any human engineer knows when to leave in the grit, push a vocal a little too loud for tension, or drop the bass slightly off-center for feel.

That kind of human creativity and personal touch can’t be replaced, not even by the most advanced AI tools.

So when you’re comparing AI vs human mixing, it’s really about knowing which trade-off (human judgment vs convenience) works for what you’re trying to create.

When Does AI Mixing Make the Most Sense?

If you’re in the middle of making an idea come to life and don’t want to lose the flow, AI mixing is a straight-up lifesaver.

This could be like the basic decision-making tasks we talked about earlier.

For example, let’s say you’re an independent artist trying to upload a demo to SoundCloud before midnight.

Dropping your stems into an AI system like Neutron 5 can get you a polished mix in minutes without opening a hundred plugins.

That kind of speed and automatic decision making can make all the difference when time is tight and your tasks are piling up.

AI excels at pulling from large data sets to handle basics like gain staging, EQ balance, and stereo field cleanup (especially when your track needs a solid starting point fast).

In the debate of AI vs human mixing, these tools shine brightest when you’re on a deadline, low on budget, or just want to keep your process moving without overthinking every little move.

For example, you’ve got a full 10-song beat tape due tomorrow, right?…

Well, instead of manually EQing every snare and kick, an AI tool can auto-detect and apply different settings to each drum group using genre-specific presets, saving hours.

Another example: if you’re producing content for clients, like a podcast or YouTube intro, and they want a final mix within an hour, AI can deliver all day.

NOTE: Some platforms now even offer real-time AI mastering with genre-matched loudness targets. For instance, a trap track might get a tighter 808 response and slightly higher LUFS (-9.5) compared to a chill R&B mix hitting around -13 LUFS.
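If you’re curious what those loudness targets mean in practice, here’s a quick sketch of the arithmetic. It assumes you already have an integrated LUFS reading from a loudness meter (the -16 below is a made-up example), and the function names are just for illustration.

```python
def gain_to_target_db(measured_lufs: float, target_lufs: float) -> float:
    """Static gain in dB that moves a track from its measured loudness to the target."""
    return target_lufs - measured_lufs


def db_to_linear(gain_db: float) -> float:
    """Convert a dB gain into the factor you would multiply samples by."""
    return 10 ** (gain_db / 20)


measured = -16.0  # hypothetical integrated loudness of your mix, read from a meter
for genre, target in (("trap", -9.5), ("chill R&B", -13.0)):
    gain = gain_to_target_db(measured, target)
    print(f"{genre}: {gain:+.1f} dB (x{db_to_linear(gain):.2f})")
```

Keep in mind this is only static gain. Pushing a mix up by 6.5dB will usually send peaks over 0dBFS, which is why mastering tools pair the gain with a limiter.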

And remember, you’re not giving up control, you’re just letting AI tools help with routine tasks so you can focus on creativity, vibe, and finishing the song.

So, in AI vs human mixing, that’s sometimes the only way to stay on top of both performance and progress.

When Is Human Mixing the Better Call?

There are moments in music production where you just can’t rely on presets or machine-driven decisions.

And that, my friends, is when human mixing wins in the AI vs human mixing debate, hands down.

For example, if you’re working on an emotional ballad or anything that leans on emotional impact, you’re gonna want a human engineer who understands how to:

- Build tension

- Make ample space

- Tweak the overall feel over time

These kinds of mixes often involve complex automation moves, subtle saturation, and tiny EQ shifts that would confuse even the best AI tools.

Not to mention, human mastering is still the go-to when you need custom detail on the final polish because, again, it’s about emotion, not just performance metrics.

In the bigger picture of AI vs human mixing, this is where the personal touch and human creativity matter more than any preset or machine learning model ever could.

That’s also what researchers have reported in studies on the topic, so keep that in mind.

And think about it: if you’re mixing a live indie band where the drums have natural bleed, the vocals have imperfect timing, and the entire vibe comes from organic interaction, would you really trust an AI system to know what to keep and what to clean?

Well, that’s where human judgement comes into play, like using a 2.5:1 ratio on parallel vocal compression, not because it looks right, but because it feels right.

Or, maybe the pre-chorus needs a slight boost at 1.2kHz just on the hi-hat bus to add lift without actually increasing volume.

This is something an AI alone might miss because it’s not part of the typical dataset.

Even in the mastering process, an experienced human mastering engineer can hear when a stereo image feels too wide for vinyl or when the kick is just a little too front-loaded for a vocal-heavy mix.

Things that go way beyond the baseline performance of an algorithm.

So when the vibe is fragile, custom, or experimental, AI vs human mixing doesn’t even feel like a fair fight. Humans working by feel still carry the edge every time.

The Hybrid Approach: Human Creativity Meets AI Tools

Now here’s where things get really powerful… when you start combining humans and AI tools into the same workflow.

You don’t need to choose between human and AI because you can always combine them.

I suggest you let AI handle the first pass of your mix, like setting audio levels, balancing the spectrum, maybe even suggesting panning setups based on data analysis.

And then, step in as the human to tweak the track until it feels right.

For example, you can use Neutron 5 to do the initial cleanup and gain staging, then start mastering (or bring in a human mastering engineer) to add final:

- Saturation

- Stereo width

- Vibe tweaks

- Etc.

That way, you’re using the technology for routine tasks, and leaving the art to the humans who know what emotion actually sounds like.

Bottom line, in the AI vs human mixing world, this kind of hybrid approach opens up new opportunities to work faster and smarter without losing that real sound quality that separates pros from amateurs.

Side note, if you want to learn more about AI in the music production world (in general), I got you.

Top AI Mixing Plugins

When you’re weighing out AI vs human mixing, it helps to know which AI tools are actually worth your time in the studio. These plugins don’t just guess; they analyze, process, and help shape your mix using AI algorithms, real-time adjustments, and built-in genre awareness.

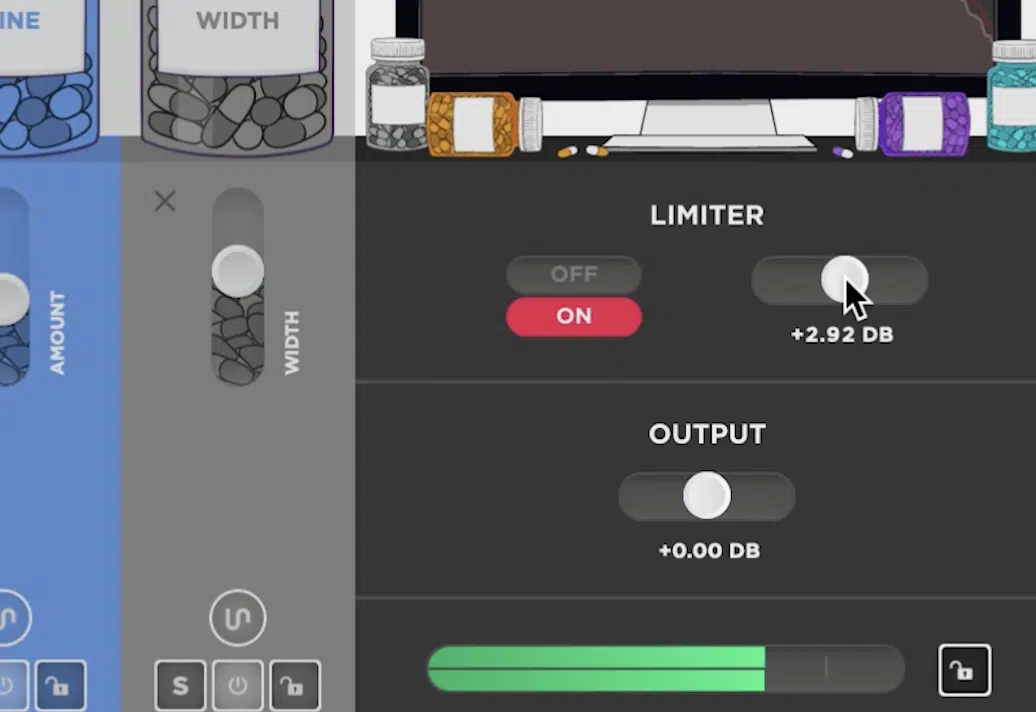

Sound Doctor

Sound Doctor by Unison Audio is a game-changing AI-powered plugin designed to streamline your mixing process by generating complex FX chains tailored to your track’s style.

With over 250 factory presets and the ability to create endless variations, it covers five distinct styles:

- Aggressive

- Clean

- Dirty

- Dreamy

- Lo-Fi

Each has eight unique formulas, which is awesome.

For example, selecting the “Aggressive” style might apply a chain that includes a 4:1 compression ratio, a +3dB boost at 60Hz for punchy bass, and a stereo widening effect to enhance the mix’s impact.

It also features 25 high-quality effects, including saturation, EQ, reverb, delay, and more, which can be stacked up to eight per chain.

You can easily customize these chains by adjusting parameters like dry/wet mix, effect order, and individual effect settings for both quick fixes and detailed sound shaping.

For instance, you might tweak the reverb decay time from 2.5 to 3.5 seconds to add more space to a vocal track, and things like that.

Sound Doctor also includes a master control center for global adjustments, such as final EQ tweaks and limiting.

This way, your track meets professional standards.

Its intuitive interface and AI-driven suggestions make it an invaluable tool for music producers looking to enhance their mixes efficiently while keeping creative control.

When it comes to the best AI mixing plugins, you definitely cannot miss out on this one, for real.

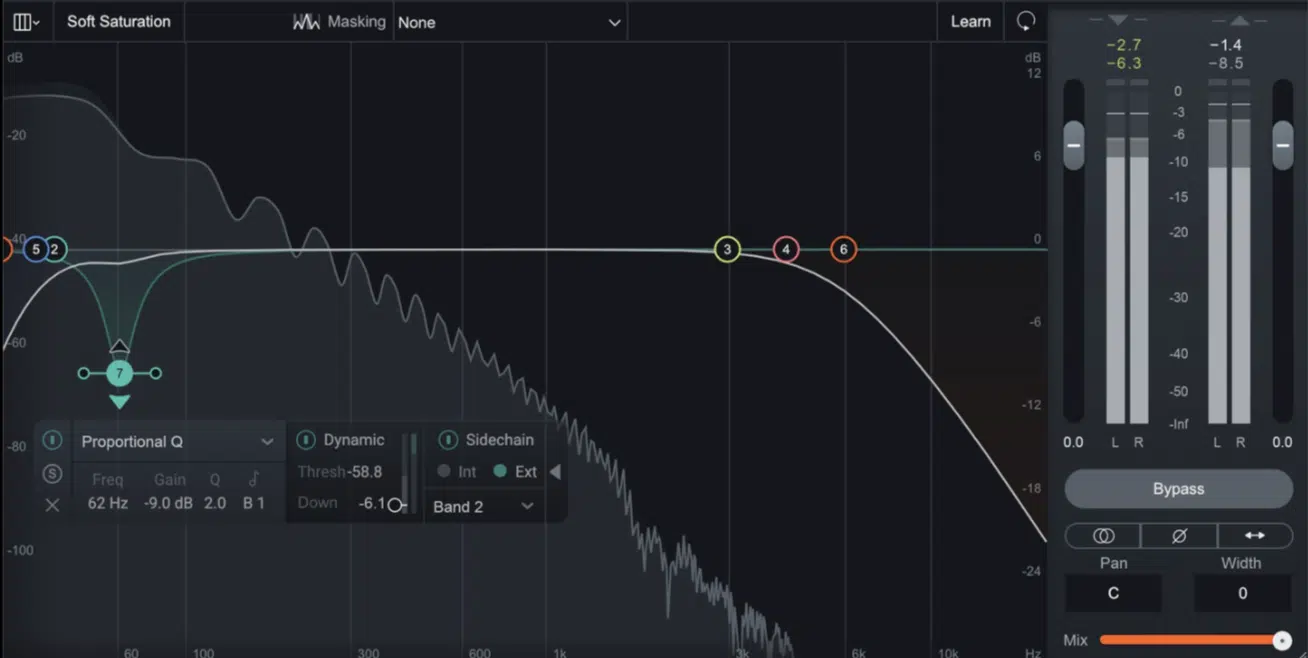

iZotope Neutron 5

Neutron 5 uses machine learning to deliver automatic mix suggestions, from EQ shapes to compression thresholds, with a visual Mix Assistant that adapts based on your input signal.

You get tools like Sculptor, which applies dynamic spectral shaping across multiple bands, and Masking Meter, which shows overlapping frequencies between instruments in real-time.

It’s perfect for identifying balance problems quickly in a track.

And, in the AI vs human mixing conversation, it gives producers a clean starting point before applying more emotional or creative human adjustments.

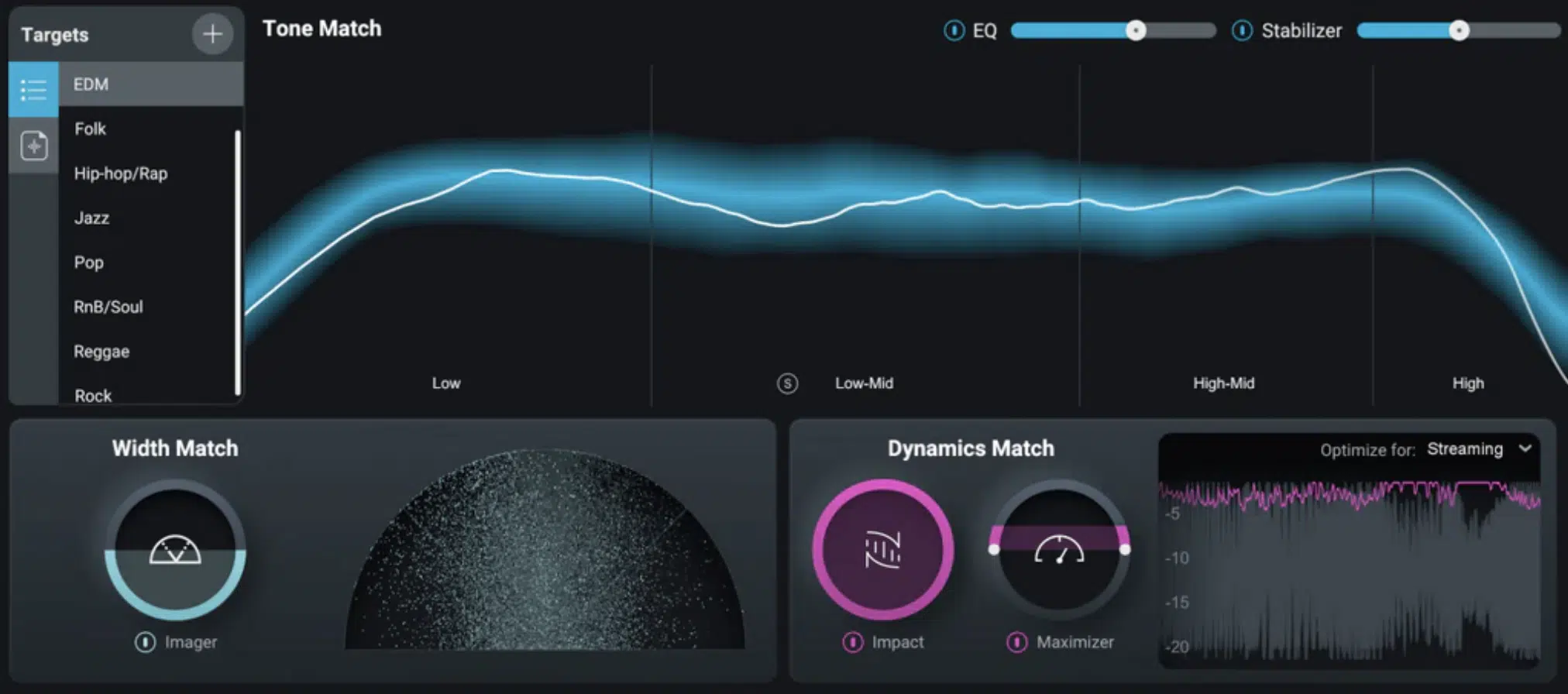

Sonible smart:engine Series

The Sonible smart:bundle plugins analyze your track and apply real-time mixing adjustments using genre-specific AI profiles. The lineup includes:

- smart:EQ 4

- smart:comp 2

- smart:reverb

- smart:limit

- smart:deess

- smart:gate

For example, smart:EQ can instantly remove muddiness at 200Hz or harshness around 3kHz depending on the instrument.

Meanwhile, smart:comp learns the envelope of your audio to apply intelligent compression curves.

These AI tools are precise and transparent, and when it comes to AI vs human mixing, they’re great for routine shaping.

But, they still need your human judgement when you want a mix that really connects emotionally, so always keep that in mind.

The Future of AI Mixing for Producers

In recent years, we’ve seen AI tools go from gimmicky add-ons to legit contenders in the music production space, and this shift isn’t slowing down anytime soon, trust me.

Developers are training AI algorithms using large amounts of data, pulled from high-end human mastering sessions and mix stems across every genre.

For example, in one case study, researchers compared AI-generated mastering against traditional human mastering engineer workflows.

And, while AI systems hit the right LUFS and frequency targets, they still performed worse in perceived sound quality and emotional tone.

That’s because emotional connection, human creativity, and decision making still matter more than just hitting loudness curves or RMS targets.

But with advances in generative AI, more plugins are starting to recognize intent, like when a track’s meant to feel aggressive or dreamy.

This shows real future potential for merging tech with vibe (almost scary, right?).

The real growth area is in combining humans and AI tools, not replacing them, so the mastering process stays flexible while the technical tasks get handled faster.

All-in-all, if the world keeps moving in this direction, AI vs human mixing won’t be a debate. It’ll be about building systems where both can create better beats together.

Bonus: AI Mixing Tips & Techniques

If you’re still figuring out where to start with your own mixes, these quick tips/techniques will help you get the most out of AI tools. So, when you’re trying to balance the whole AI vs human mixing situation, definitely keep these in mind. These aren’t just shortcuts; they’re part of a smarter process that keeps the performance high while still letting you lead the way to perfection with human judgement.

Start With AI-Generated Mixes/Data Analysis, Then Tweak By Ear

One of the best ways to use AI systems without giving up your own style is to start with an AI-assisted balance, then step in and make the emotional calls yourself.

For example, let AI tools handle your initial gain staging, low-cut EQ on vocals around 100Hz, and ducking reverb tails.

You could even let the AI tighten up the low end by sidechaining your 808 to the kick with a 10ms attack and -6dB threshold, or cut 400Hz from your instrument bus to clean up that muddy midrange, all while you’re still figuring out the creative flow.

Then, you should take over and decide if the chorus needs a 1.5dB vocal lift for emotional impact (whatever matches your unique vibe/genre/style).

You might also choose to widen the stereo field just during the drop, automate a slap delay in the bridge, or leave in a slight vocal crack that the AI would’ve erased.

Because those are the creative decisions that define your sound.

This saves time while keeping your personal touch alive, which is exactly how we stay creative without relying on AI alone.

In the AI vs human mixing world, this kind of split workflow makes sure the track stays professional, emotional, and still 100% yours.
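For the curious, the sidechain move mentioned in this tip (ducking the 808 under the kick with a 10ms attack and a -6dB threshold) can be sketched in a few lines of Python. This is a bare-bones envelope follower working on plain lists of samples. The 4:1 ratio, the 100ms release, and the function name are my own assumptions for the example, not settings taken from any specific AI tool.

```python
import math


def sidechain_duck(bass, kick, sample_rate=44100, threshold_db=-6.0,
                   ratio=4.0, attack_ms=10.0, release_ms=100.0):
    """Turn `bass` down whenever the smoothed `kick` level passes the threshold."""
    # One-pole smoothing coefficients derived from the attack and release times.
    attack = math.exp(-1.0 / (sample_rate * attack_ms / 1000.0))
    release = math.exp(-1.0 / (sample_rate * release_ms / 1000.0))
    envelope = 0.0
    ducked = []
    for b, k in zip(bass, kick):
        level = abs(k)
        coeff = attack if level > envelope else release
        envelope = coeff * envelope + (1.0 - coeff) * level
        env_db = 20.0 * math.log10(max(envelope, 1e-9))
        over = max(env_db - threshold_db, 0.0)
        gain_db = over / ratio - over  # 0 below threshold, negative above it
        ducked.append(b * 10.0 ** (gain_db / 20.0))
    return ducked


# Toy signals: a 50ms kick burst followed by 50ms of silence, under a steady 808.
kick = [1.0] * 2205 + [0.0] * 2205
bass = [0.5] * 4410
ducked = sidechain_duck(bass, kick)
print(f"start {ducked[0]:.3f}, end of kick {ducked[2204]:.3f}, after release {ducked[-1]:.3f}")
```

The 808 starts untouched, dips while the kick is sounding, then eases back up once the kick stops. How deep and how fast it dips is exactly the kind of thing you’d still tune by ear.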

Use Reference Track Matching (Human Mastering Must)

One other easy way to bridge the gap between AI vs human mixing is to feed your AI tools a reference track that matches the sound you’re aiming for.

For example, you can load in a professionally mastered hip-hop song, and the AI system will analyze things like:

- EQ curve

- Loudness

- Width

- Transient response

Then it guides your mix toward a similar baseline.
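Here’s a very rough sketch of the idea behind that kind of comparison. It only covers two of the items above (level and width), it uses plain RMS instead of true LUFS, and every name in it is hypothetical. Real reference-matching plugins do far more than this.

```python
import math


def rms_db(samples):
    """RMS level of a block of samples in dB relative to full scale."""
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20.0 * math.log10(max(rms, 1e-9))  # floor avoids log of zero on silence


def stereo_metrics(left, right):
    """Rough level and width numbers for one stereo clip."""
    mid = [(l + r) / 2.0 for l, r in zip(left, right)]
    side = [(l - r) / 2.0 for l, r in zip(left, right)]
    return {
        "level_db": rms_db(mid),                 # plain RMS, not true LUFS
        "width_db": rms_db(side) - rms_db(mid),  # higher = wider
    }


def match_report(mix, reference):
    """How far each metric of the mix sits from the reference (reference minus mix)."""
    return {name: reference[name] - mix[name] for name in reference}


# Tiny made-up clips standing in for real audio.
my_mix = stereo_metrics([0.5, -0.5] * 4, [0.3, -0.3] * 4)
reference = stereo_metrics([0.8, -0.8] * 4, [0.2, -0.2] * 4)
for name, diff in match_report(my_mix, reference).items():
    print(f"{name}: {diff:+.1f} dB")
```

A positive number means the reference is louder or wider than your mix on that metric, which tells you which direction to move, not how far you should actually go.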

Some plugins even let you isolate certain sections (like the chorus or intro) and adapt the tone or compression style based on the specific energy of that moment.

This helps the AI understand where you’re trying to go sonically while giving you space to make creative tweaks along the way.

You might keep the same vocal brightness and kick punch from the reference, but then dial in a slightly darker snare or wetter reverb to match your own style.

That’s where human creativity steps in, my friends.

It’s a smart combo of data analysis and decision making that still leaves room for your own human creativity to really shine.

And when you’re balancing AI vs human mixing, this method lets the machine do the math while you shape the soul of the track.

Always Recheck Dynamic Range (Not Just Loudness)

AI-generated mixes might hit the right LUFS or peak levels, but they can miss the mark when it comes to dynamic range, which is where sound quality really lives.

For example, a mix with vocals slammed at -6 LUFS might be loud, but it could also feel flat if the chorus doesn’t open up with enough headroom.

You might get a wall of sound that technically meets the loudness target, sure, but emotionally, it just doesn’t land…

That’s why it’s key to zoom in on the mastering process, recheck your track’s crest factor, and make sure your verse breathes more than the drop.

Side note, try looking for a 10–14dB difference between your peak and RMS levels in dynamic sections, or check that your sidechain compression isn’t choking your drums in quiet moments.

Yes, even if the AI already “finalized” it.

Sometimes that means going back in and manually automating vocal levels, or even bypassing the AI’s compression to let a moment feel more raw/more human.

In the AI vs human mixing debate, this is one of those tasks where human judgement adds something AI can’t always measure or live up to.
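If you want to check crest factor yourself instead of trusting a meter, here’s a minimal sketch. It assumes your audio is already loaded as a list of float samples between -1 and 1, and the helper name is hypothetical.

```python
import math


def crest_factor_db(samples):
    """Peak-to-RMS difference in dB for a block of float samples."""
    peak = max(abs(s) for s in samples)
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    if rms == 0.0:
        raise ValueError("silent block: crest factor is undefined")
    return 20.0 * math.log10(peak / rms)


# A pure sine wave sits around 3dB; a short transient over a quiet bed is far higher.
sine = [math.sin(2.0 * math.pi * i / 100.0) for i in range(1000)]
transient = [1.0] + [0.1] * 99

print(f"sine:      {crest_factor_db(sine):.1f} dB")
print(f"transient: {crest_factor_db(transient):.1f} dB")
```

Run it on a verse and on the drop separately. If the dynamic sections come back well under that 10dB mark, the mix is probably more squashed than it needs to be.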

Use Genre-Specific AI Profiles/Custom Presets

Most modern AI systems now come with genre-specific profiles that adjust EQ curves, compression styles, and stereo width based on what works best for each style.

For example, a trap preset might boost the low-end at 50Hz and tame the highs at 8kHz to keep 808s punchy and vocals smooth, while a rock preset does almost the opposite.

Some AI tools even recognize tempo and rhythmic density, using that info to suggest different attack and release times on compressors.

This could be like tightening up a fast-paced DnB track with 20ms attack and 100ms release, versus softening a ballad with slower dynamics.
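One way to see why tempo matters for those times is to compare them to note lengths. The sketch below is just that arithmetic. The 174 and 70 BPM tempos are my own example values, and tying release time to note length is an assumption about how a tempo-aware tool might reason, not documented behavior of any plugin.

```python
def note_length_ms(bpm: float, division: int = 16) -> float:
    """Length of one note division in milliseconds (4 = quarter, 16 = sixteenth)."""
    beat_ms = 60_000.0 / bpm  # one quarter-note beat
    return beat_ms * 4.0 / division


for style, bpm in (("DnB", 174), ("ballad", 70)):
    print(f"{style} at {bpm} BPM: sixteenth note = {note_length_ms(bpm):.0f} ms")
```

At 174 BPM a sixteenth note lasts about 86ms, so a 100ms release recovers in roughly one sixteenth. On a 70 BPM ballad that same 100ms is less than half a sixteenth, which is why slower material can take much longer settings.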

These kinds of tools speed up your mixing process and give you a high level starting point that’s actually built for your sound (especially useful for independent artists).

You could be working on a deep house track, for example, and the AI might widen the synths, attenuate kick spill in the side channel, and give you instant tonal balance without you ever pulling up a spectrum analyzer.

Still, even with smart presets, it’s up to humans working behind the screen to make the final decisions, especially when emotional impact is on the line.

That’s the difference between something that sounds “correct,” and something that feels right in the context of your track’s story, energy, and emotion, which is what the AI vs human mixing conversation always circles back to.

Final Thoughts

At the end of the day, AI vs human mixing isn’t about picking a side. It’s about knowing when to lean on the right tools for the right reasons.

As producers, what matters most is creating something that moves people. Sometimes that takes speed and automation, while other times it takes patience and feel.

The real power comes when you understand how to combine your instincts with smart technology for the perfect balance.

So, whether you’re using AI to automate routine tasks or trusting your ears for that final decision, the key is staying in control of the process.

AI vs human mixing is just one of many decisions we face, but when you make the right one, your music ends up exactly where it needs to be.

So keep experimenting, trust your gut, think outside of the box, always get involved with new AI technology, and let the mix serve the song every single time.

Until next time…
