AI (artificial intelligence) is basically everywhere you look in the music industry, whether it’s something you want to see or not.

From AI-generated vocals to tools that help you produce music faster than ever, it’s all already happening.

But the question I get asked all the time is: what is the future of AI in music production?

Well, I’m here to break it all down for you, including things like:

- Personalized AI trained on your style ✓

- AI’s role today and where it’s headed ✓

- Emotion-aware music creation ✓

- AI music that evolves with your sessions ✓

- AI-generated music for the metaverse ✓

- Next-level vocal cloning (and any legal concerns) ✓

- Music generation must-knows ✓

- Real-time AI reaction to listener data ✓

- Royalty splits powered by AI ✓

- AI-powered tools for digging samples ✓

- Fully autonomous music studios ✓

- Mood-based workflow suggestions ✓

- Much more ✓

As a music producer, knowing where AI in music production is headed will give you a serious edge in the music industry.

Plus, you’ll be able to speed up your workflow, play around with new creative possibilities, and push past creative blocks with AI-generated music.

This way, not only will your tracks always be on point and your sessions flow more smoothly, but you’ll also be able to stay ahead in a music industry that’s shifting fast.

Bottom line, you won’t have to worry about falling behind or missing out on next-gen AI music technology.

Table of Contents

- The Future of AI In Music Production: Breaking it Down

- #1. Personalized AI Trained on Your Sound

- Bonus: Unison Audio’s AI Plugins Are Already Leading the Charge

- #2. Next-Gen AI Sample Digging

- #3. Real-Time Emotion-Based Music Composition

- #4. Fully Autonomous AI Music Studios

- #5. Adaptive AI That Evolves With The Music Created

- #6. Neural Interfaces for Direct Brain-to-DAW Music Creation

- #7. AI That Rewrites Music Based on Live Feedback

- #8. AR + AI Integration for Immersive Music Creation

- #9. Personalized Listener-Based Song Customization

- #10. Next-Level AI Vocal Replication

- #11. AI-Driven Contracts and Royalty Splits

- Tips For Using AI In Music Production (Your Unique Creative Process)

- Final Thoughts

The Future of AI In Music Production: Breaking it Down

Now that you know why it pays to understand the future of AI in music production, let’s actually get into it. The following sections cover the trends many people in the music industry expect to reshape the music business and the music production world. So, let’s break it down.

#1. Personalized AI Trained on Your Sound

Let’s kick things off with something that’s already becoming a big deal: personalized AI models that adapt to your unique sound and workflow.

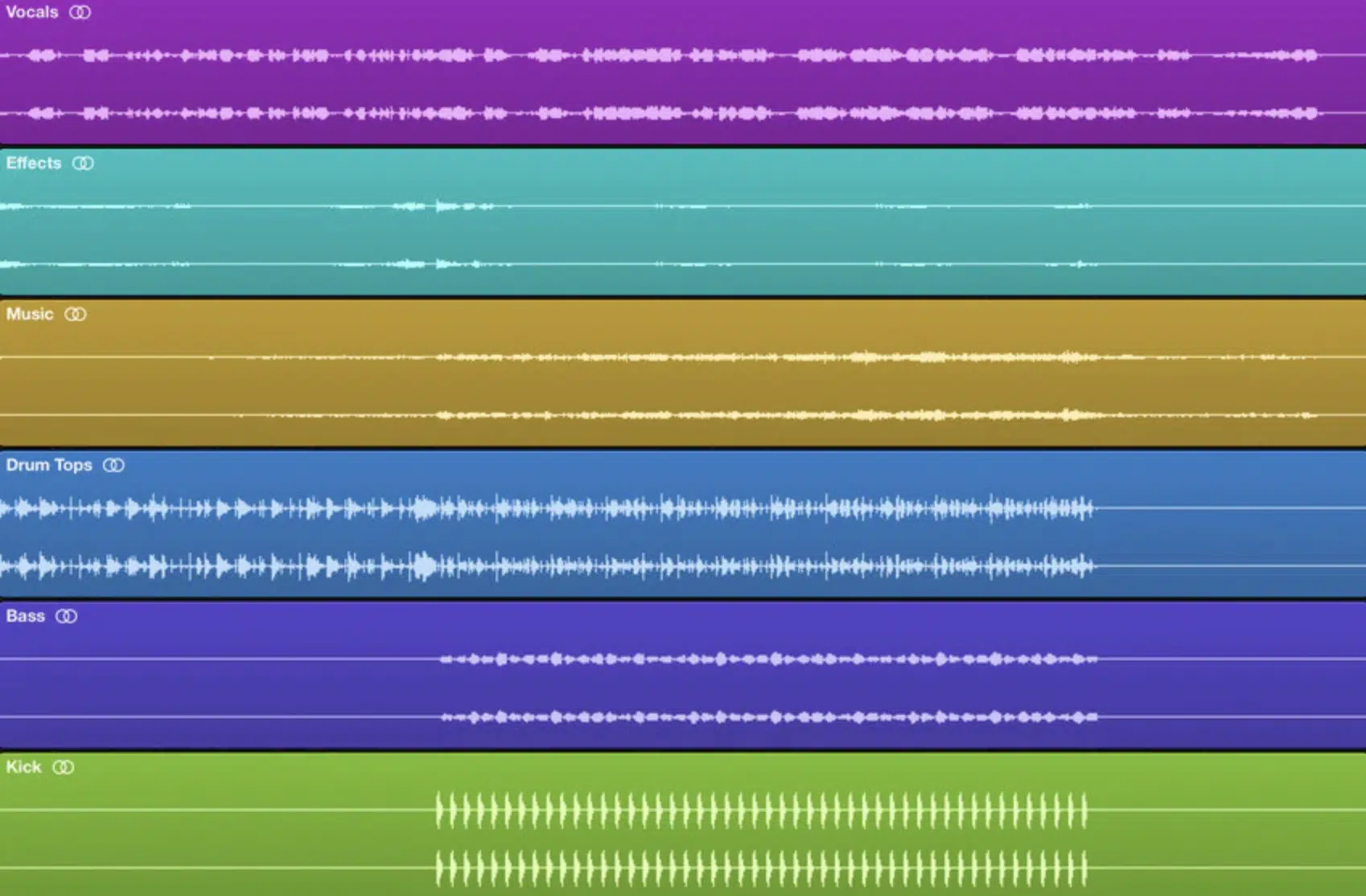

Instead of using generic presets, these AI systems actually learn how you like to produce music over time, picking up on things like:

- Your go-to synth patches

- Mixing and mastering decisions

- Even how you automate effects across different music genres

For example, you could feed your AI engine 200 tracks you’ve already made, and it would recognize your drum patterns, harmonic choices, vocal chains, and even your signature bass tones and other go-to moves.

It will help you create music that stays consistent with your brand, which is key when it comes to music promotion.

This kind of AI in music production unlocks way more than just speed: it also gives you creative control that supports human creativity rather than replacing it.

And as these AI tools continue to get smarter, they’ll be able to suggest changes in real time, keeping you in charge of the creative decisions while they handle the busywork.

That’s the beauty of the future of AI in music production: it’s about enhancing human creativity, not removing it (it’s an art form really, so practice makes perfect).

Bonus: Unison Audio’s AI Plugins Are Already Leading the Charge

If you’re ready to experience the future of AI in music production firsthand, Unison Audio’s AI plugins are some of the most powerful tools you can use.

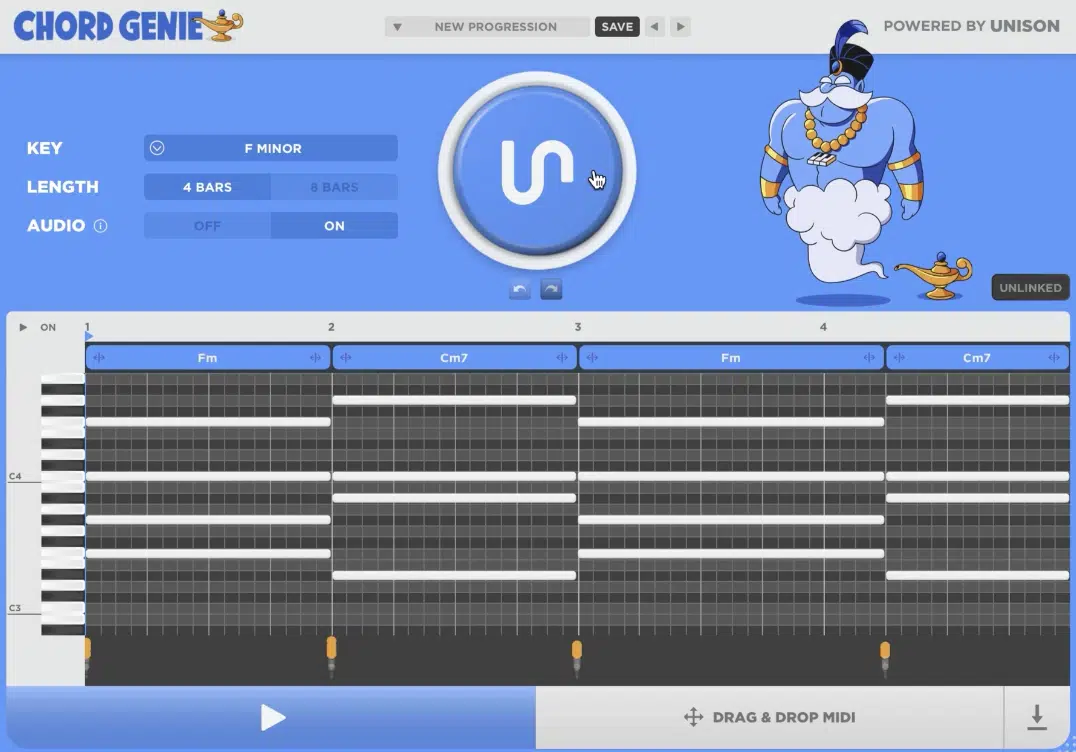

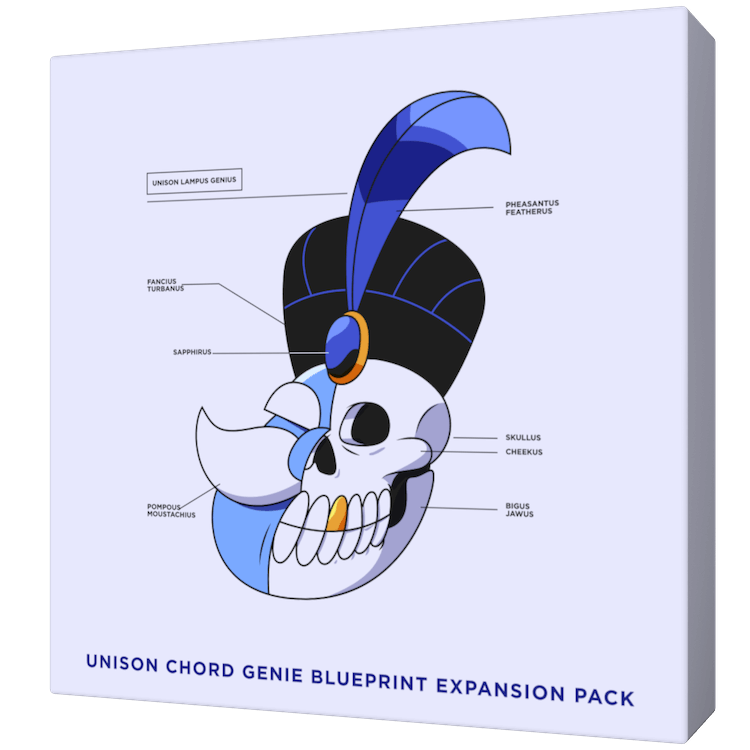

Chord Genie

Chord Genie instantly generates over 2.2 million custom chord progressions based on the exact key and emotion you select.

It lets you play around with endless harmonic options without needing to overthink theory or manually test voicings all day long.

Bass Dragon

Bass Dragon analyzes your chord progression and generates a perfectly locked-in bassline every time, complete with options to adjust:

- Rhythm

- Octave range

- Note density

- Even how much swing you want in your low-end groove

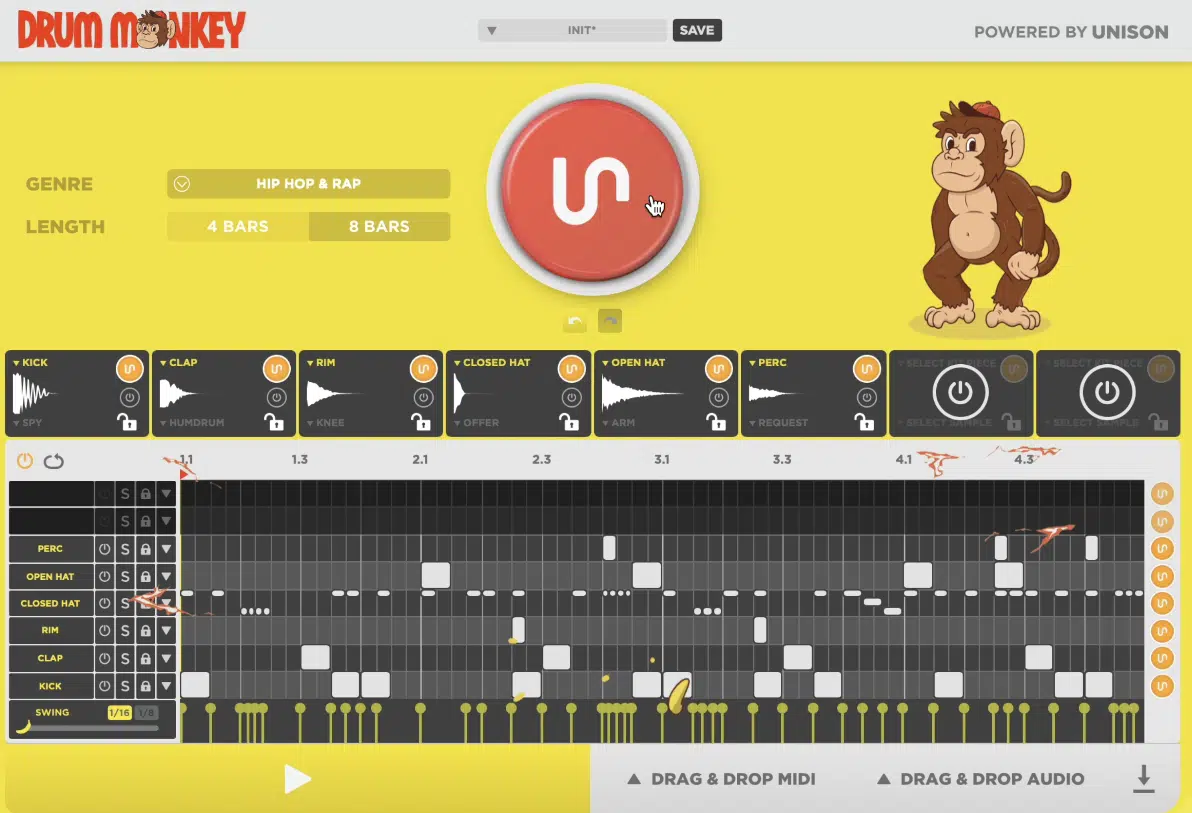

Drum Monkey

Then there’s Drum Monkey, which creates full drum patterns using AI trained on top-performing songs.

You can generate hard-hitting, mix-ready drums in any style with just a few clicks.

All three plugins are built to speed up the music creation process and enhance human creativity, not replace it, giving you more time to bang out killer beats.

So, if you’re serious about staying ahead, these AI-powered tools from Unison Audio are already showing exactly what the future of AI in music production looks like.

#2. Next-Gen AI Sample Digging

Sample digging has always been part of the music creation process, especially in genres like hip-hop where flipping obscure sounds is a skill in itself.

But now, AI-powered tools are changing the game by scanning vast amounts of music data (sometimes thousands of files per second) and picking out royalty-free music clips based on:

- Emotion

- BPM

- Specific genre

- Even texture
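To picture how that kind of filtering works, here’s a minimal Python sketch. It assumes every sample in your library already carries tags for emotion, BPM, genre, and texture (in a real tool, the AI would infer those tags from the audio itself), and the `Sample` class, tag values, and 3 BPM tolerance are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Sample:
    """A sample clip with hypothetical, pre-computed metadata tags."""
    name: str
    bpm: float
    genre: str
    emotion: str
    textures: set[str] = field(default_factory=set)


def find_samples(library, emotion, genre, target_bpm, bpm_tolerance=3.0, texture=None):
    """Return samples matching the requested vibe, closest BPM first."""
    matches = [
        s for s in library
        if s.emotion == emotion
        and s.genre == genre
        and abs(s.bpm - target_bpm) <= bpm_tolerance
        and (texture is None or texture in s.textures)
    ]
    # Closest tempo first: that sample needs the least time-stretching.
    return sorted(matches, key=lambda s: abs(s.bpm - target_bpm))


library = [
    Sample("reversed_vox_01", 140.0, "trap", "dark", {"reverb", "lofi"}),
    Sample("koto_loop_67", 67.0, "experimental", "dark", {"vhs"}),
    Sample("rhodes_flutter", 92.0, "lofi", "mellow", {"vinyl"}),
    Sample("choir_pad_138", 138.0, "trap", "dark", {"reverb"}),
]

for s in find_samples(library, emotion="dark", genre="trap", target_bpm=140):
    print(s.name)
```

Sorting by BPM distance means the sample that needs the least time-stretching shows up at the top of the list.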

Let’s say you’re working on a moody trap beat and want it to be perfect…

Your artificial intelligence assistant could pull in a dark reversed vocal from a 2007 Eastern European a cappella archive in under three seconds.

Maybe one that’s drenched in reverb, bitcrushed to 12-bit, and pitched down 4 semitones to give it that haunted, late-night bounce.

And if you want something more experimental, it might offer up a dusty koto loop filtered through a low-res VHS emulator, sitting at exactly 67 BPM to match your half-time groove without touching your grid.
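The processing in those examples comes down to some simple math. Here’s a hedged Python sketch: in 12-tone equal temperament, shifting by n semitones multiplies frequency by 2^(n/12), so pitching down 4 semitones lands at roughly 79% of the original frequency. The `bitcrush` function is a simplified model that only rounds amplitudes to a 12-bit grid and skips the sample-rate reduction many bitcrushers also apply.

```python
def semitone_ratio(semitones: float) -> float:
    """Frequency ratio for a pitch shift in 12-tone equal temperament."""
    return 2 ** (semitones / 12)


def bitcrush(samples, bits=12):
    """Round float samples in [-1.0, 1.0] to a reduced bit depth.

    Simplified: no clipping at full scale and no sample-rate reduction.
    """
    levels = 2 ** (bits - 1)  # 2048 steps per polarity at 12-bit
    return [round(x * levels) / levels for x in samples]


ratio = semitone_ratio(-4)
print(f"-4 semitones -> x{ratio:.4f}")  # -4 semitones -> x0.7937
print(bitcrush([0.123456, -0.5, 0.999], bits=12))
# [0.12353515625, -0.5, 0.9990234375]
```

The same ratio idea explains the half-time trick: a loop at 67 BPM lines up with a 134 BPM grid because 67 × 2 = 134.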

The future of AI in music production means you’re no longer limited to scrolling through 400 snares to find “the one.” Talk about a time-saver, am I right?

Now it’s about AI technology understanding your vibe and narrowing it down instantly, whether you’re after dirty vinyl hiss or a fluttering Rhodes lick.

This also opens up new music discovery possibilities for aspiring musicians and professional music producers alike, especially when AI tools start pairing the right sounds with your intent (without any formal music training required).

So whether you’re layering chopped vinyl textures or searching for glitchy background music, AI-powered search is making the creative process smoother than ever.

#3. Real-Time Emotion-Based Music Composition

One of the most exciting things about the future of AI in music production is how it’s starting to tap into real-time emotional input and use it to guide the overall music creation process, which is awesome.

We’re talking about AI technology that actually reads your mood through:

- Facial expressions

- Heart rate monitors

- Even just your playing style

Based on those signals, it can adjust melodies, harmonies, and rhythm to match that emotional energy on the fly.

For example, if you’re laying down a moody progression and your expression tenses up, the AI might pull in a darker chord voicing.

Or, it might drop the key a half-step and shift the tempo slightly to build more emotional depth, whatever works best.

And if you’re producing with a vocalist in the booth, the system can auto-adjust reverb and saturation based on the intensity of the take.

This will make the whole session feel more alive and responsive (kind of like having a mood-aware co-producer by your side).

What’s wild is that this isn’t sci-fi anymore…

Companies actually working with machine-learning algorithms are already developing AI-powered software that can interpret the emotional tone of a track and make compositional decisions in real time.

This type of emotionally intelligent AI is a huge step toward enhancing human creativity in music production, and it’s one of the clearest signs of where AI in music is headed.

Side note, if you want to learn everything about AI in the music production world right now, I got you covered.

#4. Fully Autonomous AI Music Studios

Now let’s talk about something that used to sound impossible…

Fully autonomous AI music studios that can generate, mix, master, and even promote a track from scratch without a human ever touching the mouse.

Imagine walking into your studio, giving a voice command like “Make me a synthwave track with a halftime groove.”

And within minutes, the AI has assembled a 3-minute demo using AI-generated music that fits your exact mood and target vibe.

From there, it can:

- Run the stems through automated EQ and compression chains

- Apply harmonic balancing

- Bring the final mix to a specified loudness target (in LUFS)

- Export high-quality music that’s literally ready for upload
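The loudness step is mostly arithmetic once the track has been measured. This Python sketch assumes the integrated loudness and true peak were already measured by a BS.1770-style meter (the measurement itself isn’t shown), and uses -14 LUFS and a -1 dBTP ceiling purely as example targets.

```python
def normalization_gain(measured_lufs: float, target_lufs: float = -14.0):
    """Gain needed to move a track's integrated loudness to the target.

    Assumes measured_lufs came from a BS.1770-style meter; computing
    loudness itself (K-weighting, gating) is out of scope here.
    """
    gain_db = target_lufs - measured_lufs
    linear = 10 ** (gain_db / 20)  # dB -> amplitude multiplier
    return gain_db, linear


def needs_limiting(true_peak_dbtp: float, gain_db: float, ceiling_dbtp: float = -1.0) -> bool:
    """True if applying the gain would push peaks above the ceiling."""
    return true_peak_dbtp + gain_db > ceiling_dbtp


gain_db, linear = normalization_gain(measured_lufs=-18.5)
print(f"gain: {gain_db:+.1f} dB (x{linear:.3f})")  # gain: +4.5 dB (x1.679)
print("limiter needed:", needs_limiting(true_peak_dbtp=-2.0, gain_db=gain_db))  # True
```

If the gain would push peaks past the ceiling, an automated chain would need a limiter (or a lower target) rather than plain gain.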

And the craziest part is that these AI systems can even analyze current trends in the music industry (let’s say what’s poppin’ on Apple Music or TikTok) and adjust the mix or arrangement to match what’s getting the most traction.

While that might sound intimidating to some, the future of AI in music production doesn’t mean replacing human musicians or sound designers.

It means giving us new ways to create professional-grade music faster, without losing creative control (which I’m going to say a few more times because it’s key).

This kind of automation allows us to spend less time on repetitive tasks and more time pushing the boundaries of the music world in ways that were never possible before.

#5. Adaptive AI That Evolves With The Music Created

Another part of the future of AI in music production that’s really changing the game is how adaptive these systems are becoming.

They’re starting to learn and evolve alongside us as we produce music.

Instead of static plug-ins or templates, these AI-driven tools actually study our habits, our musical preferences, and the types of projects we work on, then tweak themselves to fit.

For example, if you regularly add a +3dB boost at 8kHz to vocals during mixing, the AI will eventually recognize that pattern and start doing it for you (or at least suggest it).

That saves you a few steps every session without getting in your way.
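Here’s one simple way that kind of pattern spotting could work, sketched in Python. It assumes a hypothetical log of the mixing moves made in each session and flags any move that shows up in at least 60% of them; real adaptive tools would likely use much richer models, so treat this as an illustration of the idea, not how any specific product does it.

```python
from collections import Counter

# Hypothetical log: the mixing moves observed in each past session,
# recorded as (track type, effect, frequency in Hz, gain in dB).
sessions = [
    {("vocal", "eq_boost", 8000, 3.0), ("bass", "high_pass", 30, 0.0)},
    {("vocal", "eq_boost", 8000, 3.0)},
    {("vocal", "eq_boost", 8000, 3.0), ("snare", "eq_boost", 200, 2.0)},
    {("vocal", "eq_cut", 300, -2.0)},
]


def suggest_habits(sessions, min_share=0.6):
    """Return moves that appear in at least `min_share` of sessions."""
    # Each session is a set, so a move counts at most once per session.
    counts = Counter(move for session in sessions for move in session)
    threshold = min_share * len(sessions)
    return [move for move, n in counts.most_common() if n >= threshold]


for track, effect, freq, gain in suggest_habits(sessions):
    print(f"Suggest: {effect} {gain:+.0f} dB at {freq} Hz on {track}")
# Suggest: eq_boost +3 dB at 8000 Hz on vocal
```

Counting per session (rather than per click) keeps one heavily tweaked project from looking like a habit.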

And keep in mind that the more you work with these AI-powered tools, the smarter they get, so your reverb settings, chord voicings, sample types, and even arrangement tendencies can all be analyzed and applied across future sessions.

With AI models learning our individual styles in this way, music producers gain tools that not only respond to their process but actually grow with them.

It’s a big part of what makes AI in music production feel less like a machine and more like a real-deal collaborator.

#6. Neural Interfaces for Direct Brain-to-DAW Music Creation

In my opinion, one of the wildest developments coming to the music industry is neural interface technology.

It could let you create music directly from thought, without ever touching a single controller.

With neurotech companies like Neuralink and Cognixion developing brain-computer interfaces, the idea is that your brain signals could one day trigger AI systems that understand intent.

This means you could literally imagine a melody or rhythm and watch it appear inside your DAW timeline (hard to believe, but that’s the direction things are heading).

For example, imagine designing an 808 pattern in your mind while AI tools translate that brain activity into a fully quantized MIDI sequence within seconds, then route it to your favorite drum machine VST. That’s a significant transformation, I know, but it’s pretty cool when you think about it.
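Whatever the input device ends up being, the “fully quantized” part is a well-understood step. This Python sketch snaps note onsets (measured in beats) to a 16th-note grid; the `strength` parameter mirrors the partial-quantize option many DAWs offer, and the example hit timings are made up.

```python
def quantize(onsets_beats, grid=0.25, strength=1.0):
    """Move note onsets (in beats) toward the nearest grid line.

    grid=0.25 is a 16th-note grid in 4/4. strength=1.0 snaps fully;
    smaller values only pull notes part of the way, keeping some feel.
    """
    quantized = []
    for t in onsets_beats:
        target = round(t / grid) * grid
        # Blend original and target; strength=1.0 returns the target exactly.
        quantized.append(t * (1 - strength) + target * strength)
    return quantized


# Hypothetical loose 808 hits, e.g. from a live take or a future brain interface.
hits = [0.02, 0.73, 1.49, 2.26, 3.1]
print(quantize(hits))  # [0.0, 0.75, 1.5, 2.25, 3.0]
```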

This isn’t just about convenience, either: the creative possibilities here go way beyond what’s physically possible with a MIDI keyboard or mouse, especially for music producers who want to tap into subconscious ideas or achieve total creative control effortlessly.

Remember, the future of AI in music production isn’t just about speeding things up.

It’s about unlocking parts of your mind that traditional music technology could never reach, making the music creation process more direct and personal than ever before.

Once this type of interface becomes reliable (and affordable), you’ll be able to play around with different musical ideas faster than you can explain them out loud.

It could completely change what it means to produce music in the modern music world, and while that might be scary, you definitely don’t want to get left behind.

#7. AI That Rewrites Music Based on Live Feedback

Imagine finishing a track, playing it live, and having your AI system tweak the mix, swap out drum fills, or stretch out a chorus in real-time based on how the crowd reacts.

Well, that’s exactly where the future of AI in music production is headed.

This new wave of adaptive AI models could read live feedback, like:

- Decibel levels

- Facial expressions

- Even biometric data

They could then automatically adjust the music being played or generated on the spot to match the audience’s energy (mind-blowing, right?).

Let’s say you’re DJing a set and the crowd starts to lose energy…

AI could detect a dip in movement, then raise the tempo by 6 BPM, introduce a riser, and transition into a more energetic breakdown without you touching a thing.
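As a rough illustration of that decision logic, here’s a Python sketch. It assumes a hypothetical crowd-movement score from 0.0 to 1.0 arriving at regular intervals (from cameras or wearables, say), triggers when the recent average drops below a threshold, and works out the time-stretch ratio for a 6 BPM bump; the window size and threshold are arbitrary example values.

```python
def detect_energy_dip(movement, window=4, threshold=0.4):
    """True if the average of the last `window` readings is below threshold.

    `movement` is a hypothetical 0.0-1.0 crowd-movement score per interval.
    """
    if len(movement) < window:
        return False  # not enough data yet to judge
    recent = movement[-window:]
    return sum(recent) / window < threshold


def tempo_boost(current_bpm, boost_bpm=6.0):
    """New tempo and the time-stretch ratio needed to get there."""
    new_bpm = current_bpm + boost_bpm
    return new_bpm, new_bpm / current_bpm


readings = [0.8, 0.7, 0.5, 0.4, 0.3, 0.3]
if detect_energy_dip(readings):
    bpm, ratio = tempo_boost(124)
    print(f"Energy dip: go to {bpm:.0f} BPM (play {ratio:.3f}x faster) and cue a riser")
```

Averaging over a window instead of reacting to a single reading keeps the system from overreacting to one quiet moment.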

It’s like having a co-producer monitoring the room, using AI-generated music as a tool to maintain emotional depth and dynamic flow in every situation.

Whether it’s a live set, a Twitch stream, or a private listening session, it’s on point.

The power behind this isn’t just the AI’s ability to respond.

It’s the AI’s understanding of music data patterns, genre expectations, and how different musical styles and genres affect an audience’s emotional state.

This type of innovation is one of the most exciting indicators of where AI in music is going, giving you tighter control over a performance without handing your spontaneity or personal sound over to fully AI-generated music.

#8. AR + AI Integration for Immersive Music Creation

One area you’ll definitely want to keep your eye on is the integration of AR (augmented reality) with AI music technology.

Especially when it comes to immersive music creation environments.

Instead of working with a flat screen, imagine producing a track by placing virtual synth modules around your room, where you can literally reach out and tweak filters or stretch audio waveforms in mid-air.

All powered by AI-driven tools that predict what you want to do next.

For example, you could look at a vocal layer, and the system could flag problem frequencies in your AR field of view while recommending EQ moves and dynamics adjustments.

It could even suggest alternate takes pulled from AI-generated content based on your previous sessions (super innovative, right?).

The future of AI in music production is moving toward these hybrid environments where your physical space becomes part of the music production process.

It perfectly blends human creativity with next-gen interfaces in a way that feels completely natural, not forced or too robotic.

And beyond just flashy visuals, this kind of setup will actually help boost your workflow.

You’ll get real-time suggestions from AI systems while staying locked into a creative headspace that feels more like performance than editing.

With platforms like Apple Music and others already investing in immersive formats, you’ll start to see AR + AI become essential for producers who want to stay ahead in the music industry, sound design, and visual experience.

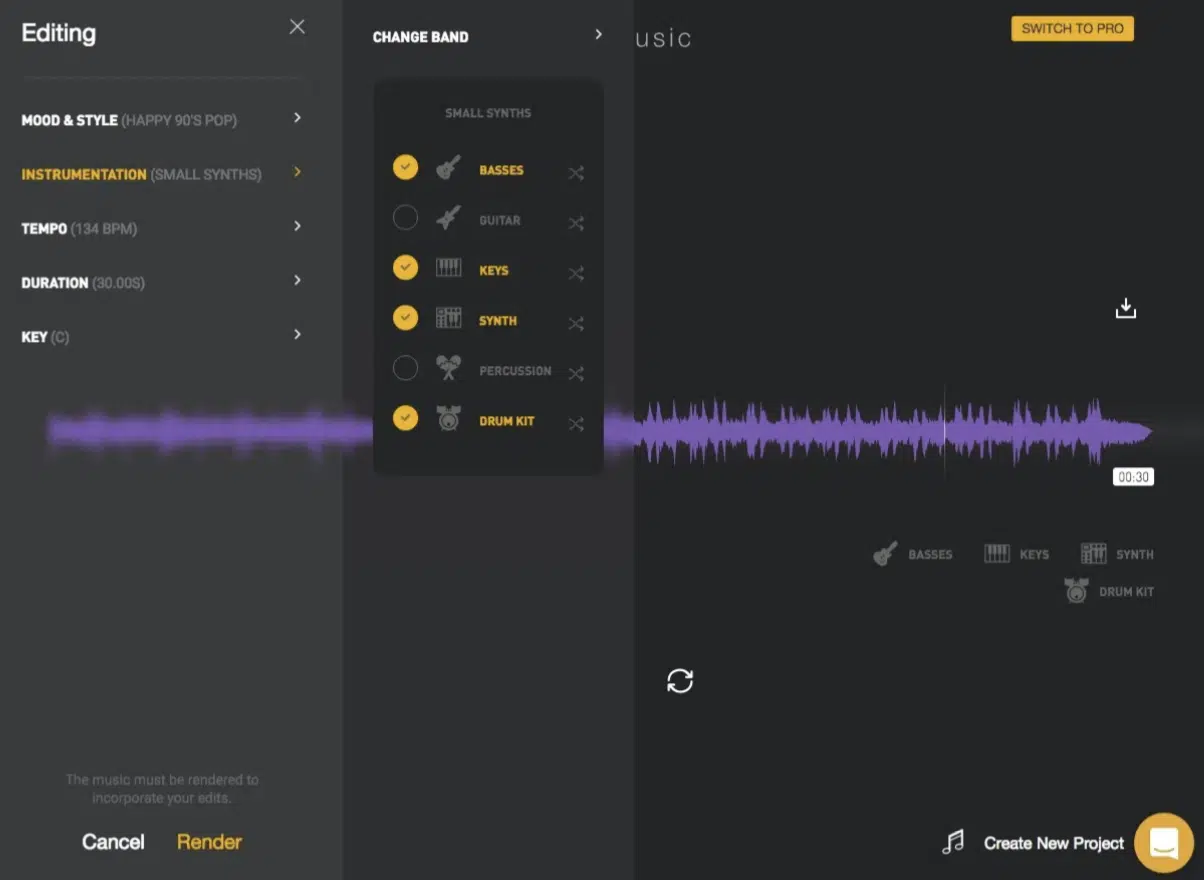

#9. Personalized Listener-Based Song Customization

This next evolution is something that’ll completely change the way your audience hears your music: personalized AI-driven songs that change based on who’s listening.

Think about this: the same track could sound more energetic for a gym listener, more mellow for someone studying, and more cinematic for someone driving.

Generative AI could make this possible by adapting songs to match real-time user behavior.

For example, a listener in the UK playing your track on Apple Music in the morning could get a slightly brighter mix with boosted upper mids.

Meanwhile, a U.S. user listening at night hears more low-end emphasis and a relaxed tempo.

This kind of personalization isn’t limited to EQ and levels…

AI tools could also adjust song structures, instrument choices, or even lyrics in ways that preserve emotional depth while still offering a unique version of the song every time.

The future of AI in music production is pointing toward a time where AI-powered tools tweak the listener experience so well, the concept of a “static” version of a track might eventually fade out.

Especially for background music, playlist content, and high-engagement formats.

If you’re trying to build a deeper connection with your audience or give fans a more interactive listening experience, this type of song customization will be invaluable.

#10. Next-Level AI Vocal Replication

Now let’s get into vocal territory, because the future of AI in music production is seriously redefining what’s possible with AI-generated vocals and vocal cloning.

Instead of working with traditional vocal samples or hiring session singers, you can now train AI models on a vocal dataset (even if it’s just 30-40 minutes of material) and generate new lyrics, melodies, or ad-libs.

Ones that sound like that original voice in different emotional styles and languages.

For example, you could replicate a late-90s R&B tone for one hook and then switch to a distorted punk rock scream for the verse.

All of this happens without re-recording anything and without killing sound quality (because the music industry is not forgiving when it comes to imperfection).

What makes this even more powerful is the ability to control delivery parameters, like:

- Breathiness

- Vibrato depth

- Pitch deviation

- Phrase timing

This means you can fine-tune the emotional expression of your AI-generated vocals with just a few slider tweaks.
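To make a parameter like vibrato depth less abstract, here’s a small Python sketch that generates a pitch curve for a held note. Depth is measured in cents (100 cents = 1 semitone) and rate in cycles per second; these parameter names are illustrative, not the controls of any particular vocal-cloning tool.

```python
import math


def vibrato_contour(base_hz, depth_cents=30.0, rate_hz=5.5, duration_s=1.0, frame_rate=100):
    """Per-frame pitch (Hz) for a held note with sinusoidal vibrato.

    depth_cents is the peak deviation (100 cents = 1 semitone);
    rate_hz is how many vibrato cycles happen per second.
    """
    frames = int(duration_s * frame_rate)
    contour = []
    for i in range(frames):
        t = i / frame_rate
        cents = depth_cents * math.sin(2 * math.pi * rate_hz * t)
        contour.append(base_hz * 2 ** (cents / 1200))  # cents -> frequency ratio
    return contour


pitch = vibrato_contour(440.0, depth_cents=30)
print(f"range: {min(pitch):.1f}-{max(pitch):.1f} Hz")
```

Turning a “vibrato depth” slider up simply widens that swing around the base pitch; turning the rate up makes it wobble faster.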

These tools are super valuable when you’re trying to create professional-grade music on a tight budget or when you need to test out multiple vocal styles before locking in a final direction.

The ability to experiment with different vocal textures while maintaining your unique creative control is just another example of how AI in music production is empowering your sound without replacing your vision.

Side note, since we’re talking about sick vocals, if you want access to the best vocal samples in the game, I got you.

#11. AI-Driven Contracts and Royalty Splits

One part of the music business that’s finally catching up with AI is how contracts and royalty splits are handled, which is a big deal.

AI tools are helping music producers avoid messy paperwork and protect their intellectual property (rightfully so).

Instead of hiring a lawyer every time you collab or sample something, new AI-driven platforms are starting to generate auto-split contracts in seconds by analyzing who contributed what.

Whether it’s vocal takes, drum programming, or AI-generated content, it’s all about making things fair for human musicians in the music industry.

For example, let’s say you use AI-generated music to create the hook, then a vocalist adds a top line and another producer handles the mix…

The AI system can assign weighted percentages based on time spent, project layers used, and final arrangement influence.
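Here’s a minimal Python sketch of how weighted splits like that could be calculated. The three factors mirror the ones above, but the weights (30/30/40), the metric values, and the contributors are all hypothetical; an actual platform would define its own formula.

```python
# Hypothetical factor weights; a real platform would define its own.
WEIGHTS = {"hours": 0.3, "layers": 0.3, "arrangement": 0.4}


def compute_splits(contributions, weights=WEIGHTS):
    """Turn raw contribution metrics into percentage splits.

    Percentages are rounded to 2 decimals, so they sum to 100
    give or take rounding.
    """
    # Normalize each factor so every contributor is scored on a 0-1 share.
    totals = {f: sum(c[f] for c in contributions.values()) for f in weights}
    scores = {
        name: sum(weights[f] * c[f] / totals[f] for f in weights if totals[f] > 0)
        for name, c in contributions.items()
    }
    total_score = sum(scores.values())
    return {name: round(100 * s / total_score, 2) for name, s in scores.items()}


contributions = {
    "producer (AI-assisted hook)": {"hours": 6, "layers": 12, "arrangement": 0.5},
    "vocalist (top line)": {"hours": 4, "layers": 6, "arrangement": 0.3},
    "mix engineer": {"hours": 5, "layers": 2, "arrangement": 0.2},
}

for name, pct in compute_splits(contributions).items():
    print(f"{name}: {pct}%")
```

With these made-up numbers the producer lands at 50%, the vocalist at 29%, and the mix engineer at 21%.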

On top of that, some AI systems integrate directly with music distribution platforms and blockchain-backed publishing tools.

That integration can automatically register those splits and make sure every contributor gets paid in real time when the track or composition is streamed.

The future of AI in music production isn’t just about what happens inside your DAW…

It’s also about reducing legal concerns and simplifying how you handle your rights in an industry that’s getting more complex by the day.

And if you’re producing high-volume AI-generated content or working with collaborators all over the world, this kind of automated royalty tracking will save you hours and eliminate a ton of guesswork in the backend of your career.

Tips For Using AI In Music Production (Your Unique Creative Process)

Now that you’re seeing how deep this rabbit hole goes, let’s switch gears and talk about how you can start using AI in music production right now without losing your edge or overcomplicating your sessions. The tips below are all about helping you make the most of AI-powered tools in the music industry while still staying true to your sound, your process, and the creative vision that makes your music stand out.


Use AI Music Technology To Discover New Musical Ideas

One of the best ways to use AI in music production is as a creative jumping-off point, especially when you’re stuck or looking to push your ideas into new territory.

For example, you can load a melody into a generative AI plugin like Amper Music, and instantly get 10 alternative versions in different musical styles and genres.

From orchestral film-score textures to chill lofi bounce, it can give you variations you might never have come up with on your own.

These tools can even bring some extra emotional depth to the table by:

- Shifting key signatures

- Reharmonizing progressions

- Suggesting rhythmic flips

It’ll help you create music that feels dynamic and unique without losing your original vibe.

This kind of musical collaboration between human creativity and AI-generated content is what makes the future of AI in music production so powerful.

It doesn’t replace your input, it multiplies it.

And when you combine this approach with AI-powered software that predicts where your ideas are going, you’re not just speeding up the music creation process.

You’re unlocking creative potential that used to take human musicians hours of trial and error to reach.


Train AI (Artificial Intelligence) on Your Own Tracks

If you’re serious about building your sound in the music business, one of the smartest moves you can make right now is training AI tools using your own:

- Finished tracks

- Stems

- Sessions

By feeding AI systems data from your personal catalog, you allow them to learn your exact sound design tendencies, whether that’s your vocal EQ curve, your fav 808 layering technique, or how you like to automate your risers in genres like hip-hop or electronic.

The future of AI in music production is moving toward personalized AI models that function like virtual clones of your workflow.

Meaning, you can offload tedious mixing setups or session routing to an assistant that knows your music better than some collaborators do.

These AI systems can also generate new music ideas that sound like you, like background music for sync, stems for beat packs, or AI-generated music loops that match your sonic identity and signature style.

Plus, once you start refining AI-generated content using your own presets and templates, you gain complete creative control over the final product.

This ensures you keep things professional, consistent, and 100% aligned with your vision.


Blend AI-Generated Music with Traditional Music Techniques

To really get the most out of the future of AI in music production, you’ll want to blend your AI tools with traditional music skills.

That’s where things start sounding truly next level.

For example, you can use generative AI to lay down a chord progression, then apply classic voice-leading rules from traditional music theory to tighten it up.

Or, play those voicings on analog synth patches to add warmth and human expression. Dealer’s choice.

You could also start with an AI-generated vocal melody and then track a human vocalist on top, which is pretty intriguing.

You get the mechanical precision of AI plus the raw texture of a human performance, for emotional depth that hits on multiple levels.

This fusion of AI-generated and traditionally made music works great when you’re working across different creative industries like:

- Film scoring

- Music education

- Even interactive sound design for games

Any process where flexibility, realism, and style accuracy all matter.

And at the end of the day, no matter how much AI technology evolves, producers, beatmakers, and human artists like you will always have the final say.

This is why embracing AI as part of your workflow, not a replacement for it, is the smartest move you can make right now.


Let AI Handle Repetitive Tasks

When you’re deep in a session, nothing kills the vibe faster than repetitive technical work, am I right?

Well, that’s exactly where AI-driven tools come in to save your time and headspace.

Instead of manually labeling stems, organizing tracks, cleaning up silences, or slicing up loops, you can let artificial intelligence handle those tasks in seconds.

You can do this with tools like iZotope RX 11 or smart DAW assistants that learn your routine and make the process easier than you ever imagined.

For example, if you typically remove low-end rumble with a high-pass filter at 40Hz on all your FX channels, an AI system can identify that pattern and apply it across every future project without asking.

This will help keep your mix clean while boosting overall sound quality.
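For the curious, here’s roughly what that habit looks like in code: a Python sketch of a basic first-order (6 dB per octave) high-pass filter at 40 Hz applied to every channel whose name starts with “FX”. The channel naming convention and helper names are hypothetical, and real EQ plugins typically offer much steeper slopes than this simple filter.

```python
import math


def high_pass(samples, cutoff_hz=40.0, sample_rate=48000):
    """First-order (6 dB/octave) RC-style high-pass filter.

    Gentler than most EQ plugin filters, but enough to show the idea:
    content well below the cutoff (rumble, DC offset) gets attenuated.
    """
    if not samples:
        return []
    rc = 1.0 / (2 * math.pi * cutoff_hz)
    dt = 1.0 / sample_rate
    alpha = rc / (rc + dt)
    out = [samples[0]]
    for i in range(1, len(samples)):
        out.append(alpha * (out[-1] + samples[i] - samples[i - 1]))
    return out


def apply_to_fx_channels(channels, prefix="FX", cutoff_hz=40.0):
    """Filter every channel whose name starts with `prefix`; leave the rest."""
    return {
        name: high_pass(audio, cutoff_hz) if name.startswith(prefix) else audio
        for name, audio in channels.items()
    }


# One second of a constant (DC) signal: the worst-case "rumble".
channels = {"FX Reverb": [0.5] * 48000, "Lead Vocal": [0.5] * 48000}
processed = apply_to_fx_channels(channels)
print(f"FX Reverb last sample: {processed['FX Reverb'][-1]:.6f}")
print(f"Lead Vocal last sample: {processed['Lead Vocal'][-1]:.6f}")
```

The FX channel’s DC offset decays toward zero, while the vocal channel passes through untouched because its name doesn’t match the prefix.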

This kind of automation is part of what makes the future of AI in music production so game-changing because it’s about taking the tech load off your shoulders so you can focus more on:

- Musical composition

- Emotional depth

- Human expression

And once you integrate AI-powered software into your workflow, everything from comping vocals to exporting trackouts gets way faster.

It’ll free up your energy for what really matters: enhancing human creativity and building high-quality music that feels real.

And that, my friends, is the future of AI in music production in a nutshell.

Final Thoughts

And there you have it: a full look into the future of AI in music production and how it’s already starting to change the game.

Whether you’re using AI tools to speed up your workflow, play around with new musical ideas, or explore completely new creative possibilities, it’s all right here.

You’re literally stepping into a whole new era of music creation.

The future of AI in music production isn’t something to fear; it’s something to embrace, refine, and shape into your own unique sound.

And now, you’ve got access to technology that can enhance human creativity, simplify the technical grind, and give you more control over how you produce music.

As long as you keep learning, experimenting, and evolving, the future of AI in music production will only make your process sharper, faster, and more limitless.

Now’s your moment, so tap in, level up, and stay ahead of the curve.

Until next time…
