There’s been a lot of talk lately about where the future of music production is going (and for good reason).

And honestly, as music producers, staying on top of it all isn’t just helpful; it’s necessary if you want to keep up and leave your competition in the dust.

That’s why I wanted to break it all down in a super real way: what’s already changing, which new technologies are emerging, and what’s around the corner.

Plus, I’ll show you how you can actually use all of this to your advantage, like:

- AI composition tools that build progressions for you ✓

- Drum sequencing that sounds human and hits hard ✓

- Smart 808s and basslines that follow your chords ✓

- Effects that change based on what’s playing ✓

- Mixing plugins that analyze your mix and make smart calls for you ✓

- Mastering tools that get things release-ready in minutes ✓

- Vocal cloning, auto-tune, and lyric-based generation ✓

- Tools built for Dolby Atmos and spatial audio ✓

- Latest machine learning algorithms/technological advancements ✓

- Metadata optimization for streaming services ✓

- Where the music industry is heading ✓

- How to enhance your creative process ✓

- New music composition technology ✓

- Much more about the future of music production ✓

If you know where this is headed, you can move faster, finish more tracks, and use new technologies to level up your sound.

I mean, think of all the new possibilities and opportunities each new track opens up, kind of like students learning to read and write a new language.

You’ll also have an easier time staying competitive, discovering new sounds, and unlocking much more creative freedom.

And most importantly, you won’t get stuck in outdated workflows or left behind while the rest of the music world speeds forward.

Table of Contents

- What is the Future of Music Production?

- AI Composition: Breaking it Down

- Drum & Bass Creation

- AI Sound Design: Human Creativity Meets Machine Learning

- Mixing & Mastering With Artificial Intelligence

- AI Vocal Technology

- The Future Format of Music Production: AI Meets Spatial Audio, Immersive Tech & Streaming

- Bonus: 3 Pro-Level Techniques For Music Producers to Stay Ahead in the AI Era/New Music Industry

- Final Thoughts

What is the Future of Music Production?

So what exactly is the future of music production? Well, it’s not just about new software or updated plugins.

It’s about how the way we build, finish, and release music is evolving with AI-generated content and new technologies.

We’re talking AI-generated music, real-time arrangement tools, adaptive mixing, mastering workflows that meet streaming service standards, etc.

And believe me, this is just the beginning. Some of the most exciting developments are still to come, so make sure to stay tuned the whole way through.

AI Composition: Breaking it Down

Before we talk about drums, mixing, or vocals, we’ve gotta start with ideas, because the future of music production is already making it way easier to get melodies, chords, and full song layouts down without feeling stuck. So, let’s get into it real quick.

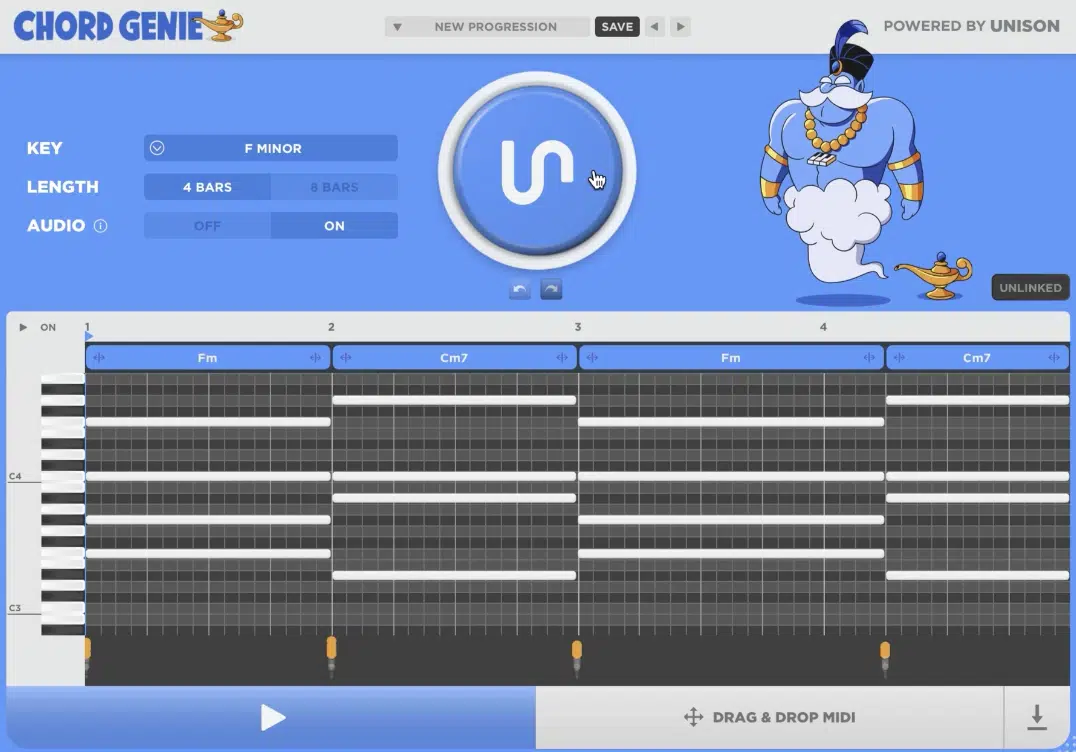

Chord & Melody Generation with Plugins Like Chord Genie

Coming up with great chords and melodies has always been one of those things that either completely works out or completely trips you up.

Sometimes you’ve got something fire in your head, and other times you’re just dragging random notes into the piano roll and hoping for the best.

But luckily, with artificial intelligence and plugins like Chord Genie, that part of the production process doesn’t have to slow you down anymore.

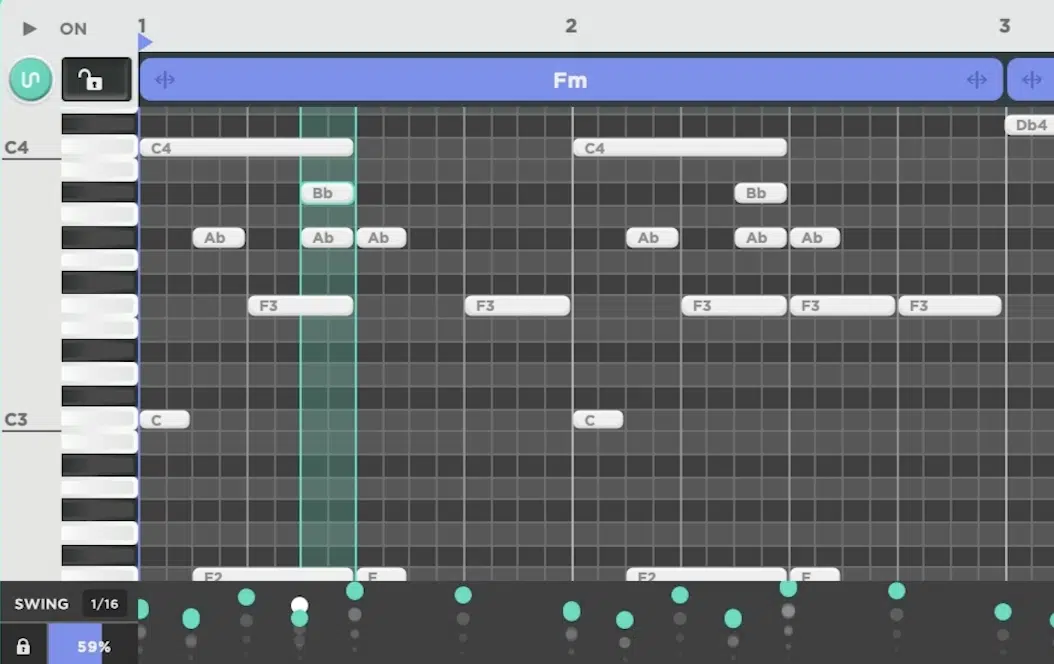

For example, say you’re working in D minor…

You just load up Chord Genie, choose your emotion (let’s say “dark and moody”), hit generate, and you’ve got a 4-bar progression instantly.

One that actually sounds like something you’d hear on a Travis Scott or The Weeknd record, not just another boring, basic loop.

It gives you full control too, from chord inversions and voicings to timing, swing, and note lengths, so you’re not just stuck with a cookie-cutter idea.

You’re building off something usable and already proven to work.

It’s especially sick for bedroom producers or artists who aren’t super deep into music theory because you can still build super emotional progressions and create music that feels professional, fast.
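If you’re curious what’s going on under the hood of a “D minor, dark and moody” progression, here’s a small Python sketch of the theory: it spells the D natural minor scale and stacks diatonic triads to get an i–VI–III–VII progression (Dm, Bb, F, C). It’s a plain music-theory illustration, not how Chord Genie actually generates chords, and every function name here is hypothetical.

```python
# Pitch classes spelled with flats so D natural minor reads cleanly (Bb, not A#)
NOTE_NAMES = ["C", "Db", "D", "Eb", "E", "F", "Gb", "G", "Ab", "A", "Bb", "B"]

# Natural minor (Aeolian) steps in semitones above the tonic
NATURAL_MINOR_STEPS = [0, 2, 3, 5, 7, 8, 10]


def minor_scale(tonic: str) -> list[int]:
    """Return the 7 pitch classes (0-11) of the natural minor scale on `tonic`."""
    root = NOTE_NAMES.index(tonic)
    return [(root + step) % 12 for step in NATURAL_MINOR_STEPS]


def diatonic_triad(scale: list[int], degree: int) -> list[int]:
    """Stack scale thirds on a 1-based degree: root, third, fifth."""
    i = degree - 1
    return [scale[i % 7], scale[(i + 2) % 7], scale[(i + 4) % 7]]


def chord_name(triad: list[int]) -> str:
    """Name a triad from its interval sizes (major, minor, or diminished)."""
    root, third, fifth = triad
    third_size = (third - root) % 12
    fifth_size = (fifth - root) % 12
    if third_size == 3 and fifth_size == 6:
        quality = "dim"
    elif third_size == 3:
        quality = "m"
    else:
        quality = ""
    return NOTE_NAMES[root] + quality


d_minor = minor_scale("D")
progression = [diatonic_triad(d_minor, degree) for degree in (1, 6, 3, 7)]
print([chord_name(chord) for chord in progression])  # ['Dm', 'Bb', 'F', 'C']
```

Swap the degree tuple to try other classic minor-key moves; voicings, inversions, and rhythm are where a plugin (or your own ear) takes over.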

Structure & Arrangement Automation

Getting a loop going is one thing, but turning that loop into a real song with an intro, verse, chorus, drop, and everything in between is where most producers hit a wall.

The good news is, structure doesn’t have to be a guessing game anymore.

With arrangement tools powered by machine learning technology, you can generate song layouts that actually follow patterns from real-world hits.

Take MIDI Wizard 2.0, for example…

You choose your genre (say melodic house), your key, and even the emotional curve you want — and it’ll lay out a track in different sections.

Maybe an 8-bar intro, a 16-bar verse, an 8-bar chorus, and a 4-bar bridge (dealer’s choice), all ready to be filled in.

What makes it powerful is that it’s not random; it’s based on data from hundreds of successful songs across all the most popular genres.

From hip hop and trap to more experimental styles, it’s all right here.

It identifies patterns and applies those in a way that saves time and keeps your workflow smooth and fluid.

NOTE: If you want to switch things up, you can adjust each section’s length, reorder the flow, or even re-harmonize a part on the fly, all without breaking your momentum.
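Once a tool hands you a layout like 8/16/8/4 bars, it helps to know how long each section actually runs. Here’s a quick Python sketch that turns bar counts into start and end times; the helper names are hypothetical, and it assumes 4/4 time and a melodic-house tempo of 124 BPM.

```python
def bars_to_seconds(bars: int, bpm: float, beats_per_bar: int = 4) -> float:
    """Length of `bars` bars at `bpm` (4/4 by default)."""
    return bars * beats_per_bar * 60.0 / bpm


def layout_timeline(sections, bpm, beats_per_bar=4):
    """Return (name, start_sec, end_sec) for each (name, bars) section, in order."""
    timeline = []
    start = 0.0
    for name, bars in sections:
        end = start + bars_to_seconds(bars, bpm, beats_per_bar)
        timeline.append((name, start, end))
        start = end
    return timeline


# The example layout from the text, at an assumed 124 BPM
layout = [("intro", 8), ("verse", 16), ("chorus", 8), ("bridge", 4)]
for name, start, end in layout_timeline(layout, bpm=124):
    print(f"{name:<7} {start:6.2f}s -> {end:6.2f}s")
```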

Prompt-Based Track Creation

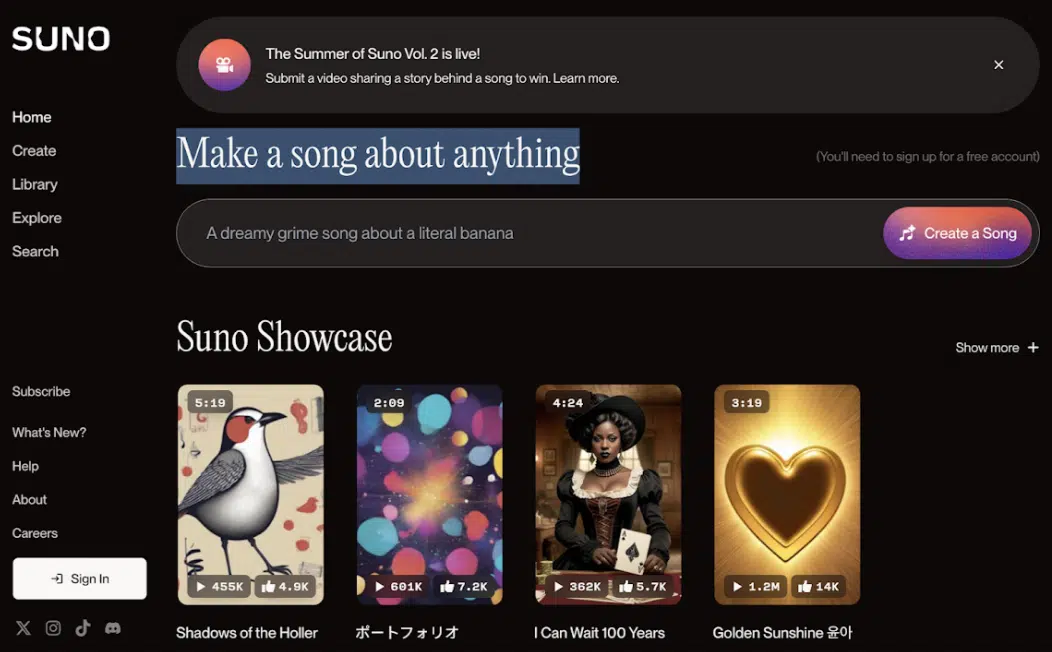

When inspiration runs dry and beat block is hitting you full force, one of the fastest ways to break through that wall is with prompt-based AI generators like Suno and Udio.

And yes, this is already a huge part of the future of music production.

You can literally type something like, “spacey 85 BPM chillhop beat with vinyl textures, soft guitar chords, ambient vocal chops, no snare.”

And then, within two minutes, you’ll get a 60–90 second fully-formed idea (with stems).

Once you have those stems, it’s about flipping them: maybe you keep the chord loop, time-stretch it by 50%, pitch it down 4 semitones, and reverse the tail.

Or you take the bassline and run it through Zen Master to give it more grit.
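The numbers behind a flip like that are simple: each equal-tempered semitone multiplies frequency by 2^(1/12), so dropping 4 semitones gives a ratio of about 0.794. This Python sketch assumes “time-stretch by 50%” means making the loop 50% longer, and that your stretch/pitch algorithm moves length and pitch independently; the helper names are made up for illustration.

```python
def semitones_to_ratio(semitones: float) -> float:
    """Frequency ratio for a shift of `semitones` equal-tempered semitones."""
    return 2.0 ** (semitones / 12.0)


def flip_loop(duration_s: float, stretch_pct: float, semitones: float) -> dict:
    """Describe a stretch + pitch flip of a loop.

    Assumes length and pitch are independent (modern stretch/pitch algorithms):
    `stretch_pct` lengthens the loop, `semitones` only moves the pitch.
    """
    ratio = semitones_to_ratio(semitones)
    return {
        "new_duration_s": duration_s * (1 + stretch_pct / 100.0),
        "pitch_ratio": ratio,
        # For comparison: a tape-style varispeed drop of the same amount would
        # also slow the audio down, lengthening it by 1 / ratio instead.
        "varispeed_duration_s": duration_s / ratio,
    }


# A hypothetical 8-second chord loop, stretched 50% and pitched down 4 semitones
print(flip_loop(8.0, stretch_pct=50, semitones=-4))
```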

NOTE: Udio even lets you enter advanced tags like “dark – ambient – 808-driven – no vocals – halftime feel,” and its models generate original tracks based on patterns learned from real training data, not just randomized audio.

It’s perfect for working on collaborative projects or scoring background music, since you can generate vibe-based demos without having to overthink production techniques.

And when you start manipulating the AI material with your own tools, that’s where human creativity kicks in and puts the cherry on top perfectly.

This is exactly how you stay original in the future of music production.

Drum & Bass Creation

Drums and bass have always been the heartbeat of any track, and now they’re one of the areas where AI is stepping up in a major way.

The future of music production is giving us tools that don’t just spit out loops; they actually understand groove, energy, velocity, and rhythm on a deeper level.

AI now lets you generate drum patterns that:

- Swing like a real drummer

- Adjust dynamics in real time

- Detect when your kick and bass are fighting so it can fix masking on the fly

For low-end, AI tools are now knocking out basslines and 808 patterns that react to your chord progressions, match your key, and stay tight with your drums.

No more trial-and-error guessing.

This means you’re not just speeding up your production process, you’re building tighter, cleaner, and more emotionally accurate rhythm sections.

And when you combine that with real-time layering and smart processing, it’s easy to see why drum and bass creation is evolving so fast and becoming a cornerstone of the future of music production.

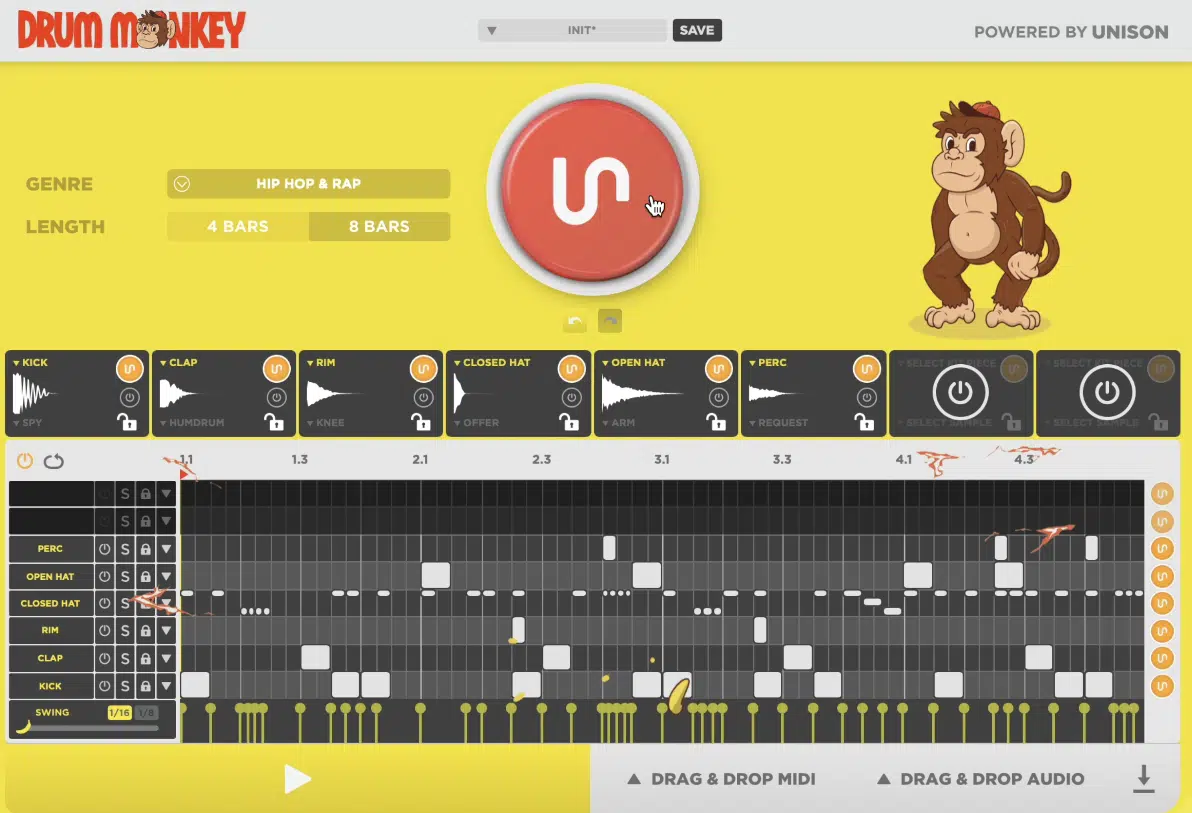

Drum Monkey: Human-Like Rhythms, Built in Seconds

One of the most legendary AI drum generators in the game right now is Drum Monkey, which will absolutely blow your mind, guaranteed.

I mean, think about it, laying down drums that actually groove used to mean a lot of clicking, layering, and trial and error, right?

Well, Drum Monkey flips that completely because it generates full drum patterns in seconds, based on the genre, BPM, and energy level you choose.

Let’s say you’re working on a 150 BPM trap beat: you load up Drum Monkey, pick “Hard Trap” as your style, and hit generate.

You’ll get a 16-bar pattern with perfectly timed hi-hats, swung snares, and fills that don’t sound robotic or mechanical at all.

From there, you can tweak velocity randomness, control ghost note frequency, and even dial in shuffle and accent strength so it grooves like a real drummer laid it down.

And because its AI model is trained on thousands of commercial tracks, the results feel tight, musical, and ready to go without having to tweak every little hit.
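To show what controls like velocity randomness, ghost notes, accent strength, and shuffle actually do to a pattern, here’s a rule-based Python sketch of one bar of 16th-note hi-hats. It’s not AI and not Drum Monkey’s engine; the function name and default values (base velocity 80, ghost velocity 35, and so on) are arbitrary assumptions you’d tweak by ear.

```python
import random


def hihat_pattern(steps=16, bpm=150.0, swing=0.0, accent=30, ghost_prob=0.25,
                  vel_jitter=8, seed=None):
    """Return (time_sec, velocity) hits for one bar of 16th-note hi-hats.

    swing:      0.0-0.5, fraction of a 16th that off-beat 16ths are delayed
    accent:     extra velocity on each quarter-note downbeat
    ghost_prob: chance that an off-beat 16th becomes a quiet ghost note
    vel_jitter: +/- random velocity variation ("humanize")
    """
    rng = random.Random(seed)
    sixteenth = 60.0 / bpm / 4  # length of one 16th note in seconds
    hits = []
    for step in range(steps):
        t = step * sixteenth
        if step % 2 == 1:  # off-beat 16ths get the swing delay
            t += swing * sixteenth
        velocity = 80
        if step % 4 == 0:  # accent the quarter notes
            velocity += accent
        elif step % 2 == 1 and rng.random() < ghost_prob:
            velocity = 35  # ghost note
        velocity += rng.randint(-vel_jitter, vel_jitter)
        hits.append((round(t, 4), max(1, min(127, velocity))))
    return hits


for time_s, velocity in hihat_pattern(bpm=150, swing=0.15, seed=7):
    print(f"{time_s:.4f}s  vel {velocity}")
```

Passing a `seed` makes a “happy accident” repeatable, which matters once you start layering and resampling the result.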

Bass Dragon & 808 Machine: Intelligent Low-End Creation

When your drums are locked in, the next move is getting your low-end to sit right, and that’s where tools like Bass Dragon and 808 Machine really shine.

Both of these plugins are built to analyze your existing melody or chord progression and generate a bassline or 808 pattern that complements it.

That includes not just root-note following, but groove, note length, rhythmic pocket, and even octave placement, which most basic generators completely overlook.

Both tonally and rhythmically, it’s on point 100%.

For example, if you’re working in D minor with a dark melodic trap vibe, Bass Dragon will recognize the harmonic mood and might generate a syncopated 808 run that hits off the downbeat, adding space and tension without stepping on your kick.

All you have to do is load in your chord MIDI, choose your style (let’s say “West Coast bounce” or “UK Drill”), and set the rhythm density (like 1/4, 1/8, or a triplet feel).

Then, boom, it’ll spit out a locked-in pattern that fits perfectly, and you can even choose between mono and stereo-friendly versions.

Which one you pick depends on whether your bass will sit dead-center or spread subtly across the stereo field with chorus or width plugins downstream.

With 808 Machine, the customization goes even deeper.

You get full control over:

- Glide time (anywhere from 5ms to 300ms)

- Saturation curve (soft clip, tube, tape)

- Sub-frequency tuning (e.g. locking your sub to 40Hz or 55Hz based on genre)

- Transient snap, to make sure your 808s punch through the mix

You can also shape attack/release curves, automate pitch envelopes, or enable auto-key detection so the plugin locks every 808 hit to your scale.

This means you’ll never have those weird off-key hits that mess with your chord progression or throw off your groove, which is awesome.
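Sub tuning and auto-key detection both come down to the same math: MIDI note n sits at 440 × 2^((n - 69)/12) Hz. Plugging in 55 Hz lands exactly on A1, while 40 Hz falls between two keys, so “locking to 40Hz” really means picking the nearest note or detuning. Here’s a Python sketch of that conversion plus a simple snap-to-scale for D minor; it illustrates the idea only and isn’t how 808 Machine implements it (the function names are hypothetical).

```python
import math

A4_HZ = 440.0
A4_MIDI = 69


def midi_to_hz(note: int) -> float:
    """Equal-tempered frequency of a MIDI note (A4 = 440 Hz)."""
    return A4_HZ * 2.0 ** ((note - A4_MIDI) / 12.0)


def hz_to_midi(freq: float) -> float:
    """Fractional MIDI note number for a frequency; round it to get the nearest key."""
    return A4_MIDI + 12.0 * math.log2(freq / A4_HZ)


def snap_to_scale(note: int, scale_pcs: set[int]) -> int:
    """Move a MIDI note to the nearest pitch class in `scale_pcs` (ties go down)."""
    for offset in range(12):
        for candidate in (note - offset, note + offset):
            if candidate % 12 in scale_pcs:
                return candidate
    return note


D_MINOR = {2, 4, 5, 7, 9, 10, 0}  # D E F G A Bb C

print(round(hz_to_midi(55.0)))      # 33, i.e. A1
print(round(hz_to_midi(40.0), 2))   # a fractional value: between two keys
print(snap_to_scale(30, D_MINOR))   # off-key F#1 (30) snaps down to F1 (29)
```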

It’s all about speeding up the production process while still giving you room to experiment and play around however you want.

You can resample, stretch, reverse, and layer these AI-generated patterns to taste, which is where the future of music production is excelling right now (that perfect balance).

AI Sound Design: Human Creativity Meets Machine Learning

Once you’ve got your drums and low-end in place, the next step is tweaking the textures and effects that give your track its actual vibe. And that’s where the future of music production really shows off, because AI is now handling FX chains, instrument layering, and tonal shaping in ways that used to take hours.

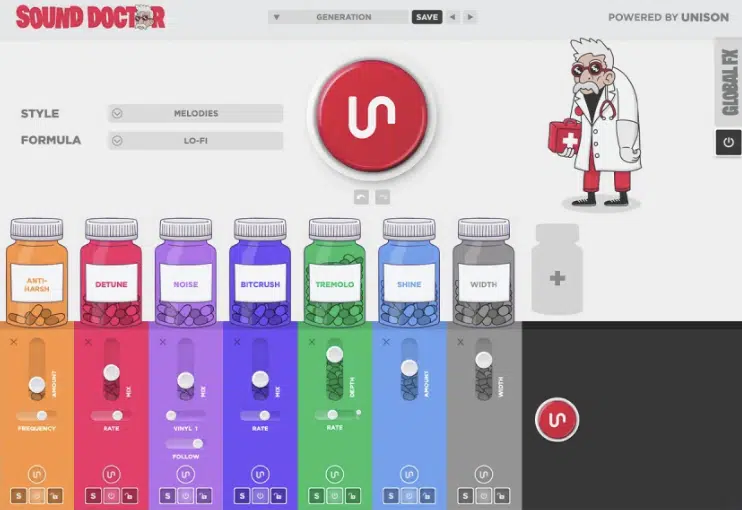

Smart FX Chains That React to Audio in Real Time

Traditional effect chains are static: you load up a reverb, tweak it manually, maybe automate some parameters, but it’s still you doing all the work.

Now with tools like Unison Sound Doctor, you’re working with AI-powered tools that actually respond to your audio input in real time, based on what’s happening in the track.

That means your FX chain isn’t locked; it breathes with your beat.

For example, if your vocal starts getting harsher in the upper mids during a chorus, it might automatically adjust a dynamic EQ to tame that 3.2kHz range by -2.5dB.

Then it eases off again when the verse hits.

It does this by analyzing your stems, genre, frequency balance, and even the evolution of sound waves over time, detecting things like:

- Tonal shifts

- Amplitude spikes

- Build-up across buses

Then, it adapts the chain live, bar by bar, like having a dedicated mix assistant adjusting EQ, delay send levels, and transient shaping for you as the vibe changes.

You can even set target moods like “aggressive,” “spacey,” or “emotional depth,” and Sound Doctor will reshape your entire chain.

For example, it might soften transients with upward compression for a dreamier feel, or widen reverbs while taming highs above 10kHz.

Or it might push saturation in the 1kHz–3kHz range to add bite and intensity to your snare group (whatever works best for your track).

This kind of audio-reactive processing is exactly where the future of music production is headed, so I definitely recommend checking it out.

It’s not just about adding effects; it’s about giving producers the tools that listen, adapt, and evolve with the track, which is invaluable.
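Sound Doctor’s internals aren’t public, so here’s a generic Python sketch of the dynamic EQ idea behind the 3.2kHz example instead: the band is left alone until its level crosses a threshold, then the overshoot is reduced by a ratio, with the cut capped at 2.5 dB. The threshold and ratio values are assumptions, the function name is hypothetical, and measuring the band level itself (a band-pass filter plus a level detector) is left out to keep it short.

```python
def dynamic_eq_gain_db(band_level_db: float, threshold_db: float = -24.0,
                       ratio: float = 3.0, max_cut_db: float = 2.5) -> float:
    """Gain in dB (always <= 0) for one dynamic EQ band.

    Works like a compressor acting on that band only: below the threshold the
    band is untouched; above it, the overshoot is reduced by `ratio`, and the
    total cut never exceeds `max_cut_db`.
    """
    overshoot = band_level_db - threshold_db
    if overshoot <= 0:
        return 0.0
    cut = overshoot * (1.0 - 1.0 / ratio)
    return -min(cut, max_cut_db)


# Hypothetical 3.2 kHz band levels: calm verse, harsh chorus, calm verse again
for section, level in [("verse", -30.0), ("chorus", -18.0), ("verse", -29.0)]:
    print(section, dynamic_eq_gain_db(level))
```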

Layer Matching & AI-Based Instrument Selection for AI-Generated Music

Getting layers to sit well together is one of the hardest parts of production, especially when you’re blending multiple melodic elements or building big soundscapes.

AI tools are now helping match layers in terms of frequency spread, tonal character, and stereo width, and in the process, they’re improving workflow and sound quality.

Let’s say you’ve got a synth lead that lives mostly around 1kHz to 2.5kHz…

An AI layer-matching plugin might recommend a pad that fills out the 200Hz–800Hz range, and a top texture like a reversed pluck that rides the 6kHz+ area for air.

Some plugins even give you spectral visualizations and let you filter out options by genre or emotional tone.

So if you’re working on a chillwave track, it might suggest soft analog textures, while a drill beat would trigger darker bells or glides.

This is the kind of stuff that used to take producers years of ear training or endless trial and error, and now it’s just part of the workflow, built directly into the tools.

That’s the future of music production in action, and it’s only getting smarter.

Mixing & Mastering With Artificial Intelligence

Now that your ideas, rhythm, and textures are locked in, it’s time to bring everything together and polish the full track. And honestly, the future of music production wouldn’t be what it is without AI mixing and mastering tools that are pushing audio quality, streaming quality, and workflow speed to new levels, so let’s dive in.

AI Mixing Plugins That Adapt EQ, Compression & Panning in Real-Time

Mixing used to mean hours of fine-tuning EQ curves, trying different compressor settings, and nudging things left or right until they sat right.

You’d sweep frequencies by ear, layer sidechains manually, and hope the energy didn’t fall apart as soon as the hook hit.

But now, with AI tools like iZotope Neutron 5 and Sonible smart:EQ 4, that process is starting to shift (super fast).

These AI mixing plugins are built on machine learning technology: they listen to your mix, identify tonal buildup, dynamic imbalances, masking, and stereo conflicts, and then make changes in real time based on context.

For example, smart:EQ can scan your vocal and immediately cut 400Hz by 2.7dB to clean out boxiness while boosting 8kHz slightly for air.

No manual sweeping, no second-guessing.

Neutron goes even deeper with its Mix Assistant, which doesn’t just look at the track solo’d — it compares your vocal to your full instrumental mix.

It’ll automatically apply subtle midrange dips in clashing areas, dial in multi-band compression, and even adjust stereo width around your lead to carve space properly.

It also handles sidechain routing on its own, which is cool.

So, let’s say you’ve got a kick peaking around -6dB and an 808 hitting around -8dB…

Neutron can detect frequency masking between 50Hz and 120Hz, and apply a dynamic EQ cut on the bass only when the kick hits.

That’s sidechain ducking with tonal awareness and no need for extra routing or third-party plugins at all.

You can even set genre targets like “Mainstream Hip-Hop,” “Ambient Pop,” or “Hard Trap.”

Then, the system pulls from spectral reference profiles to get your tonal balance close to major-label mixes.

For example, if you want something in the ballpark of Travis Scott’s “SICKO MODE” or Doja Cat’s “Vegas,” you just load in the track, and the assistant starts shaping your EQ, compression ratios, and stereo placement based on that style.

That level of automation doesn’t remove your control; it just clears the friction between what you hear and what you want to adjust.

And that’s exactly what the future of music production looks like in real time: less resistance, more precision, and a smoother path to pro-level sound quality.

So, for aspiring musicians, producers, and sound designers trying to push their mixes to the next level without second-guessing every move, this is the way to go.

Mastering Tools That Hit Streaming Standards Without Guesswork

Mastering has always been that final step most producers either fear or rush, where one bad move can blow out your dynamics or flatten your stereo field altogether.

But with modern AI-powered mastering plugins, that whole process is starting to feel less mysterious and way more controlled.

And I’m not just talking about presets. These tools actually analyze your track’s tonal balance, genre, loudness, and energy curve, and adapt the entire chain around that.

Let’s say you’re uploading a track to Spotify, which normalizes playback to around -14 LUFS integrated and recommends keeping true peaks at or below -1.0 dBTP.

Ozone 11’s Master Assistant can scan your track, detect that you’re working on something in the “Modern Hip-Hop” lane, and suggest a tonal balance curve with slightly scooped mids, a multi-band compressor with a 2:1 ratio on the lows, and a soft-knee limiter that brings you to spec without crushing your transients.

And if you’re aiming for Apple Music or Amazon Music HD, which prioritize sound quality and dynamic range over sheer volume, the AI will adjust its mastering chain to give you more breathing room, typically keeping your track closer to -16 LUFS and using less limiting so it sounds cleaner and more open on high-resolution platforms.
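Loudness normalization itself is just subtraction: a player measures your integrated loudness and applies the difference to its target as gain. This Python sketch uses the -14 and -16 LUFS figures from above with a hypothetical -8 LUFS master; the helper names are made up, and it ignores any limiting or gain caps a player might apply when turning quiet tracks up, so treat it as a rough estimate.

```python
def normalization_gain_db(integrated_lufs: float, target_lufs: float = -14.0) -> float:
    """Gain a normalizing player applies to bring a track to its target loudness."""
    return target_lufs - integrated_lufs


def playback_peak_dbtp(true_peak_dbtp: float, gain_db: float) -> float:
    """True peak after normalization gain (ignores any player-side limiting)."""
    return true_peak_dbtp + gain_db


# A loud hypothetical master: -8 LUFS integrated, true peak at -0.3 dBTP
master_lufs, master_peak = -8.0, -0.3
for platform, target in [("-14 LUFS target", -14.0), ("-16 LUFS target", -16.0)]:
    gain = normalization_gain_db(master_lufs, target)
    peak = playback_peak_dbtp(master_peak, gain)
    print(f"{platform}: gain {gain:+.1f} dB, peak {peak:+.1f} dBTP")
```

The takeaway: a crushed -8 LUFS master just gets turned down 6 dB, so all that extra limiting buys you no extra loudness on a normalizing platform.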

You can also A/B against any track using tools like REFERENCE.

This will show you if your high-mids are peaking too hard, your low-end rolls off too early, or your stereo image is too wide in mono-incompatible zones, which can mess with playback on small speakers.

Plus, many of these AI mastering plugins now come with streaming previews so you can hear how your final WAV will sound on Spotify, YouTube, club systems, or even cheap earbuds before you export, which is epic.

Combine that with real-time loudness metering, dynamic range displays, and spectrum analyzers, and you’re no longer guessing, you’re golden.

And for bedroom producers who aren’t working in high-end treated rooms (or engineers delivering Atmos-ready masters for film or gaming) this level of intelligent, reference-aware mastering is a massive shift.

It’s making high-quality finalization way more accessible, and that’s a huge part of why the future of music production isn’t just faster or more creative, but open to way more people.

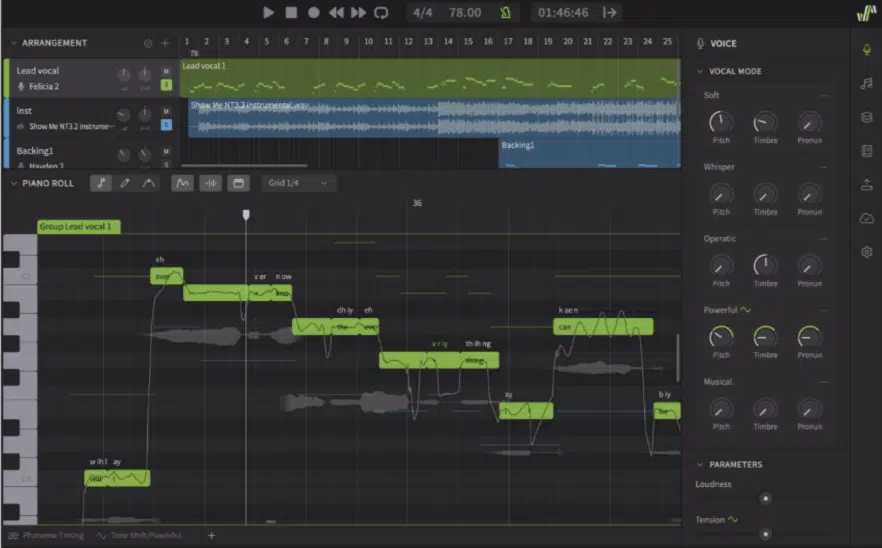

AI Vocal Technology

When you don’t have a vocalist on hand, or just want to experiment with ideas, AI voice tools are giving us a serious edge.

Right now, with platforms like Synthesizer V or Voicify.AI, you can clone a voice from a real artist or select a pre-trained virtual singer, and then feed it lyrics and MIDI to generate full vocal lines.

Say you want a female R&B-style vocal in the style of someone like Jhené Aiko…

All you have to do is choose the model, paste in your lyrics, import your melody MIDI, and the system renders a realistic vocal take with:

- Natural pitch bends

- Breath control

- Even vibrato settings

You can adjust everything down to the vowel transitions or consonant emphasis.

This isn’t just useful for demos: you can bounce full vocal stems, tweak them with formant shifting, or use them as textures behind a real lead.

Tools like these are a big reason the future of music production is letting more independent artists compete with industry-level vocal production without needing a full vocal chain or a top-tier singer.

Side note, if you want to learn all about vocal processing with AI or the most realistic AI singing voice generators, I got you.

The Future Format of Music Production: AI Meets Spatial Audio, Immersive Tech & Streaming

Now that the mix and vocals are locked in, let’s talk about where all of this is heading, and that’s straight into immersive formats and adaptive delivery. Because the future of music production isn’t just about what happens in the DAW; it’s also about how the track gets heard, shaped, and streamed.

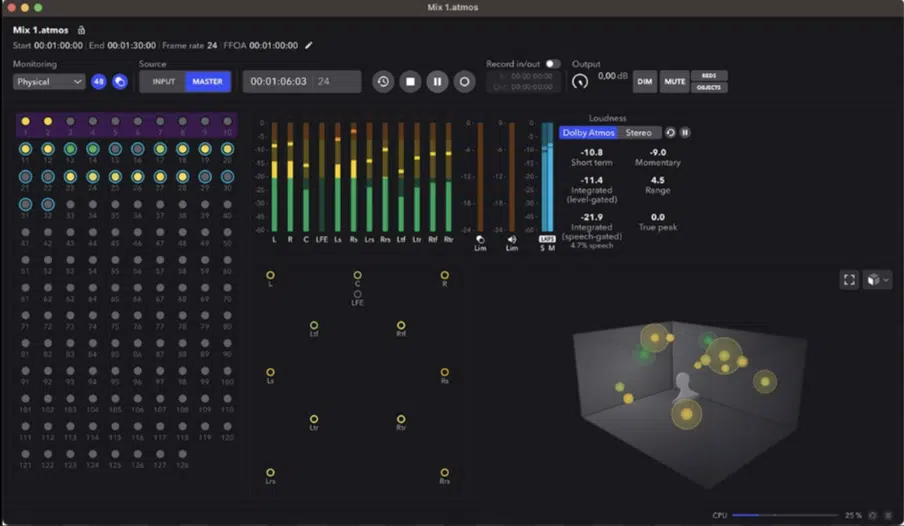

AI-Driven Mixing for Spatial Audio & Dolby Atmos

With spatial audio becoming standard across Apple Music, Amazon Music HD, and even gaming platforms, we’re not just mixing left and right anymore…

We’re mixing in 360°.

AI-driven Atmos tools like Dolby’s Renderer AI and DearVR PRO 2 now help position stems inside a 3D field using automation that’s based on:

- Frequency content

- Energy

- Lyrical intensity

For example, say you’ve got layered synth pads…

It might pan one 30 degrees above the listener’s head and another 20 degrees behind their right shoulder, automatically adjusting reverb depth to avoid smearing.

That kind of spatial placement used to take hours of mapping, at least.
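Under the hood, placing a sound “30 degrees up” or “behind the right shoulder” is a conversion from angles to 3D coordinates. Here’s a Python sketch using one common convention (x right, y front, z up; azimuth measured clockwise from straight ahead). That convention is an assumption: it isn’t Dolby’s own object-coordinate format, the function name is hypothetical, and I’m reading “20 degrees behind the right shoulder” as azimuth 110°.

```python
import math


def spherical_to_xyz(azimuth_deg: float, elevation_deg: float, distance: float = 1.0):
    """Convert a listener-centred direction to (x, y, z).

    Assumed convention: x = right, y = front, z = up.
    Azimuth 0 = straight ahead, positive = clockwise toward the listener's right.
    Elevation 0 = ear level, +90 = directly overhead.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    x = distance * math.cos(el) * math.sin(az)
    y = distance * math.cos(el) * math.cos(az)
    z = distance * math.sin(el)
    return (round(x, 3), round(y, 3), round(z, 3))


# Pad A: straight ahead, raised 30 degrees above ear level
print(spherical_to_xyz(0, 30))
# Pad B: 20 degrees past the right side (azimuth 110), at ear level, so y < 0 (behind)
print(spherical_to_xyz(110, 0))
```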

Producers working in genres like cinematic trap, ambient pop, or electronic hip hop can take advantage of this to create full mixes that wrap around the listener.

You can even bounce them out in ADM format for delivery to Dolby Atmos-enabled streaming services.

And since spatial audio is becoming a key part of playlist placement and music discovery on major platforms, knowing how to shape immersive mixes is one of the smartest moves you can make to stay ahead in the future of music production.

Predictive Streaming Optimization Using AI Metadata (How People Will Consume Music)

Now here’s something a lot of producers overlook: how your tracks are actually being processed once they hit streaming services.

Right now, AI-driven tools like Musiio by SoundCloud or Loudly’s Metadata AI are helping producers tag and prep songs with the right energy levels, mood descriptors, and waveform signatures for better performance on streaming platforms.

For example, let’s say your track is 145 BPM, in the key of F minor, with an emotional tone of “dark / cinematic.”

AI tools can automatically detect all of that and inject metadata that helps algorithms place you in more relevant playlists, which boosts your chances of getting discovered.
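Tempo is one of the easier tags to detect automatically. This Python sketch estimates BPM from a list of detected beat times using the median gap between beats (which shrugs off a bit of jitter), then bundles it with key and mood into a metadata record. The field and function names are illustrative only; they aren’t Musiio’s, Loudly’s, or any streaming service’s actual schema, and real beat detection from audio is out of scope here.

```python
from statistics import median


def estimate_bpm(beat_times_s: list[float]) -> float:
    """Estimate tempo from detected beat times via the median inter-beat interval."""
    intervals = [b - a for a, b in zip(beat_times_s, beat_times_s[1:])]
    return round(60.0 / median(intervals), 1)


def build_track_tags(beat_times_s: list[float], key: str, mood: list[str]) -> dict:
    """Assemble a metadata record (illustrative field names, not a real schema)."""
    return {
        "bpm": estimate_bpm(beat_times_s),
        "key": key,
        "mood": mood,
    }


# Simulated beat detections for a 145 BPM track, with a little detector jitter
beats = [i * 60.0 / 145 + (0.002 if i % 3 == 0 else 0.0) for i in range(32)]
print(build_track_tags(beats, key="F minor", mood=["dark", "cinematic"]))
```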

NOTE: We’re already seeing artists in Latin America and South Korea gain traction using metadata-based submission systems that boost discoverability and match tracks to trending content categories, without even having big label support.

And since streaming is such a big part of the future of music production, knowing how to set up tracks to optimize for AI recommendation systems is 100% essential.

Bonus: 3 Pro-Level Techniques For Music Producers to Stay Ahead in the AI Era/New Music Industry

Before we wrap up, I want to leave you with 3 of the most important strategies to keep your edge sharp as the future of music production keeps evolving. Because even though AI is changing everything, it’s how we use it that separates the average person from the producers who really make noise.

Use AI for Speed, Then Sculpt With Your Own Human Touch

One of the biggest mistakes new producers make is just accepting whatever the AI spits out and calling it a day.

Instead, treat AI tools like Bass Dragon, Chord Genie, or Sound Doctor as a starting point, then go in and tweak:

- Note lengths

- Reverb tails

- Saturation

Or even completely resample the result through a granular synth.

For example, you can take a clean 808 pattern generated by AI, distort it through FabFilter Saturn, resample it with 30ms attack and 55% glide in 808 Machine, and pitch-bend the tail to land with more emotional depth.

That’s how you blend automation with intention.

This is what makes the future of music production so powerful: we get the speed of AI, but we still keep that human touch that gives the music soul.

PRO TIP: After generating a chord progression with Chord Genie, route it through a MIDI FX chain where you randomize velocity (between 62 and 94), quantize with 15% swing, and add micro pitch drift (±10 cents) using Zen Master.

Then bounce that MIDI to audio, reverse one chord stab, automate reverb throws with 25% pre-delay, and sidechain that to a mid-kick.

This process turns something generic into something vibey and full of human character.
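Here’s roughly what that MIDI FX chain does, as a Python sketch: random velocities between 62 and 94, off-beat 16ths pushed late by 15% of a 16th, and a ±10-cent detune expressed as a 14-bit pitch-bend value. It assumes 480 ticks per quarter note and a ±2-semitone bend range (both common defaults), and the helper names are hypothetical, not Zen Master or Chord Genie features. One catch: standard MIDI pitch bend applies to a whole channel, so true per-note drift needs MPE or one channel per note.

```python
import random

PPQ = 480  # ticks per quarter note (a common MIDI resolution; assumed)


def cents_to_pitch_bend(cents: float, bend_range_semitones: float = 2.0) -> int:
    """14-bit MIDI pitch-bend value (0-16383, centre 8192) for a detune in cents."""
    value = 8192 + round(cents / (bend_range_semitones * 100.0) * 8192)
    return max(0, min(16383, value))


def humanize(notes, swing=0.15, vel_range=(62, 94), drift_cents=10.0, seed=None):
    """Humanize (tick, pitch) notes that sit on a 16th-note grid.

    - velocity: uniform random integer within vel_range (inclusive)
    - swing: off-beat 16ths pushed late by `swing` x one 16th
    - drift: random detune within +/- drift_cents, returned as a pitch-bend value
    """
    rng = random.Random(seed)
    sixteenth = PPQ // 4
    out = []
    for tick, pitch in notes:
        if (tick // sixteenth) % 2 == 1:  # off-beat 16th
            tick += round(swing * sixteenth)
        out.append({
            "tick": tick,
            "pitch": pitch,
            "velocity": rng.randint(*vel_range),
            "pitch_bend": cents_to_pitch_bend(rng.uniform(-drift_cents, drift_cents)),
        })
    return out


# Hypothetical chord stabs: (tick, MIDI pitch) on consecutive 16ths
stabs = [(0, 62), (120, 65), (240, 69), (360, 72)]
for note in humanize(stabs, seed=11):
    print(note)
```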

Master Prompting & Curation Like a Boss

AI tools are only as good as the way we feed them.

If you’re typing generic prompts like “make a chill beat,” you’re going to get generic results. Instead, think like a sound designer.

Prompt with something like: “Lo-fi R&B groove, 85 BPM, soft Rhodes, vinyl crackle, minor 7th chords, chorus-heavy guitar loop, no snares.”

That gives tools like Suno or Udio something real to work with, and from there, don’t just accept the output; curate.

Make sure to pick apart the chord progression, swap out the bassline, reverse the melody, and layer your own percussion to bring some originality to the table.

Again, the future of music production isn’t just about generating music with AI; it’s about knowing how to use it so it works like an extension of your creative process.

PRO TIP: If you’re using Udio, try prompting with multi-layered intent: “140 BPM Detroit-style drill beat with flanged hi-hats, reverse snare ghost hits, and melodic sample chopped from jazz trumpet.”

Once generated, take only the top melody, stretch it to half-time, export that to audio, and chop it into 8 slices.

Then load those slices into Serato Sample, pitch them up 3 semitones, and rearrange them to fit a new key.

Now you’ve got a flipped idea with your own style layered on top of AI-generated music.
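The half-time-and-chop step is easy to reason about in samples. This Python sketch assumes a 4-bar, 140 BPM melody at 44.1 kHz (all hypothetical numbers), doubles its length for half-time, and splits it into 8 equal slices; the helper names are made up for illustration. The +3-semitone shift afterwards is a frequency ratio of 2^(3/12), about 1.19.

```python
def slice_points(num_samples: int, slices: int = 8) -> list[tuple[int, int]]:
    """Equal-length (start, end) sample ranges; the last slice absorbs any remainder."""
    size = num_samples // slices
    points = [(i * size, (i + 1) * size) for i in range(slices)]
    points[-1] = (points[-1][0], num_samples)
    return points


def half_time_length(num_samples: int) -> int:
    """A half-time time-stretch (pitch unchanged) doubles the length."""
    return num_samples * 2


# Hypothetical melody: 4 bars of 4/4 at 140 BPM, sampled at 44.1 kHz
sr, bpm, bars = 44_100, 140, 4
melody_len = round(bars * 4 * 60 / bpm * sr)
stretched = half_time_length(melody_len)
for start, end in slice_points(stretched, 8):
    print(start, end)
```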

Play Around with Adaptive AI/Learning-Based Plugins & Other Emerging Technologies (Without Any Legal Concerns)

Some of the most exciting developments in music production right now are plugins that evolve based on how you use them.

These tools “learn” from your mix decisions — if you keep taming 200Hz in vocals, they’ll start suggesting that cut earlier next time.

If you add parallel compression at 30% dry/wet to every kick, they’ll store that preference and apply it automatically.

This is super helpful in long-term sessions or collaborative projects, where consistency matters and you don’t have time to explain everything.

You might be working with a co-producer from North Africa or someone doing sessions out in the Middle East, and having a smart plugin that remembers all your moves helps keep things solid and tight.
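Vendors don’t publish how these “learning” plugins work, so this is only a toy Python sketch of the basic idea behind the 200Hz example: count the moves you make per track type, and once a move has been repeated enough times (3 here, an arbitrary choice), start suggesting it. The class and its method names are hypothetical.

```python
from collections import Counter


class MixPreferenceMemory:
    """Hypothetical 'learning' helper: once you repeat a move enough times on a
    given track type, it starts suggesting that move up front."""

    def __init__(self, min_repeats: int = 3):
        self.min_repeats = min_repeats
        self.history: dict[str, Counter] = {}

    def record(self, track_type: str, move: str) -> None:
        """Log one mix decision, e.g. ("vocal", "cut 200Hz -2dB")."""
        self.history.setdefault(track_type, Counter())[move] += 1

    def suggestions(self, track_type: str) -> list[str]:
        """Moves repeated at least `min_repeats` times, most frequent first."""
        counts = self.history.get(track_type, Counter())
        return [move for move, n in counts.most_common() if n >= self.min_repeats]


memory = MixPreferenceMemory()
for _ in range(3):
    memory.record("vocal", "cut 200Hz -2dB")
memory.record("kick", "parallel comp 30% wet")
print(memory.suggestions("vocal"))  # ['cut 200Hz -2dB']
print(memory.suggestions("kick"))   # []
```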

And as the technology continues to evolve, this kind of machine learning behavior is likely to become standard.

This is why learning to adapt with these tools now will put you ahead of the new generation of producers and artists stepping into this new musical landscape.

Final Thoughts

The future of music production isn’t something we’re waiting on; it’s already here, shaping how we work, think, and produce music every single day.

Whether you’re using AI to lay down ideas faster, shape cleaner mixes, or prep your tracks for streaming, the new AI tools are now powerful enough to support your creativity, not replace it.

What matters most is how you choose to use them, whether as shortcuts, starting points, or even collaborators in your process.

The producers who win won’t be the ones who fight the shift; they’ll be the ones who know how to guide it (make sure to be one of them).

So, stay curious, stay adaptable, and most importantly, keep creating and knocking out innovative, motivational beats all day long.

Until next time…
