As music producers, we know vocal processing is one of those things we just can’t cut corners on, no matter how badly we want to.

You’ve gotta lock in the pitch, clean up the timing, get the tone sitting right, smooth out the harsh spots, and still find a way to keep it feeling emotional and alive.

Plus, make sure your vocal track punches through the mix without sounding over-processed.

But now that artificial intelligence is part of the game, things have changed big time.

You can now use vocal AI to tighten up tuning, create full harmonies, reshape timbre, and even generate entire vocals from scratch if you need to.

It’s not just cool: it actually speeds up the workflow and helps you get more creative in the booth or in post.

That’s why I wanted to break down the most cutting-edge techniques for vocal processing with AI, so you’ve got everything you need right at your fingertips.

In today’s article, I’ll be breaking down things like:

- Real-time harmony generation with AI vocal tools ✓

- Transforming vocal tone with AI voice changers ✓

- Clean, natural-sounding pitch correction ✓

- Building full vocal doubles from one take ✓

- Intelligent de-essing for harsh syllables ✓

- AI-driven timing and alignment for stacked vocals ✓

- Emotion-aware tools to enhance the vocal performance ✓

- Fully custom vocal effects chains ✓

- Ultimate music creation processes to boost your creativity ✓

- Building ambient vocal textures and soundscapes ✓

- Full melody creation and AI voice generators ✓

- Vocal production tips, tricks, and techniques ✓

- Much more about vocal processing with AI ✓

By the time we’re done, you’ll have endless AI-powered vocal techniques to play around with and perfect.

You’ll know how to process vocals like a pro, fix issues fast, and create music that actually stands out in the music industry.

Whether you’re recording a brand-new session or flipping a pack of royalty-free vocal samples into something fire, your overall sound will be much cleaner.

Table of Contents

- Free Vocal Samples by Unison Audio (The Best in the Business)

- Vocal Processing with AI: The Best Tips, Tricks, and Techniques

- 1. AI for Real-Time Vocal Harmonization

- 2. AI-Based Vocal Timbre Transformation

- 3. AI for Precise Pitch Correction/Pitch Shifting

- 4. Creating AI-Generated Vocal Doubles

- 5. Enhancing Vocal Clarity with AI De-Essing

- 6. Automating Vocal Alignment with AI

- 7. Applying AI for Dynamic Vocal EQ Adjustments

- 8. Generating AI-Based Vocal Reverb Tails

- 9. Synthesizing AI-Driven Vocal Textures

- 10. AI for Vocal Breath Control

- 11. AI-Enhanced Vocal Transitions

- 12. AI for Vocal Noise Reduction

- 13. Designing AI-Generated Vocal Effects Chains

- 14. Utilizing AI for Emotion Detection and Vocal Enhancement

- 15. Automating Vocal Gain Staging with AI

- 16. Creating AI-Powered Vocal Stutter Effects

- 17. AI for Vocal Time Stretching

- 18. Enhancing Vocal Presence with AI Exciters

- 19. AI for Adaptive Vocal Compression

- 20. AI-Generated Vocal Soundscapes

- 21. AI for Vocal Melody Generation

- 22. Taking Advantage of a Stem Splitter

- 23. Generating Entire Vocals with AI Voice Generators

- 24. Voice Cloning for Replicating Real Artists or Yourself

- Final Thoughts

Free Vocal Samples by Unison Audio (The Best in the Business)

If you’re starting with low-quality vocals, even the best vocal processing with AI won’t save your mix, and that’s a fact.

That’s why grabbing the Unison Free Vocal Sample Pack is such a no-brainer.

It gives you 20 high-quality vocal loops, chops, and one-shots that are proper, perfectly processed, and super professional.

Plus, they’re 100% royalty-free and were created by the best producers and vocalists in the entire music industry.

And trust me, these aren’t filler samples either: they’ve got character, vibe, and they sit right in the pocket, regardless of what genre you’re working with.

Pro Tip: Try loading a chopped phrase into a stem splitter first, then run it through your AI plugin chain. Pitch correct it, reshape the formants, throw some stutter automation on it, and you’ve got a completely unique vocal sound to build around.

Bottom line, starting with a legendary pack like this makes the AI processing way more effective, and the best part is, it’s completely free.

Vocal Processing with AI: The Best Tips, Tricks, and Techniques

Now that you know just how much vocal processing with AI can benefit your unique music production workflow and style, let’s get into the good stuff: the most epic vocal processing with AI tips, tricks, and techniques. They’ll help you save time, level up your sound, and unlock creative options that just weren’t possible before. Your vocals will be clean, modern, and ready for any genre or streaming platform.

1. AI for Real-Time Vocal Harmonization

Real-time vocal harmonization with vocal AI allows you to instantly build layered harmonies that follow your lead vocal in scale, rhythm, and formant character.

All without extra vocal recordings, mind you.

With cutting-edge plugins like Antares Harmony Engine, Nectar 4, or Dubler 2, you can generate up to 4 harmony parts that adapt dynamically to the pitch and phrasing of your lead.

For example, you can use Harmony Engine to set one harmony a 3rd above, one a 5th below, and a final one a 7th below, with slight pan spreads (+40%, -40%) and formant shifts of ±2 to give each part its own voice.

I recommend humanizing the timing by at least 10–20 ms so the harmonies feel less robotic and more like real singers blending together.

Try automating the mix blend between sections: for instance, 30% wet during a verse and up to 65% wet in the hook to bring out your backing vocals more dynamically.

This technique can drastically speed up your vocal production/music production workflow, especially when you need a super smooth, layered sound for various genres like R&B, gospel, or even drill.
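If you’re curious what those interval settings mean in actual notes, here’s a minimal Python sketch. It assumes a major key and a lead note that already sits in the scale, and the helper names (`diatonic_shift`, `humanize_ms`) are made up for illustration. This is not how Harmony Engine or any other plugin works internally, just the music theory behind the settings.

```python
import random

# Semitone offsets of the major scale, relative to the key root.
MAJOR_SCALE = [0, 2, 4, 5, 7, 9, 11]

def diatonic_shift(midi_note, key_root, steps):
    """Move a MIDI note by a number of scale steps inside a major key.

    Assumes the note belongs to the scale (otherwise .index() raises ValueError).
    A 3rd above is +2 steps, a 5th below is -4 steps, a 7th below is -6 steps.
    """
    octave, pitch_class = divmod(midi_note - key_root, 12)
    degree = MAJOR_SCALE.index(pitch_class)
    octave_shift, new_degree = divmod(degree + steps, 7)
    return key_root + 12 * (octave + octave_shift) + MAJOR_SCALE[new_degree]

def humanize_ms(rng, low=10.0, high=20.0):
    """Pick a random timing offset in ms inside the 10-20 ms range from the text."""
    return rng.uniform(low, high)

lead = 64               # E4, sung in C major (key root C4 = 60)
rng = random.Random(0)  # fixed seed so the sketch is repeatable

harmonies = {
    "3rd above": diatonic_shift(lead, 60, +2),  # G4
    "5th below": diatonic_shift(lead, 60, -4),  # A3
    "7th below": diatonic_shift(lead, 60, -6),  # F3
}
offsets = {name: humanize_ms(rng) for name in harmonies}
```

Each harmony gets its own random offset, which is the same idea as nudging each part by hand so they don’t all land on the grid together.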

2. AI-Based Vocal Timbre Transformation

Timbre is the tone color of a voice. It’s what makes a voice sound breathy, nasal, dark, airy, that kind of thing.

With vocal processing with AI, you can reshape it without changing the pitch or rhythm.

Using Kits.AI, VocalSynth 2, or Respeecher, you can modify the harmonic structure and formants of a vocal while preserving the emotional performance.

For example, if you want a female lead vocal to sound younger and lighter, you can shift the formant up by 2.5 semitones and apply a high-shelf EQ boost around 10 kHz to add sparkle.

I’ve also used AI timbre reshaping to make vocal samples blend better in lo-fi or synth-heavy tracks by darkening the midrange and introducing slight harmonic saturation.

Just don’t forget to reference the tone of your instrumental first…

If it’s warm and vintage, lean toward subtractive tone shaping with saturation instead of boosting harsh frequencies.

This is great for matching vocal styles to different genres, or even making multiple singers sound like the same person when building chorus stacks (super dope).

3. AI for Precise Pitch Correction/Pitch Shifting

Pitch correction has been around forever, but vocal processing with AI makes it feel invisible and natural.

This is especially true when it comes to working with expressive or emotional performances that really need that extra push.

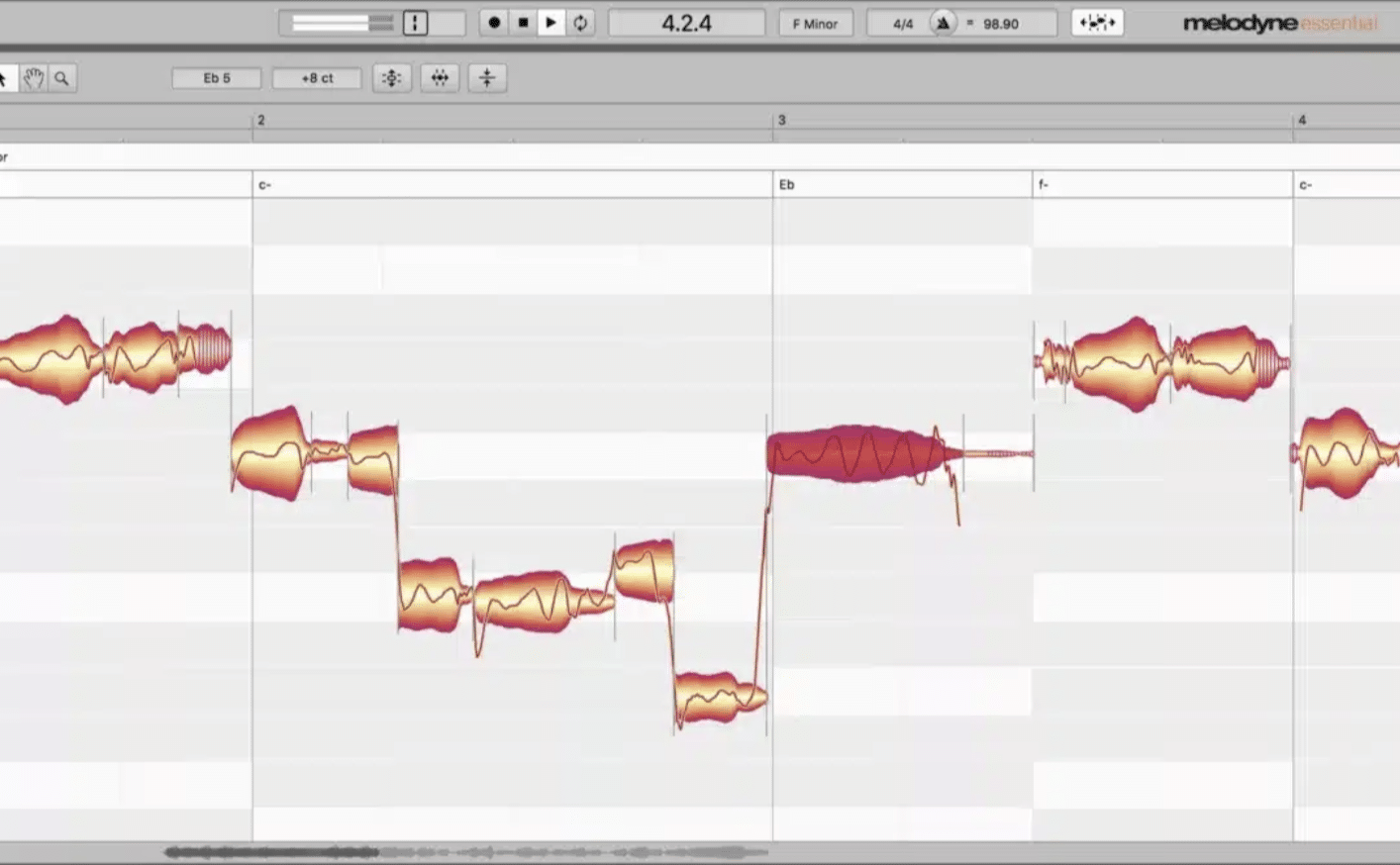

Plugins like Melodyne 5, Auto-Tune Pro X, and Waves Tune Real-Time use AI voice modeling to keep the character of the performance intact while tightening pitch issues.

For example, I’ll usually set Melodyne’s pitch drift control to 25%, pitch modulation at 30%, and only correct the worst 3–5 notes per phrase for a more human result.

If you’re doing pitch shifting for creative purposes (say, moving a vocal up 300 cents for a sped-up hook), make sure to use formant preservation or shift the formants independently to avoid chipmunk artifacts.

You can also automate pitch shifts between lines or words for creative transitions.

In one song, I shifted the final word of a verse down by 4 semitones to emphasize a moody drop, and it came out awesome.

My only advice would be to apply pitch correction before applying saturation or time-based effects.

This way you’re not amplifying tuning issues in the rest of your vocal chain.
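For reference, here’s how cents and semitones translate into frequency ratios in standard equal temperament. It’s a small sketch with hypothetical helper names, handy for sanity-checking what a pitch shifter is being asked to do.

```python
def cents_to_ratio(cents):
    """Frequency ratio for a shift in cents (1200 cents = one octave)."""
    return 2.0 ** (cents / 1200.0)

def semitones_to_ratio(semitones):
    """Frequency ratio for a shift in semitones (100 cents each)."""
    return cents_to_ratio(semitones * 100.0)

up_300_cents = cents_to_ratio(300)         # roughly 1.189, i.e. 3 semitones up
down_4_semitones = semitones_to_ratio(-4)  # roughly 0.794

# A 440 Hz note (A4) moved up 300 cents lands near 523.25 Hz (C5).
shifted_a4 = 440.0 * up_300_cents
```

Formants ride along with that ratio unless the tool preserves them, which is why a plain +300 cent shift starts to sound chipmunky.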

4. Creating AI-Generated Vocal Doubles

Doubling vocals is one of the fastest ways to give your vocal track some extra width, power, and attitude, but tight doubles aren’t always easy to perform.

With AI vocal tools, you can generate realistic doubles with adjustable:

- Timing

- Formant

- Pitch variation

For example, I’ll often create two doubles: one nudged 8 ms ahead of the lead with a formant shift of -1.5, and one delayed by 12 ms with a +2 formant shift.

Then, pan them about 60% left and right.

This creates a stereo field that feels wide and full without clashing with the center lead, and avoids phasing issues you’d get from copy-pasting takes.

You can also apply subtle chorus or microshift effects to the doubles if you want an even more spaced-out feel in your mix.

It’s an easy way to enhance the vocal performance and make your studio-quality vocals sound larger-than-life without relying on take after take.
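Here’s a rough sketch of the numbers behind a pair of doubles like that: timing offsets converted to samples, plus pan gains. The 48 kHz sample rate, the constant-power pan law, and the function names are my assumptions for illustration. Your DAW’s pan law may differ.

```python
import math

def ms_to_samples(ms, sample_rate=48000):
    """Convert a timing offset in milliseconds to a whole number of samples."""
    return round(ms * sample_rate / 1000)

def constant_power_pan(pan):
    """Map pan (-1.0 hard left, 0 centre, +1.0 hard right) to (left, right) gains."""
    angle = (pan + 1.0) * math.pi / 4.0
    return math.cos(angle), math.sin(angle)

# Negative offset = ahead of the lead, positive = behind it (assumed reading).
doubles = [
    {"offset_ms": -8, "pan": -0.6},  # early double, 60% left
    {"offset_ms": 12, "pan": 0.6},   # late double, 60% right
]
for d in doubles:
    d["offset_samples"] = ms_to_samples(d["offset_ms"])
    d["gains"] = constant_power_pan(d["pan"])
```

With a constant-power law, the left and right gains always square-sum to 1, so the doubles keep the same perceived level wherever you pan them.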

5. Enhancing Vocal Clarity with AI De-Essing

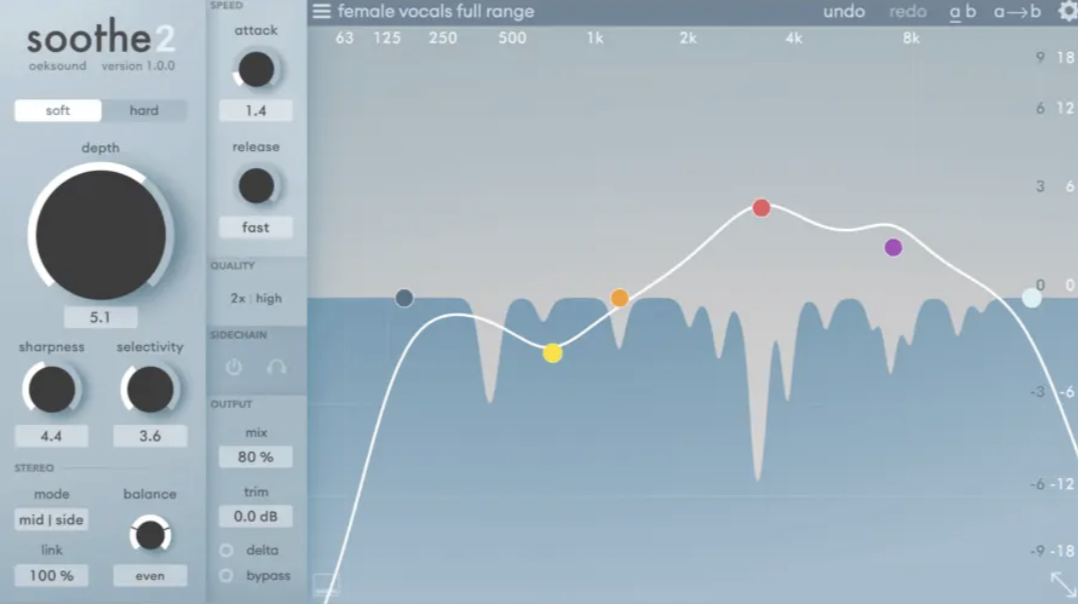

Sibilance (those harsh “S,” “T,” and “CH” sounds) can wreck an otherwise great vocal take, especially when layered with vocal samples or bright synths.

With vocal processing with AI, plugins like iZotope RX 11, Sonnox De-Esser, and Oeksound Soothe2 use frequency-dependent AI filtering to isolate and tame harsh sibilants in real-time.

For example, in RX 11’s Spectral De-Esser, I’ll typically set a sensitivity threshold around -28 dB and set the reduction to about -10 dB in the 6–9 kHz range.

This removes the harsh edge while preserving the airiness and detail in the voice, especially on breathy or whisper-style vocal styles.

A trick I love: automate the de-esser mix down in verses (where things are chill) and up in the chorus when the vocals are pushed harder or layered with cymbals.

It’s a small move, but it can completely clean up a mix and give your track that polished shine, so when people hear it, they instantly think “professional.”
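To make the threshold and reduction numbers concrete, here’s a generic threshold-based sketch in Python. It is not how RX or any other de-esser works internally. It only shows how a -28 dB threshold and a 10 dB reduction cap interact, and the function names are hypothetical.

```python
def db_to_linear(db):
    """Convert a gain in dB to a linear amplitude multiplier."""
    return 10.0 ** (db / 20.0)

def deess_reduction_db(band_level_db, threshold_db=-28.0, max_reduction_db=10.0):
    """How many dB to pull the sibilant band down for a given band level.

    Nothing happens below the threshold; above it, the overshoot is
    removed up to the reduction cap.
    """
    overshoot = band_level_db - threshold_db
    return min(max(overshoot, 0.0), max_reduction_db)

# Sibilant-band levels (6-9 kHz) for a quiet, medium, and harsh "S".
results = {}
for level in (-35.0, -24.0, -12.0):
    cut = deess_reduction_db(level)
    results[level] = (cut, db_to_linear(-cut))
```

The quiet "S" is left alone, the medium one loses 4 dB, and the harsh one hits the 10 dB cap, which works out to roughly a third of its original amplitude.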

Side note, if you want to learn everything about de-essing or discover the absolute best de-esser plugins, I got you.

6. Automating Vocal Alignment with AI

When you’re stacking multiple vocal stems, like lead, doubles, and backing vocals, timing can become a huge mess fast…

Luckily, plugins like Synchro Arts VocAlign Ultra, Revoice Pro, or Aligner by Hit’n’Mix let you auto-align all your layers based on the timing and the pitch contour of the lead.

For example, I’ll take a double that starts 60 ms late and ends 90 ms early, and VocAlign will match it to the lead within ±10 ms while keeping natural drift and vibe intact.

I always align my layers before EQ or compression to avoid locking in phase problems (trust me, aligning after processing is a total nightmare).

You can even set custom alignment strength curves in Revoice Pro 5 if you want the chorus tight but let verses breathe a bit more.

If your creative vision includes stacked, wide vocals, alignment is what separates a clean mix from a sloppy one, so don’t skip it!
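If you want a feel for how a timing offset can be measured in the first place, here’s a toy cross-correlation sketch in plain Python. Real alignment tools do far more (they warp timing across the whole phrase), and this is not VocAlign’s algorithm. The function name and the tiny 1 kHz "sample rate" are assumptions to keep the example readable.

```python
def best_lag(lead, double, max_lag):
    """Return the lag (in samples) where `double` best matches `lead`.

    A positive result means the double is late relative to the lead.
    """
    def score(lag):
        return sum(
            x * double[i + lag]
            for i, x in enumerate(lead)
            if 0 <= i + lag < len(double)
        )
    return max(range(-max_lag, max_lag + 1), key=score)

SAMPLE_RATE = 1000  # toy rate so that one sample equals 1 ms

pulse = [1.0, 0.5, -0.3, 0.2]                # stand-in for a vocal onset
lead = [0.0] * 20 + pulse + [0.0] * 176      # onset at 20 ms
double = [0.0] * 80 + pulse + [0.0] * 116    # same onset, 60 ms later

lag = best_lag(lead, double, max_lag=100)
late_by_ms = lag * 1000 / SAMPLE_RATE
```

Once you know the double is 60 ms late, sliding it back by that amount is the crudest possible alignment. Dedicated tools refine that per syllable.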

7. Applying AI for Dynamic Vocal EQ Adjustments

Every voice shifts tonally depending on the emotion, register, and volume, and that’s where static EQ can fall short.

With vocal processing with AI, you can apply dynamic EQ curves that react in real-time to the vocal performance, catching harshness only when it flares up.

For example, Gullfoss and Neutron 4’s AI-powered EQ let you set frequency ranges like 2.5–5 kHz to soften when the vocals get aggressive, or boost low-mids only when a section lacks body.

This kind of intelligent tone-shaping keeps your vocal track polished across every phrase without over-processing or killing the energy of the sound.

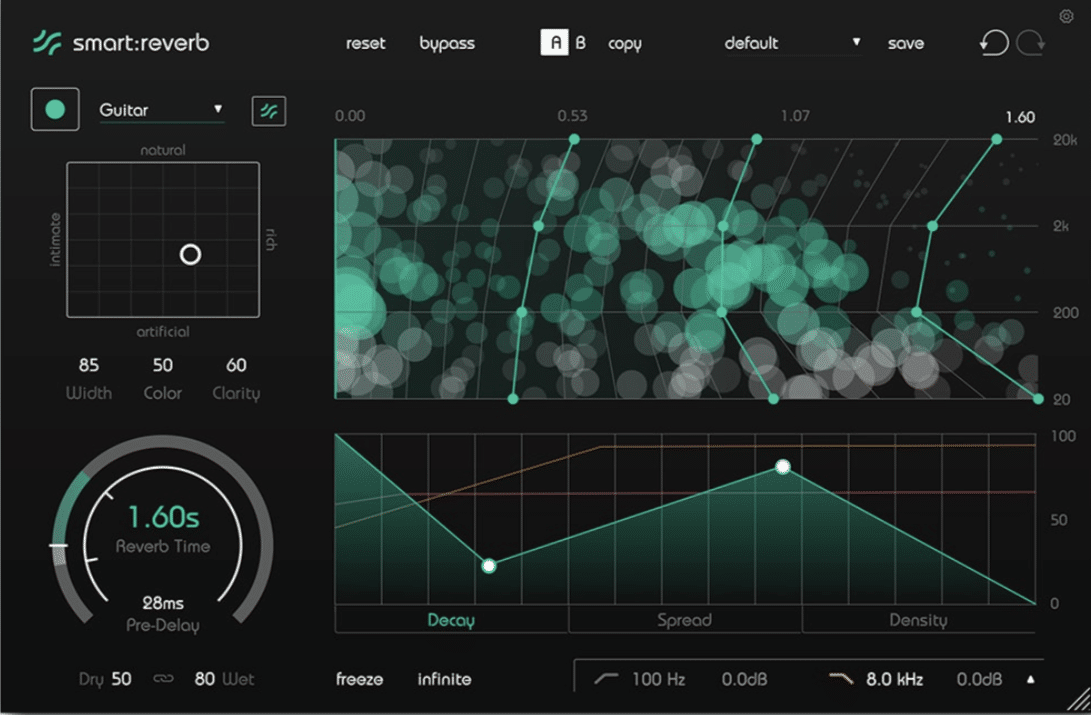

8. Generating AI-Based Vocal Reverb Tails

Adding reverb is about more than picking a preset: it’s about matching the decay and tonal shape of your vocals so they feel embedded in the track.

AI-powered reverb plugins like Neoverb and Sonible smart:reverb analyze your vocal recordings and create custom-tailored reverb tails based on:

- The voice

- The instrumental

For example, you can use Neoverb’s Reverb Assistant to generate a 2.2-second plate-style tail that hugs a fast-paced hook, while avoiding frequency masking in the 400–800 Hz range where most of your beat lives.

My tip is to automate the AI’s Wet/Dry blend during verses to keep things dry and focused, then crank it for impact when the chorus drops.

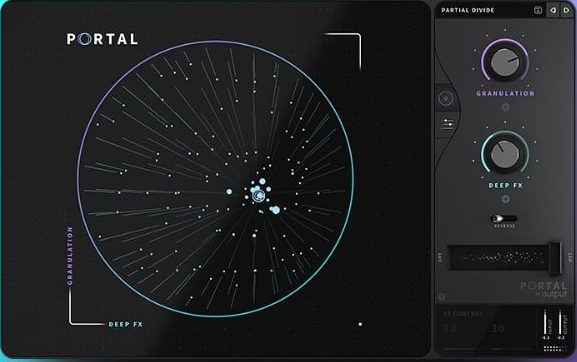

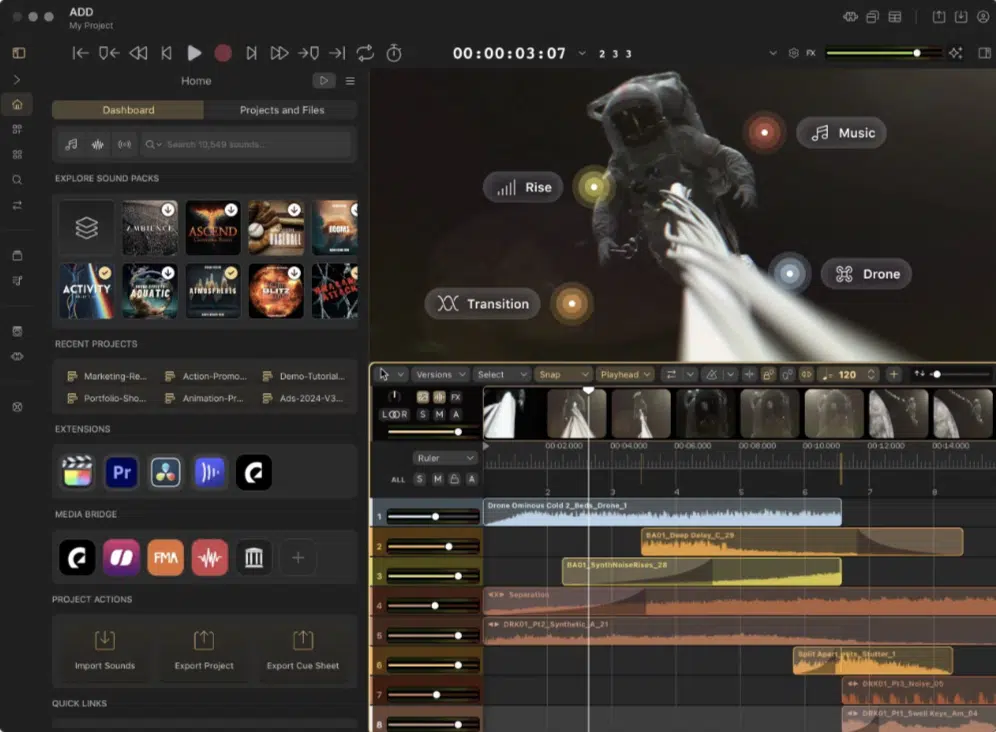

9. Synthesizing AI-Driven Vocal Textures

AI-driven vocal textures are layered, atmospheric versions of a voice that aren’t meant to be front-and-center but can totally shape the overall sound of your track.

You can use plugins like Output’s Portal, VocalSynth 2, or even Adobe Enhance Speech + resampling chains to stretch, granulate, and manipulate vocal samples into ambient textures.

For example, try duplicating a dry lead vocal, applying heavy formant shifts with AI voice changers, and running it through granular delay with randomized pitch modulation.

This will give you a sick synth-like drone behind your chorus.

This is one of my favorite techniques when you’re working on cinematic intros, ambient bridges, or transitional builds that need depth without new melodic elements.

10. AI for Vocal Breath Control

Breath sounds can either add realism or clutter your mix, and that balance is super hard to nail manually, let’s be honest.

With vocal processing with AI, tools like iZotope RX 10, Sonnox Claro, or Cedar Studio use AI voice analysis to isolate breaths and either:

- Reduce them

- Reshape them

- Remove them completely

For example, set RX’s Breath Control module to reduce breaths by 12–15 dB instead of muting them outright.

This will keep the human voice vibe intact without overpowering quiet verses.

A pro tip I suggest is to leave slight breaths in during emotional sections to enhance the vocal performance, and automate breath removal more aggressively in fast, tight sections with lots of backing vocals.

11. AI-Enhanced Vocal Transitions

Vocal transitions are all about smoothing jumps between phrases, switching sections, or moving between different vocal styles, and AI can help you blend those like butter.

You can use Devious Machines Texture, Accentize VoiceGate, or Neutron’s Transient Shaper with Mix Assistant to give you smart transient and tone smoothing.

So if you want some super clean transitions, this is how you get them.

For example, if your vocal track has a clipped word at the start of a hook, AI can identify the transient mismatch and fill in a smooth transition tail, even adding synthetic texture that matches the singer’s voice.

You can also use AI to morph long sustained notes into vocoded trails that bridge into a drop, especially when paired with AI-generated vocal textures underneath.

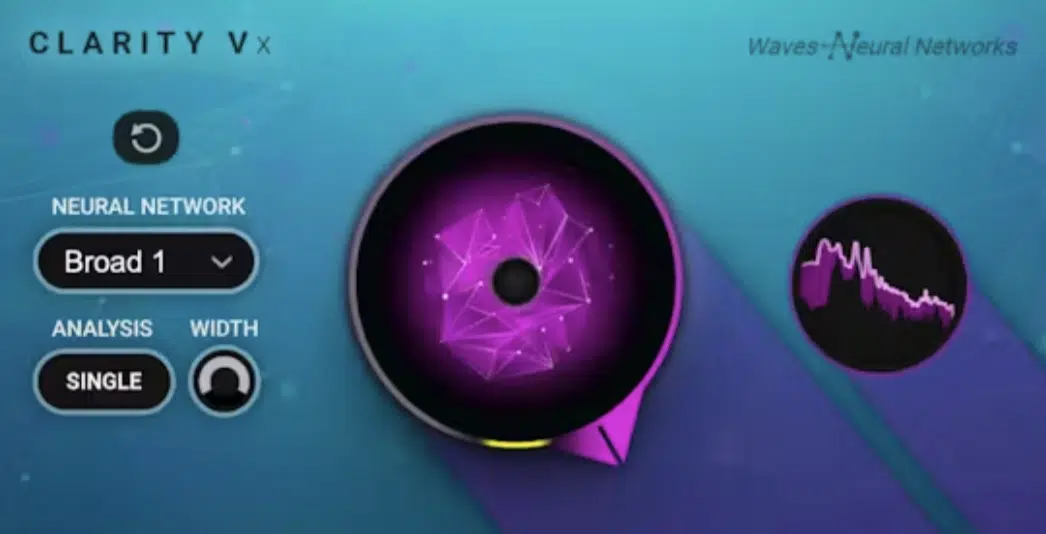

12. AI for Vocal Noise Reduction

Recording at home or in untreated spaces usually means dealing with background hiss, hum, or room reflections in your vocal recordings.

Vocal processing with AI tools like Cedar DNS One, iZotope Voice De-noise, or Accusonus ERA Noise Remover lets you isolate and remove noise.

And it’s all based on AI voice modeling rather than just frequency gating, which is cool.

For example, set the threshold at -30 dB with a reduction range of 18 dB, and you’ll keep the natural top-end of your vocals while completely removing air conditioning hum or computer fan noise.

My advice? Always clean your vocal samples early in the chain so you’re not EQing or compressing unwanted audio artifacts deeper into the mix.

Remember, when it comes to vocal processing with AI, it’s all about the littlest details that can make the biggest differences.

13. Designing AI-Generated Vocal Effects Chains

Instead of building your vocal chain from scratch every time, vocal processing with AI lets you generate effects chains based on the unique:

- Tone

- Pitch range

- Delivery of the vocal recordings

The absolute best plugin for the job, hands down, is Sound Doctor, which analyzes your take and suggests precise combinations of EQ, compression, reverb, and saturation.

For example, it might suggest an EQ cut at 350 Hz to clean up muddiness, a multiband compressor with different thresholds for loud and soft sections, and a harmonic exciter set to “Retro” with a 30% blend to lift the highs.

Once the AI gives you a starting point, disable auto-learn, then tweak based on the style of your track instead of leaving it static.

Keep in mind that AI gets you 80% of the way there in seconds, but your creative vision should still guide the final polish (that’s the key).

Also, try running your backing vocals through a duplicate chain with more aggressive saturation or stereo widening for better separation in the mix, especially when you’ve got dense arrangements.

14. Utilizing AI for Emotion Detection and Vocal Enhancement

Some AI vocal tools now go beyond basic pitch and timing… They’re analyzing the emotional content of a performance and helping you shape it.

This way, you’ll be able to create tracks that hit listeners right in the feels and excel when it comes to vocal enhancement.

Cutting-edge plugins like Audio Design Desk’s Emotion FX, Moises AI, and Descript Overdub use machine learning to identify:

- Energy shifts (flat or robotic delivery)

- Subtle dynamic inconsistencies

For example, you can use Emotion FX to detect when a line lacks emotional presence.

Then, apply targeted formant shaping, air band EQ, and subtle compression boosts only on those syllables.

This makes a huge difference on vocals where the delivery feels too sterile or underperformed without needing to re-record.

I recommend using this after comping but before mastering your chain, so the AI’s energy mapping reflects the actual structure of the full track, but dealer’s choice.

You’ll notice it instantly boosts your ability to enhance the vocal performance, especially in emotional genres like pop ballads, R&B, or cinematic scores.

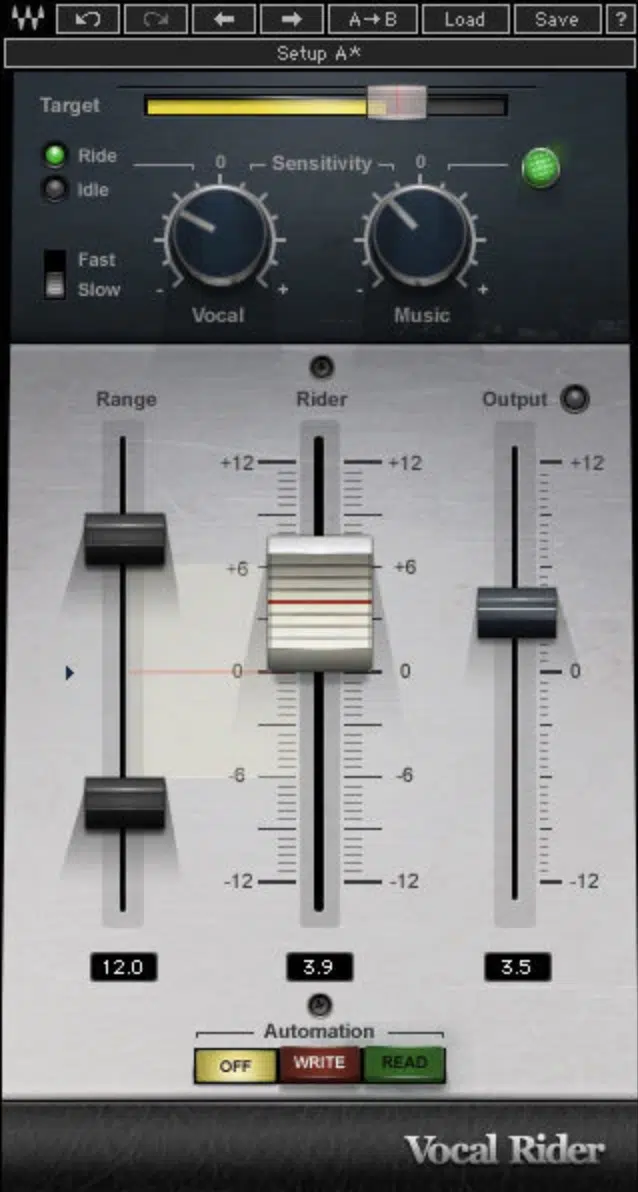

15. Automating Vocal Gain Staging with AI

Getting your vocal levels consistent across a song is super important, even before you hit compression, and AI voice tools make it automatic.

With professional-quality plugins like Waves Vocal Rider, iZotope Nectar, or Synchro Arts Revoice Pro, you can detect and adjust gain dynamically.

And it’s all based on perceived loudness, not just raw peaks.

For example, set Vocal Rider’s target range between -9 dB and -6 dB RMS, and it’ll subtly ride the fader in real time to keep your vocal track sitting perfectly in the mix.

This removes the need for heavy compression later, which helps you keep more of the original vocal dynamics intact.

It’s great for all genres, but especially acoustic, soul, or melodic drill where too much squash kills the emotion.
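Here’s a bare-bones sketch of the gain-riding idea: measure a block’s RMS level and work out how many dB it needs to land inside the -9 to -6 dB window. Plain RMS is only a crude stand-in for perceived loudness, and this is not how Vocal Rider works internally. The function names are hypothetical.

```python
import math

def rms_db(block):
    """RMS level of a block of samples in dB relative to full scale."""
    mean_square = sum(x * x for x in block) / len(block)
    if mean_square == 0:
        return float("-inf")
    return 20.0 * math.log10(math.sqrt(mean_square))

def ride_gain_db(level_db, low_db=-9.0, high_db=-6.0):
    """Gain change (dB) that moves a level into the target window."""
    if level_db == float("-inf"):
        return 0.0                   # silence: leave it alone
    if level_db < low_db:
        return low_db - level_db     # too quiet: push up to the floor
    if level_db > high_db:
        return high_db - level_db    # too loud: pull down to the ceiling
    return 0.0                       # already in the pocket

quiet_phrase = [0.1, -0.1] * 100     # about -20 dB RMS
loud_phrase = [1.0, -1.0] * 100      # 0 dB RMS
quiet_move = ride_gain_db(rms_db(quiet_phrase))  # about +11 dB
loud_move = ride_gain_db(rms_db(loud_phrase))    # -6 dB
```

A real rider smooths these moves over time so the fader glides instead of jumping from block to block.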

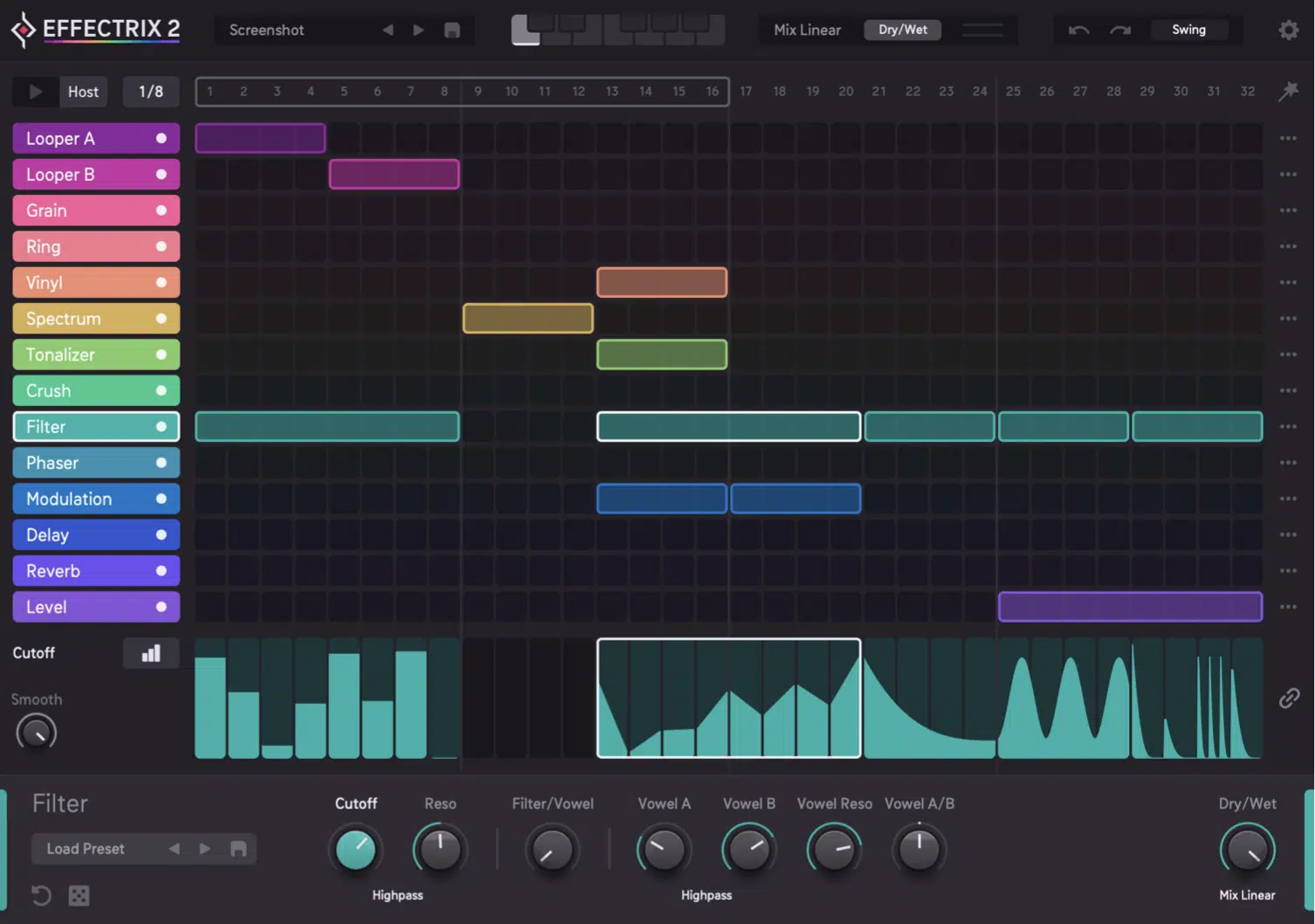

16. Creating AI-Powered Vocal Stutter Effects

Stutter effects are great for adding rhythm and glitch energy to vocals, especially in electronic or hyperpop music, but they really work great anywhere.

Luckily, vocal processing with AI makes them smarter and way easier to control.

Instead of slicing waveforms manually, you can use tools like Effectrix 2, Stutter Edit 2, or RipX DAW Pro, which apply stutters intelligently based on:

- Transient detection

- Pitch tracking

For example, you can grab a phrase like “don’t go” and apply a 1/16 stutter on the word “don’t,” pitched up by +3 semitones using AI voice formant shift, and layered with a widened delay throw.

PRO TIP: Automate the vocal AI plugin’s dry/wet control so the stutter moment pops out, then tucks itself back in for seamless track flow.
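If you ever need to place stutter slices by hand, the grid math is simple. This sketch assumes a 120 BPM session with the quarter note getting the beat, and the helper names are made up for illustration.

```python
def note_length_ms(bpm, division=16):
    """Length of one 1/division note in ms (quarter note gets the beat)."""
    return 60000.0 / bpm * 4.0 / division

def stutter_times_ms(start_ms, bpm, repeats, division=16):
    """Start times for a run of back-to-back stutter slices."""
    step = note_length_ms(bpm, division)
    return [start_ms + i * step for i in range(repeats)]

slice_ms = note_length_ms(120)                # one 1/16 note at 120 BPM
hits = stutter_times_ms(0.0, 120, repeats=4)  # four repeats of "don't"
pitch_ratio = 2.0 ** (3 / 12)                 # +3 semitones as a frequency ratio
```

At 120 BPM a 1/16 slice is 125 ms, so four repeats fill exactly one beat.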

17. AI for Vocal Time Stretching

Time stretching has come a long way, and vocal processing with AI now lets you stretch or compress vocal recordings by huge amounts without adding artifacts or weird robotic tones.

Instead of traditional elastic audio, tools like Zynaptiq ZTX Pro, Elastique Pro, and Ableton Live’s Complex Pro Warp use AI voice modeling to preserve (independently):

- Formants

- Breathiness

- Transients

For example, you can take a 1.5-second phrase and stretch it to 3.2 seconds for a halftime vocal moment without changing the pitch, and still keep it sounding human.

My go-to trick is to create a slow-down effect during pre-choruses, where you stretch the last word by 200% while adding reverb automation to let it bleed into the drop.

You can also use AI tools to compress backing takes by 15–20% to tighten up delivery without losing tone, which helps vocal layers sit more tightly in your mix.

Just make sure to always bounce to audio after stretching and apply a short fade at the tail. AI is powerful, but clicks can still sneak in without it, so watch out.
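Here’s a quick sketch of the arithmetic behind those stretch amounts, plus the tail fade. The function names are hypothetical, and the linear fade is just one simple choice.

```python
def stretch_ratio(original_s, target_s):
    """How many times longer (or shorter) the stretched audio is."""
    return target_s / original_s

def fade_out(samples, fade_len):
    """Return a copy with a linear fade over the last `fade_len` samples."""
    fade_len = min(fade_len, len(samples))
    out = list(samples)
    start = len(out) - fade_len
    for i in range(fade_len):
        out[start + i] *= 1.0 - (i + 1) / fade_len
    return out

ratio = stretch_ratio(1.5, 3.2)     # about 2.13x; a strict half-time would be 2.0x (3.0 s)
tightened_s = 1.5 * (1 - 0.15)      # the same phrase compressed by 15%
tail = fade_out([1.0] * 8, 4)       # last four samples ramp down to zero
```

The fade guarantees the final sample is zero, which is what prevents a click at the clip boundary.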

18. Enhancing Vocal Presence with AI Exciters

When your vocal track feels dull or buried (especially in a crowded mix) a vocal AI plugin with an exciter module can help bring back clarity and sparkle without harshness.

Vocal processing with AI lets you dial in harmonic content that follows the tone and range of your voice, instead of applying generic brightness across the board.

For example, Neutron 4’s Exciter, Oeksound Spiff, and smart:EQ 3 can isolate frequency zones like 5–9 kHz and apply subtle saturation or harmonic shaping, but only when your vocals dip in clarity, which is key.

I usually aim for a 15% blend with “Warm” mode active in Neutron’s Exciter when working with airy R&B leads.

Just enough to enhance the vocal performance without competing with cymbals or synths is usually where it’s at.

19. AI for Adaptive Vocal Compression

Standard compressors respond to level, but AI voice compressors respond to energy and tone.

I know it sounds like a little thing, but it really makes a huge difference when processing emotional, expressive vocals.

With vocal processing with AI, tools like Neutron 4’s Compressor, T-De-Esser Pro, or Waves Clarity Vx can automatically adjust attack and release based on the:

- Phrasing

- Delivery

For example, if a singer pushes hard on a word like “falling,” the AI adapts the release time so the compression breathes naturally around the next syllable.

This avoids the over-squashed feeling you sometimes get with static settings, especially in dynamic ballads or melodic hip-hop tracks.

Start with a 3:1 ratio, threshold at -20 dB, and let the vocal AI plugin shape everything else, then tweak your output gain to match the original perceived sound level.
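To see what a 3:1 ratio and a -20 dB threshold actually do to a level, here’s a static hard-knee sketch. It leaves out attack and release entirely (the part the adaptive tools handle), so treat it as the textbook gain math rather than any plugin’s behavior. The function names are hypothetical.

```python
def compressor_gain_db(level_db, threshold_db=-20.0, ratio=3.0):
    """Gain change in dB (zero or negative) for a given input level."""
    if level_db <= threshold_db:
        return 0.0
    compressed = threshold_db + (level_db - threshold_db) / ratio
    return compressed - level_db

def makeup_gain_db(input_levels_db, threshold_db=-20.0, ratio=3.0):
    """Rough makeup gain: the average reduction, flipped in sign."""
    reductions = [
        compressor_gain_db(level, threshold_db, ratio)
        for level in input_levels_db
    ]
    return -sum(reductions) / len(reductions)

loud_word = compressor_gain_db(-8.0)     # 12 dB over threshold -> 8 dB of reduction
soft_word = compressor_gain_db(-25.0)    # below threshold -> untouched
makeup = makeup_gain_db([-8.0, -25.0])   # about +4 dB to level-match
```

A word that peaks 12 dB over the threshold comes out only 4 dB over it, and the makeup gain is what you’d add back to match the original level by ear.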

20. AI-Generated Vocal Soundscapes

Vocal soundscapes are ambient, layered, and often ethereal textures created from vocals, designed to fill space and build emotion behind a lead or instrumental section.

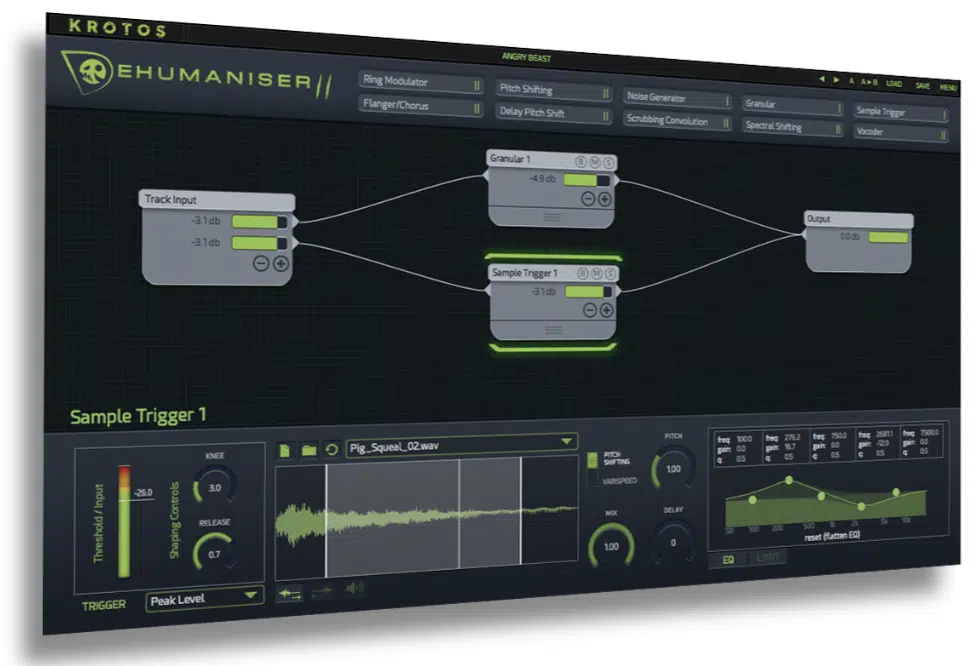

With vocal processing with AI, you can generate these from scratch using cutting-edge plugins like Output Portal and Dehumaniser 2, or even AI voice changers combined with heavy reverb and granular delay.

For example, take a single breathy ad-lib and process it through Valhalla Shimmer with a 100% wet signal and +12 semitones pitch shift.

Then, bounce and reverse it for an ambient rise.

Try building a stereo pad from chopped vocal samples pitched an octave apart and layered with AI-generated shimmer tails.

It’s especially powerful under bridges or between hooks.

This technique is especially good for music producers working in cinematic, chillwave, or ambient pop (super popular in the music industry right now).

It can help your track feel layered and emotionally rich without needing more instruments.

21. AI for Vocal Melody Generation

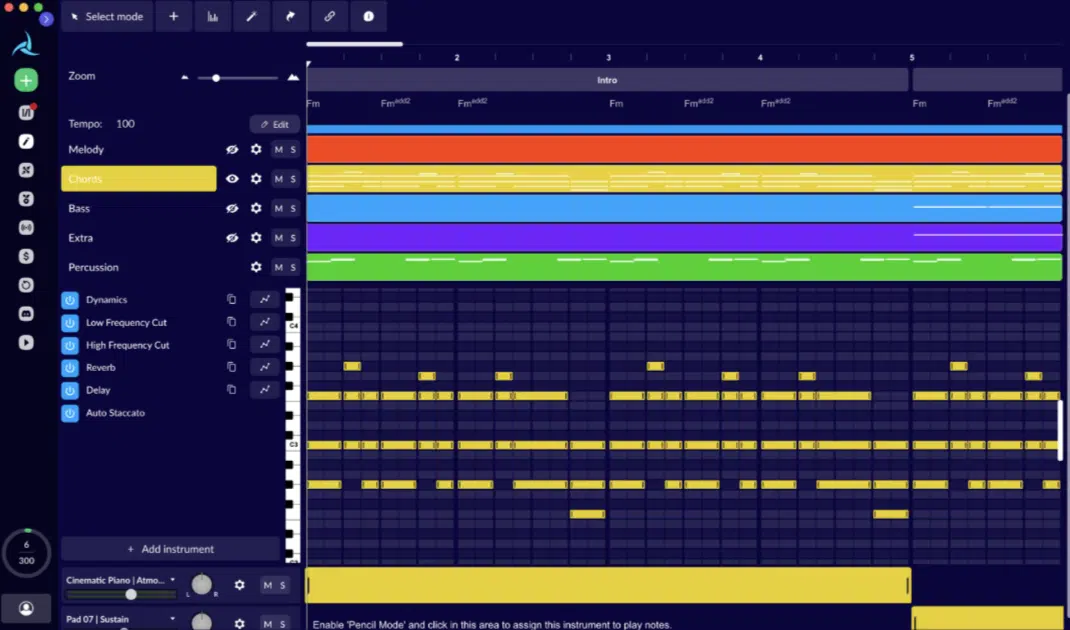

When your creative process is lacking, AI can actually help you create original vocal melodies from scratch, or lay down new ones based on your chords or reference tracks.

With plugins like Orb Producer Suite, Hookpad AI, or AIVA, you can generate melodies that follow your key, tempo, and even suggested vocal styles.

It’s incredibly inspiring to be honest, and it’ll help you with your overall vocal production workflow/skills.

For example, say your chorus needs a melodic lift…

Simply feed in your chord progression, select a “pop female vocal” tone, and the vocal AI plugin can spit out 4–5 variations with rhythm and phrasing already mapped.

One trick I use is to isolate just the first or last note of a generated line, then manually write in between to make it feel more original.

You can also generate multiple takes, export the MIDI, and run it through AI voice generators like Emvoice One or Synthesizer V for immediate playback.

This will help your vocals stand out, especially when your artist hasn’t quite landed on a memorable hook yet.

22. Taking Advantage of a Stem Splitter

A stem splitter lets you break any full track or vocal recordings into isolated elements, like lead vocals, backing vocals, drums, instruments, and so on.

AI tools make this faster and more accurate than ever.

Whether you’re remixing, extracting a clean acapella, or salvaging a rough bounce, vocal processing with AI lets you get pro-level isolation with little effort.

For example, RX 11 Music Rebalance, Spleeter, or LALAL.AI use deep neural networks to identify and split sources. With the right settings, you can extract vocal stems with less than 2% bleed.

Once you’ve isolated your vocals, you can rebuild the backing tracks or add royalty-free samples/layers underneath to completely flip the vocal production.

This is great when working with old demos, stems from clients, or even tracks downloaded from streaming platforms.

Just don’t forget to always check the export level of each stem to avoid clipping when rebuilding the full track (aim for about 6 dB of headroom per stem, meaning peaks around -6 dBFS, if you’re mastering later).

This is a music production must, so don’t overlook it.
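Checking a stem’s headroom comes down to one measurement. This sketch assumes float samples where 1.0 is full scale, and the function names are hypothetical.

```python
import math

def peak_dbfs(samples):
    """Peak level in dBFS for float samples where 1.0 is full scale."""
    peak = max(abs(x) for x in samples)
    if peak == 0:
        return float("-inf")
    return 20.0 * math.log10(peak)

def trim_for_headroom_db(samples, target_peak_dbfs=-6.0):
    """Gain change (dB) that puts the stem's peak at the target level."""
    level = peak_dbfs(samples)
    if level == float("-inf"):
        return 0.0  # silent stem: nothing to trim
    return target_peak_dbfs - level

stem = [0.0, 0.9, -1.0, 0.4]         # peaks right at full scale
trim_db = trim_for_headroom_db(stem)  # -6 dB of trim needed
trimmed = [x * 10.0 ** (trim_db / 20.0) for x in stem]
```

After the trim, the loudest sample sits at about half of full scale, which is what 6 dB of headroom looks like in numbers.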

23. Generating Entire Vocals with AI Voice Generators

If you don’t have a singer on hand or you want to create something totally custom, you can easily generate entire vocal performances using AI voice generators.

There’s an abundance of plugins (Synthesizer V, Voicemod Text-to-Song, and Emvoice One) that let you input your lyrics and melody and choose from dozens of pre-trained voice models.

For example, you can write a topline, select a male falsetto AI voice, and have it sung back in minutes with full dynamics, vibrato, and phrasing.

I usually tweak the MIDI velocity to shape the emotional intensity: lower velocities for softer verses, higher for power in the chorus.

The best part is that these tools give you full ownership of your generated audio, so you can release it commercially on streaming platforms without dealing with session singers or licensing issues.

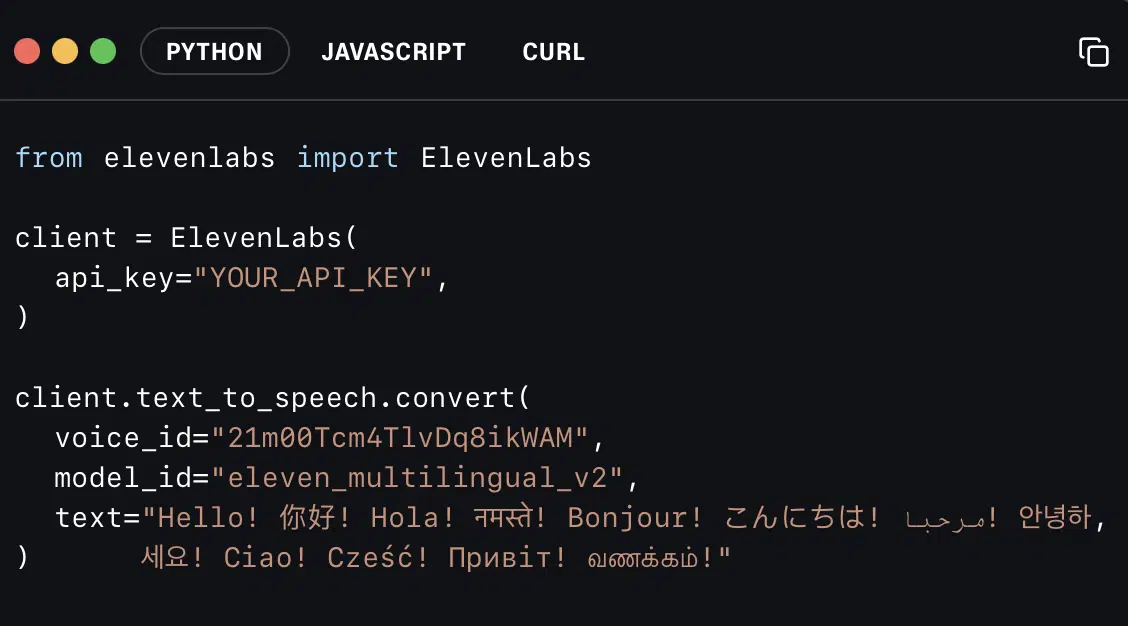

24. Voice Cloning for Replicating Real Artists or Yourself

Voice cloning takes vocal AI to the next level by letting you recreate a real person’s voice, whether it’s a famous artist, a featured collaborator, or even your own.

With ElevenLabs, Respeecher, iSpeech, and Voicery, you can train a model with as little as 60–120 seconds of clean vocal recordings and generate any phrase with their:

- Tone

- Pacing

- Inflection

For example, clone your own voice and use it to quickly sketch out demo ideas with full emotion and phrasing.

This way, your artist can simply sing once, and you can reuse the same tone for alternate takes or language versions.

And if you want a famous-style delivery without hiring a celebrity vocalist, that’s now right within reach (just be mindful of legal use if you don’t have permission).

Bottom line, vocal processing with AI here becomes a powerful tool for expanding your branding, speeding up your AI music production process, and keeping your sound consistent.

Final Thoughts

And there you have it: absolutely everything you need to know about vocal processing with AI (artificial intelligence).

With these epic tips, tricks, and techniques, you’ll be able to streamline your workflow, seriously up your creativity, and produce vocals that truly stand out.

Plus, you’ll save time, maintain consistency, and unlock new creative possibilities like a professional, things you might not have even dreamed of.

Just remember that vocal processing with AI is all about starting with high-quality vocals and applying the right techniques to enhance them.

So, always make sure your source material is top-notch and experiment with different AI tools to find what works best for your unique style.

Otherwise, you might not achieve the ultra polished sound you’re aiming for.

As a special bonus, you’ve got to check out the legendary Unison Vocal Series – Aaron Richards “Talk About It” Vol. 1.

I thought since we gave you the best free vocal samples in the beginning, you should check out the best premium vocal samples at the end.

This invaluable vocal sample pack includes 80 unique vocal lines, 137 ad-libs, and 120 custom-made vocal chops (available in both dry and wet versions).

They were all performed by the insanely talented Aaron Richards.

With over 10 million plays and collaborations with artists like Helberg and Ephixa, Aaron’s vocals bring unparalleled quality to your tracks.

It also features two in-depth vocal processing video tutorials and three valuable bonuses: the Unison Theory Blueprint PDF, Unison Vocal Secrets PDF, and a Unison Loop Pack with 200 WAV loops spanning 20 genres.

These resources are designed to help you integrate professional vocals into your tracks in seconds (super beneficial).

Bottom line, when it comes to vocal processing with AI, you have to seriously invest in quality source material and stay open to experimenting with various techniques.

This way, you’ll never have to worry about your vocals sounding amateur or out of place ever again.

Just don’t forget to really take advantage of all the things we’ve talked about today and never be afraid to think outside the box, because that’s where the magic lies.

Until next time…
