Audio latency is the delay between when an audio signal is input into a system and when it emerges from speakers or headphones.

This delay, if not managed properly, can disrupt the recording process, affect live performances, and ultimately compromise the quality of your music.

As a music producer, it’s important to understand how latency works so you can optimize your tracks and maintain the highest possible sound quality.

In today’s article, we’ll break down:

- What is audio latency ✓

- Causes of audio latency & how to avoid these major problems ✓

- The impact of audio latency on music production ✓

- Technical aspects of audio latency ✓

- Tips, tricks, and techniques to reduce latency ✓

- The role of your audio interface ✓

- Buffer size and sample rate effects ✓

- Common audio latency myths debunked ✓

- Much more about latency ✓

After reading this article, you’ll have a strong understanding of managing latency.

It will help you streamline your workflow and seriously enhance your music production process, quality, and appeal.

With the insights and strategies provided, you’ll be equipped to tackle latency issues head-on and avoid all major problems.

It will ensure your creative process remains uninterrupted and your final output is pristine and professional.

So, let’s dive in…


What is Audio Latency? Breaking it Down

Audio latency is a term that frequently comes up in the world of digital music production. Understanding it is key to keeping your tracks high-quality and in sync. So, let’s break down what audio latency is, so you can understand and manage it properly.

-

The Basics of Audio Latency

Audio latency refers to the delay between when an audio signal is generated and when it is ultimately heard.

This sneaky little delay can be caused by various factors in the digital recording, music production, and audio engineering processes.

For instance, when you record a guitar part, there’s a slight delay from the moment you strum the strings to when you hear the sound through your headphones.

This delay, often measured in milliseconds, is super important for music producers (like yourself) to understand and manage.

Managing audio latency effectively ensures that recordings are in sync with each other and with the musicians’ performances.

Digital audio workstations (DAWs) and audio interfaces play a significant role in this process, offering different settings to adjust buffer sizes and sample rates.

This can help significantly reduce latency.

Additionally, your choice of audio driver, such as ASIO on Windows or Core Audio on Mac, can significantly affect latency.

NOTE

If you’re not sure what an audio driver is, it’s simply a type of software that enables the operating system and a computer program to communicate with audio hardware.

An audio driver basically acts as an intermediary…

Meaning, audio drivers translate your software’s audio output commands into a language that your audio hardware can understand.

In turn, it plays a key role in the efficiency of audio processing and the minimization of latency (which is the goal).

-

Causes of Audio Latency

Latency is shaped mainly by three things: the buffer size, the sample rate, and the quality of your audio interface (including its drivers). When these are out of balance, latency becomes a problem.

Buffer size determines how much audio data your music production computer processes at one time.

- Larger buffer sizes: handle more data at once but increase latency.

- Smaller buffer sizes: reduce latency but may put more strain on the computer’s CPU, potentially leading to audio dropouts or glitches.

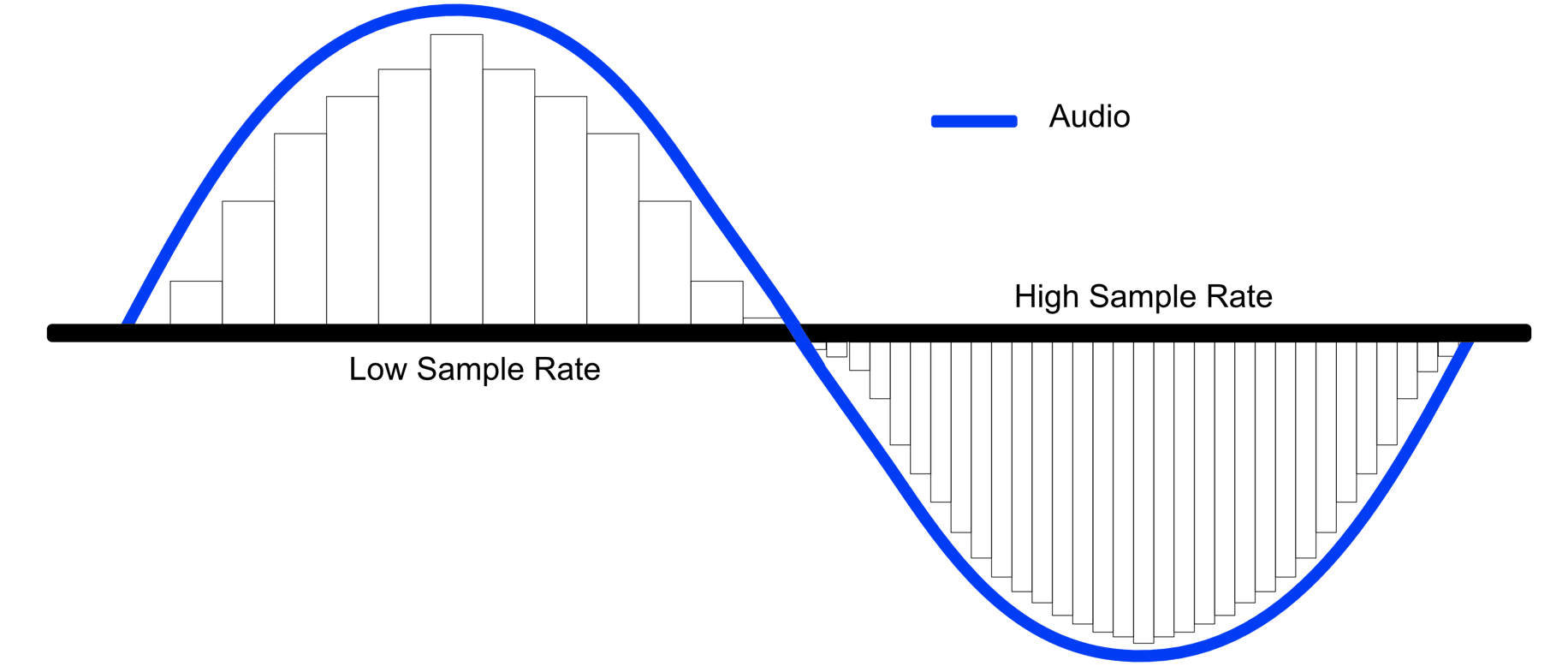

Another contributing factor is the sample rate, which is the number of samples of audio carried per second.

Higher sample rates can improve audio fidelity, but they also increase the amount of data your computer has to process every second. If that extra load forces you to use a larger buffer to avoid glitches, latency goes up.

The efficiency of the audio interface and its drivers also plays a critical role.

High-quality audio interfaces with optimized drivers can significantly reduce audio interface latency, ensuring smoother recording and playback.

The last thing you want is audio interface latency, believe me.

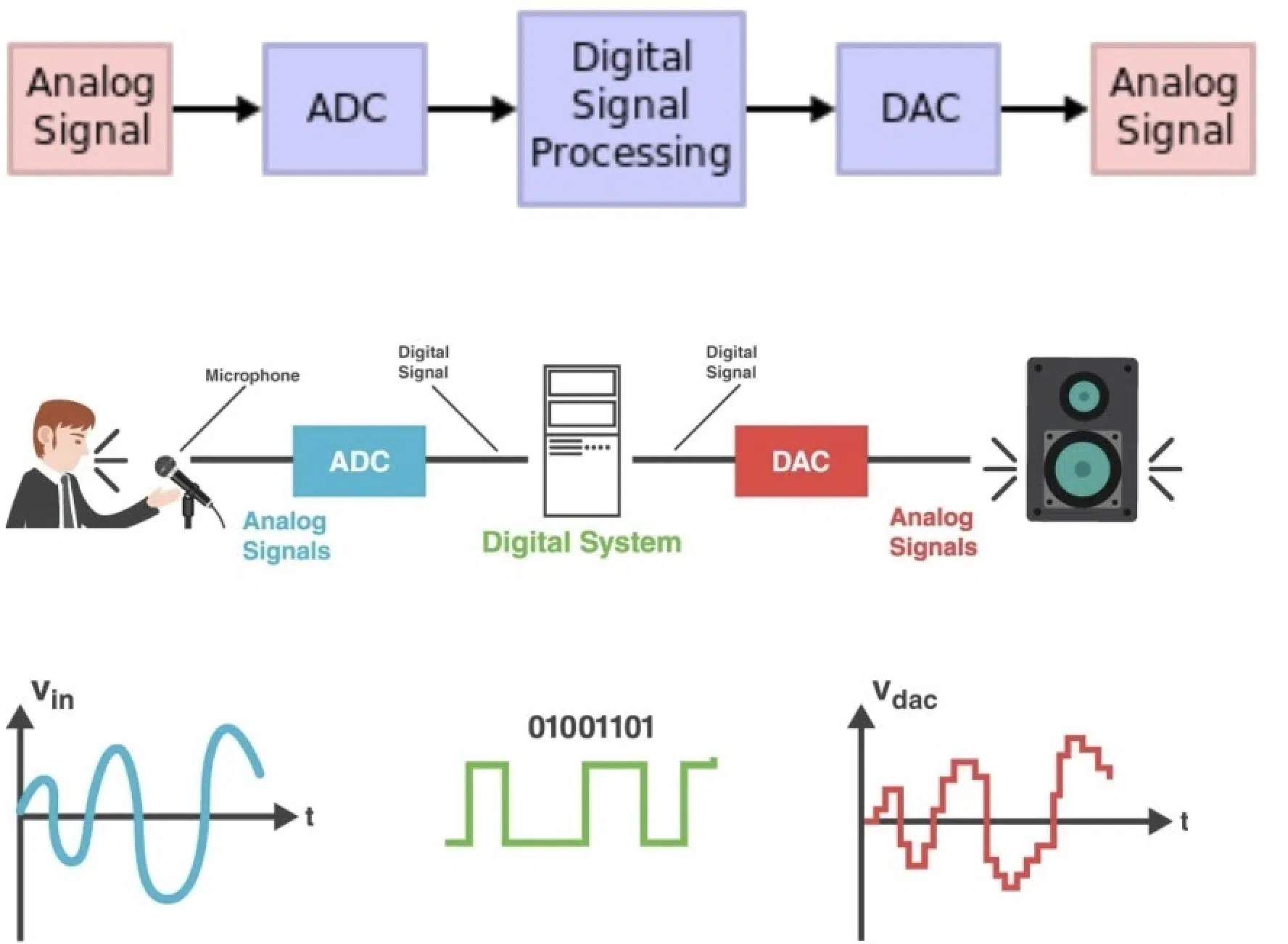

NOTE: Converting analog signals to digital (and back to analog for your headphones or speakers) introduces extra latency in the signal chain. Any signal you monitor in real time through your computer passes through both conversions, so this converter delay is always part of the total.
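To see how these delays stack up, here’s a rough sketch that estimates round-trip latency when you monitor through your DAW: analog-to-digital conversion, one input buffer, one output buffer, then digital-to-analog conversion. The 0.5 ms converter figures are hypothetical placeholders, not measurements of any real interface, and real drivers often add extra safety buffers that this simplified model ignores.

```python
def buffer_ms(buffer_samples: int, sample_rate_hz: int) -> float:
    """Duration of one buffer of audio, in milliseconds."""
    return buffer_samples / sample_rate_hz * 1000.0


def estimate_round_trip_ms(
    buffer_samples: int,
    sample_rate_hz: int,
    ad_latency_ms: float,
    da_latency_ms: float,
) -> float:
    """Rough round trip when monitoring through the DAW.

    Simplifying assumption: exactly one input buffer and one output buffer.
    Real drivers may add extra safety buffers that this sketch ignores.
    """
    return ad_latency_ms + 2 * buffer_ms(buffer_samples, sample_rate_hz) + da_latency_ms


# Hypothetical converter delays of 0.5 ms each, for illustration only.
total = estimate_round_trip_ms(128, 48000, ad_latency_ms=0.5, da_latency_ms=0.5)
print(f"estimated round trip: {total:.2f} ms")
```

Under these assumptions, a 128-sample buffer at 48kHz gives roughly 6.3 ms in total, and the two buffers account for about 5.3 ms of that. The latency figures your interface or DAW reports are the ones to trust for your own setup.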

-

Audio Latency in Recording (Recording Latency)

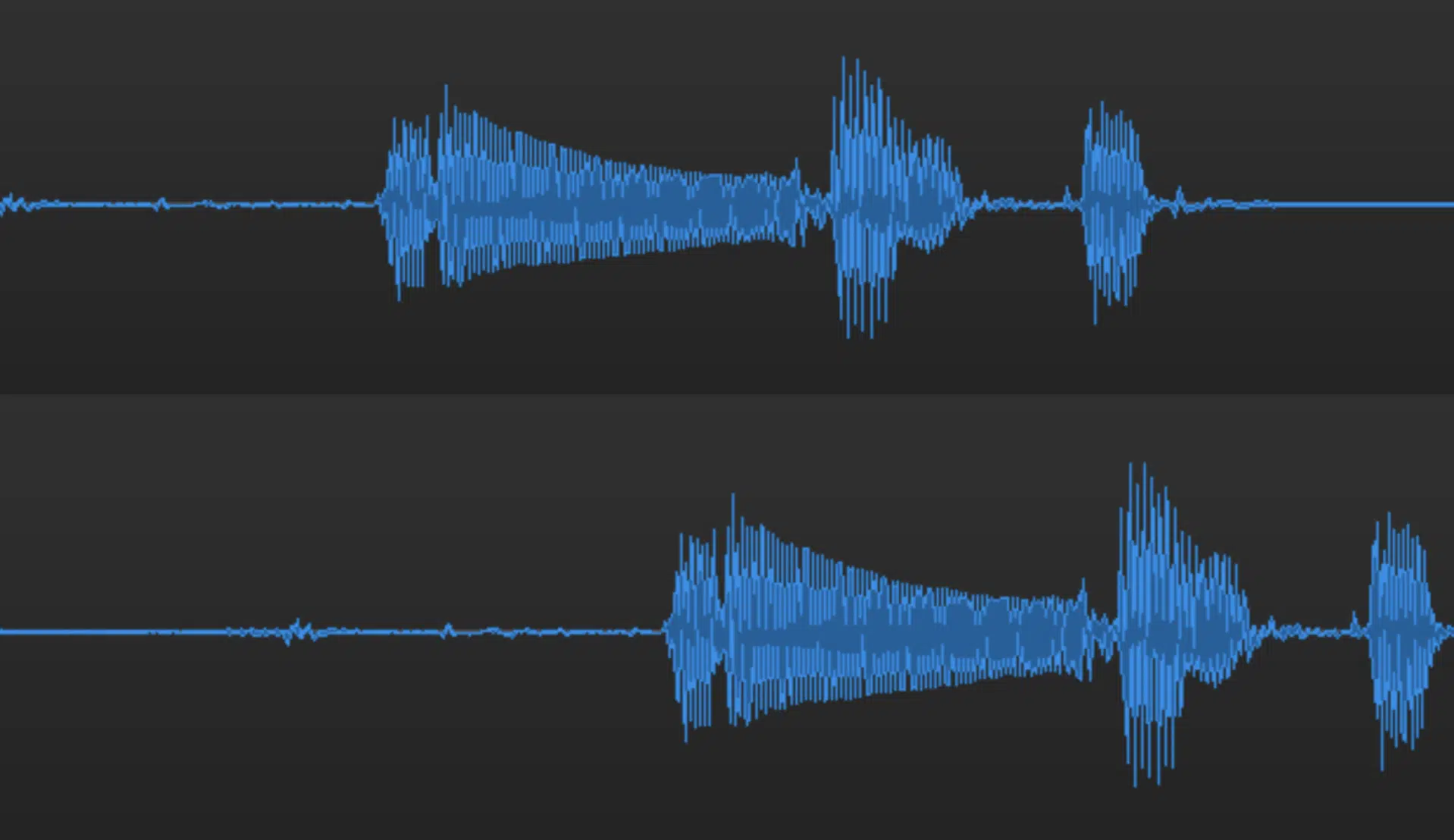

During the recording process, audio latency can cause noticeable delay between a musician’s performance and what they hear back in their headphones.

This can cause major problems when layering multiple tracks or when precise timing is mandatory (which it pretty much always is).

For example, a drummer recording to a click track might find it challenging to keep time if there’s noticeable latency.

The resulting recordings can feel out of sync, affecting the overall groove and tightness of your music.

To manage recording latency, you can:

- Lower the buffer size in your DAW

- Use an audio interface with low latency figures

Additionally, employing direct monitoring allows the producer or artist to hear their performance directly from the audio interface without the signal having to go through the DAW.

Meaning, it bypasses any potential delay caused by digital processing.

Audio latency can significantly affect the quality and efficiency of your music production, so make a habit of checking it.

-

Pro Tip: Latency and Live Performance

In live performance scenarios, latency needs to be kept to a minimum so the sound responds in real time to the performers’ actions.

High latency can disrupt the performers’ timing, especially when monitoring through headphones or in-ear monitors.

For example, a keyboardist who’s using virtual instruments might experience a delay between pressing a key and hearing the sound.

This can be super disorienting during something like a live performance.

Solutions for reducing latency during live performances include using dedicated hardware synthesizers or drum machines that don’t rely on computer processing and, of course, optimizing your audio interface settings for the lowest possible latency.

Additionally, some live performance software offers specialized low-latency modes designed to prioritize real-time audio processing.

Technical Aspects of Audio Latency

Understanding the technical side of audio latency is key to effectively managing and reducing it in your projects.

-

Buffer Size: Explained

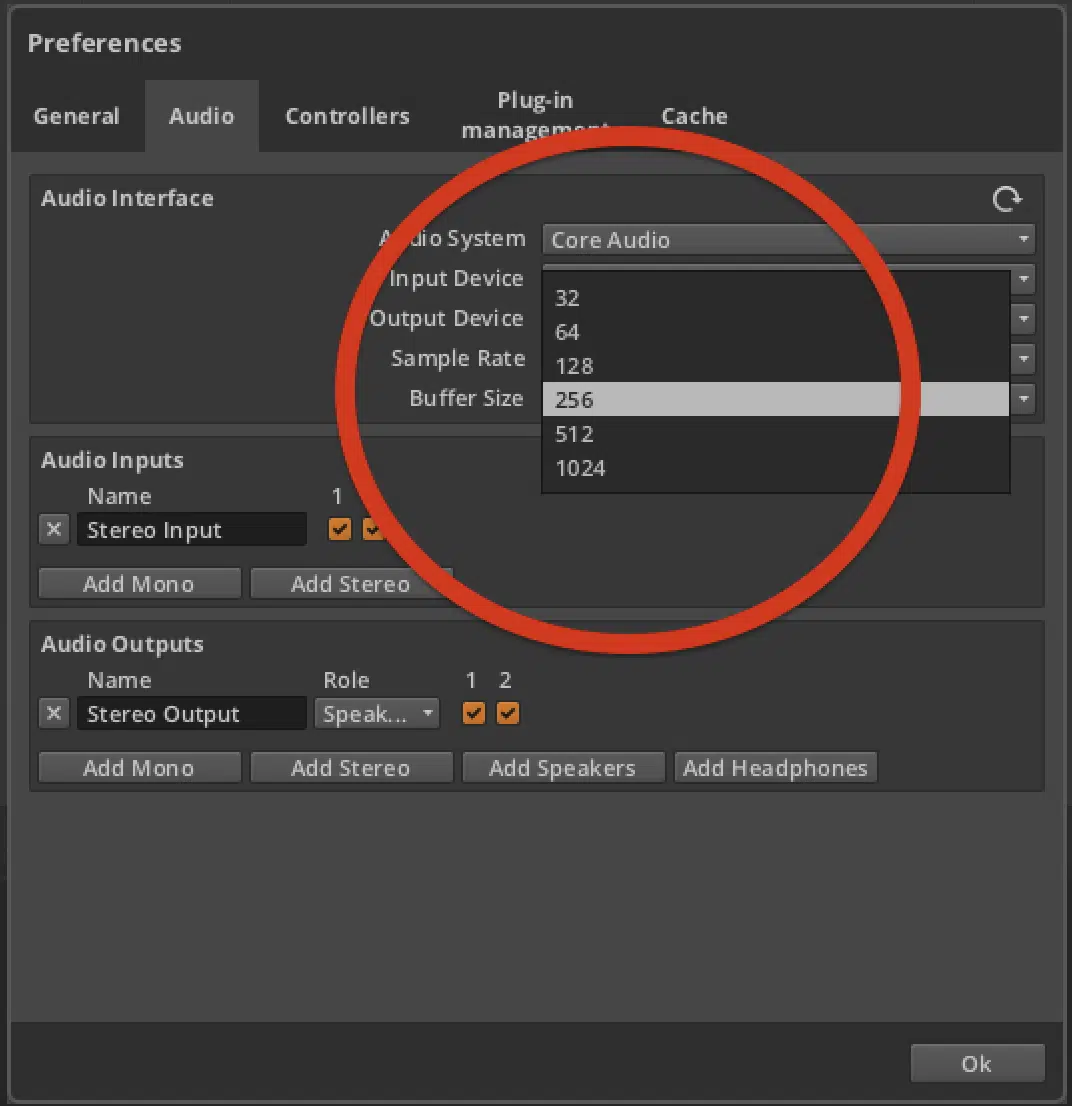

Buffer size is a critical setting in managing audio latency.

It determines the amount of audio data that the computer processes before sending it to the audio interface.

A smaller buffer size means that the audio is processed in smaller chunks, which leads to lower latency.

For example, setting the audio buffer size to 64 samples (about 1.5 ms per buffer at 44.1kHz) might offer near-instantaneous audio feedback, which is ideal for recording or playing a virtual instrument live.
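The relationship behind this is simple arithmetic: a buffer’s duration in milliseconds is its size in samples divided by the sample rate, times 1,000. Here’s a minimal sketch that prints the one-way delay of a single buffer for common buffer sizes; 44.1kHz is just an example rate.

```python
def buffer_latency_ms(buffer_samples: int, sample_rate_hz: float) -> float:
    """One-way delay contributed by a single buffer, in milliseconds."""
    if buffer_samples <= 0 or sample_rate_hz <= 0:
        raise ValueError("buffer size and sample rate must be positive")
    return buffer_samples / sample_rate_hz * 1000.0


# Common buffer sizes offered by DAWs and interface control panels.
for size in (32, 64, 128, 256, 512, 1024):
    print(f"{size:>5} samples -> {buffer_latency_ms(size, 44100):6.2f} ms")
```

At 64 samples that works out to about 1.45 ms, while 1,024 samples is roughly 23 ms, which is why large buffers feel sluggish when you play live. Keep in mind this is a single buffer; monitoring through your DAW involves at least an input and an output buffer.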

Keep in mind that smaller buffer sizes put a heavier load on your computer’s CPU.

If the audio buffer size is set too low without sufficient processing power, it can lead to:

- Audio dropouts

- Clicks

- Pops

This is because the computer struggles to process the audio quickly enough.
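Here’s one way to picture “processing the audio quickly enough.” The computer gets exactly one buffer’s worth of time to finish each buffer; if the work takes longer, the buffer isn’t ready when the interface asks for it, and you hear a click or dropout. The sketch below uses a toy cost model (a fixed per-buffer overhead plus work proportional to buffer size) with made-up numbers, so treat it as an illustration rather than a benchmark. Your DAW’s CPU or performance meter is the real-world equivalent.

```python
def buffer_deadline_ms(buffer_samples: int, sample_rate_hz: int) -> float:
    """Time available to process one buffer before the interface needs it."""
    return buffer_samples / sample_rate_hz * 1000.0


def processing_ms(buffer_samples: int, overhead_ms: float, cost_per_sample_ms: float) -> float:
    """Toy cost model: fixed per-buffer overhead plus work proportional to buffer size."""
    return overhead_ms + cost_per_sample_ms * buffer_samples


def will_drop_out(buffer_samples: int, sample_rate_hz: int,
                  overhead_ms: float, cost_per_sample_ms: float) -> bool:
    """True if processing a buffer takes longer than the buffer lasts."""
    work = processing_ms(buffer_samples, overhead_ms, cost_per_sample_ms)
    return work > buffer_deadline_ms(buffer_samples, sample_rate_hz)


# Hypothetical numbers for a busy session at 48 kHz.
for size in (32, 64, 128, 256):
    status = "dropouts" if will_drop_out(size, 48000, 0.8, 0.01) else "ok"
    print(f"{size:>4} samples: {status}")
```

With these made-up figures, 32 and 64 samples miss their deadlines while 128 and 256 keep up. The fixed overhead is why very small buffers tend to fail first: it has to be paid more often, and each buffer gives the CPU less time to pay it.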

Balancing audio buffer size and processing power is crucial for maintaining low latency without compromising audio quality.

The optimal buffer size depends on your specific needs and the capabilities of your system.

For recording and monitoring, a smaller buffer size is preferable.

On the other hand, for mixing and processing tracks with multiple plug-ins, you can use a larger buffer size to give the CPU more time to process the complex signal chain.

This is because, in that scenario, real-time monitoring becomes less critical.

NOTE: When you’re optimizing settings in your DAW, the buffer size of your playback engine is one of the settings with the biggest impact on performance.

This is because the playback engine itself is central to how audio is processed and heard in real time.

Just remember: a smaller buffer size shortens the delay between when a signal enters the system and when it’s heard, because each chunk of audio is processed in a shorter period of time.

-

The Role of Your Audio Interface

An audio interface is a pivotal element in the signal chain of digital audio production.

It acts as the bridge between an analog signal (from microphones or instruments) and the digital world of your computer and DAW.

A high-quality audio interface can significantly reduce audio latency, ensuring that the input signal is converted to digital audio with minimal delay.

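NOTE: Interfaces that connect over Thunderbolt or newer USB standards (often through a USB-C port) can offer lower latency figures than older USB 2.0 models, partly because of their faster data transfer rates. Well-optimized drivers matter just as much as the connection type.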

Additionally, audio interface latency isn’t just about the hardware; it’s also about the software that drives it.

Audio drivers and frameworks, such as ASIO (Audio Stream Input/Output) on Windows or Core Audio on Mac, are designed to handle audio data efficiently, minimizing delay.

Making sure your audio interface drivers are up to date is key for achieving the lowest latency possible.

This, paired up with the correct audio interface settings, can make a substantial difference in your audio production workflow and quality.

-

Sample Rate and Its Effects on Latency

Sample rate plays a key role in determining the latency of your audio system.

A higher sample rate means that more audio samples are processed per second, which can result in higher sound quality.

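However, this also increases the workload on your audio system. For a fixed buffer size, a higher sample rate actually makes each buffer shorter in time, but the extra CPU load may force you to raise the buffer size to avoid dropouts, which increases latency in practice.

For instance, recording at a sample rate of 192kHz, while offering high audio fidelity, means processing four times as many samples per second as 48kHz. If your system can’t keep up at small buffer sizes, latency can end up higher than when recording at 44.1kHz or 48kHz.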
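The arithmetic is worth seeing, because two effects pull in opposite directions. A buffer’s duration is its size in samples divided by the sample rate, so at the same buffer size a higher sample rate gives a shorter delay. The latency cost of high sample rates comes from the extra CPU work, which may push you to a larger buffer. The sketch below compares both situations; the 1,024-sample figure is a hypothetical example of a buffer you might be forced into, not a rule.

```python
def buffer_latency_ms(buffer_samples: int, sample_rate_hz: int) -> float:
    """One-way delay of a single buffer, in milliseconds."""
    return buffer_samples / sample_rate_hz * 1000.0


# Same buffer size, different sample rates: each buffer gets shorter in time.
for rate in (44100, 48000, 96000, 192000):
    print(f"128 samples @ {rate} Hz -> {buffer_latency_ms(128, rate):.2f} ms")

# Hypothetical: if the extra CPU load at 192 kHz forces you up to 1024 samples,
# latency ends up higher than a 128-sample buffer at 48 kHz.
print(f"1024 samples @ 192000 Hz -> {buffer_latency_ms(1024, 192000):.2f} ms")
print(f" 128 samples @  48000 Hz -> {buffer_latency_ms(128, 48000):.2f} ms")
```

Whether you’d actually need a larger buffer at 192kHz depends entirely on your computer and session, which is why the practical advice is to watch your CPU meter rather than assume.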

Choosing the right sample rate is a balance between achieving the desired sound quality and managing latency.

NOTE: For most applications in audio production, a sample rate of 44.1kHz or 48kHz is sufficient and helps keep latency low.

When high-definition audio is required, and your audio system is capable, opting for higher sample rates like 96kHz or even 192kHz is possible.

However, always be mindful of the impact on latency.

Tips, Tricks, and Techniques to Reduce Latency

Effective strategies and adjustments can significantly mitigate the effects of digital audio latency in your music production process. So, let’s break down some tips, tricks, and techniques to help you reduce latency and get that ideal sound.

-

Optimizing Audio Interface Settings

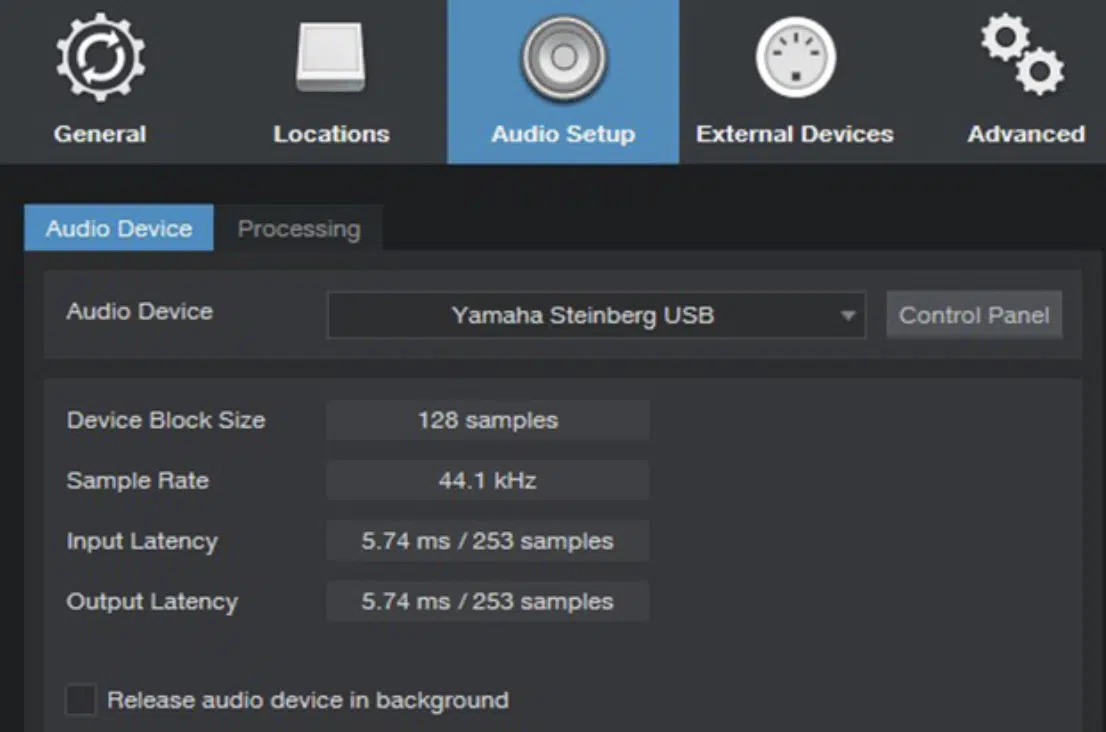

One of the first steps to reduce latency is to optimize your audio interface settings.

This involves adjusting the buffer size and sample rate in your DAW to find the perfect balance for your current project.

NOTE: For audio recording and live performance, where low latency is crucial, setting a lower buffer size can help.

However, it’s important to monitor the performance of your audio system to avoid overloading your CPU, which can lead to audio dropouts.

Another aspect to consider is the audio interface’s own software control panel, where you can:

- Adjust the device block size.

- Monitor the latency time directly.

Some interfaces also offer zero-latency monitoring features, allowing you to hear the input signal directly before it goes through your DAW.

This effectively eliminates monitoring latency for the performer, while the recording itself still passes through your DAW as usual.

-

Leveraging Digital Signal Processing (+ Zero Latency Monitoring)

Digital signal processing (DSP) can be utilized to reduce the perceived effects of latency.

Some high-end audio interfaces come equipped with built-in DSP.

It enables effects like reverb or compression to be applied to the input signal without adding noticeable latency.

This means that artists can monitor their performance with effects in real time, enhancing the recording experience without the delay of routing everything through the computer.

Zero-latency monitoring is a feature found in many modern audio interfaces that bypasses the computer entirely, routing the input signal directly to the headphones or speakers.

This allows musicians to hear themselves without the delay caused by the computer’s buffering and processing.

It’s crucial for maintaining timing and performance quality during recording sessions.

-

Managing System Resources

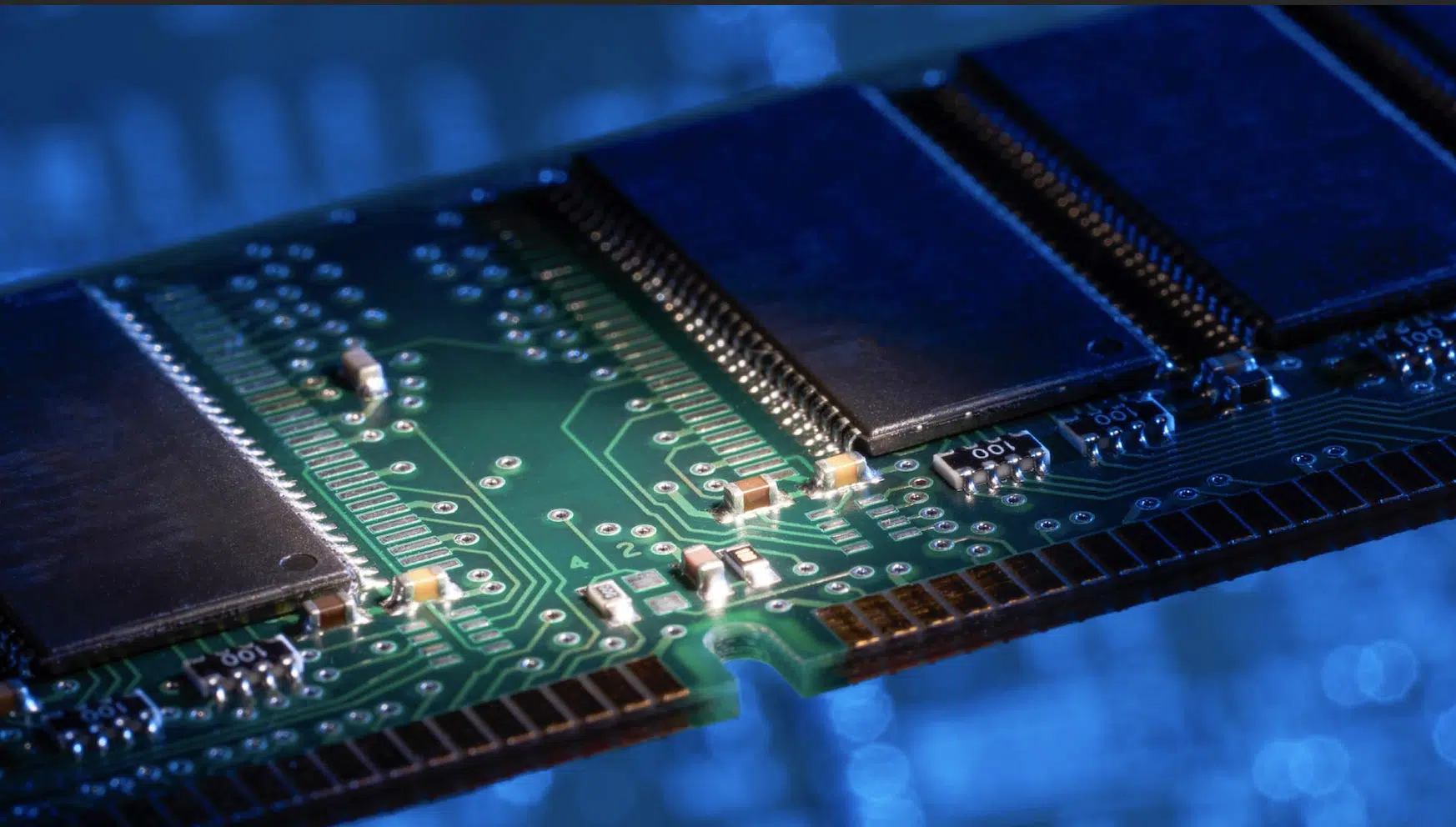

The overall performance of your music production system also affects latency.

Ensuring that your computer has sufficient processing power and RAM helps it handle low buffer sizes (essential for reducing latency) and high sample rates.

Closing other programs running in the background that are not necessary for your audio recording session can free up more processing power for your DAW and audio plugins.

Upgrading your computer’s hardware (such as installing a faster CPU or more RAM) can also contribute to lowering latency.

This is especially important when you’re working on demanding projects with multiple instances of virtual instruments or extensive digital signal processing.

It ensures your audio system is optimized for audio production.

-

Using Direct Monitoring

Direct monitoring allows you to hear the incoming signal from your audio interface directly, without it being processed by the DAW, effectively removing any noticeable delay.

This is particularly useful during recording sessions.

It helps performers to hear themselves in real-time 一 ensuring a more natural and responsive playing or singing experience.

Many audio interfaces offer direct monitoring controls, either:

- On the hardware itself.

- Through their software control panels.

Adjusting these different settings to enable direct monitoring can significantly enhance the audio recording experience.

For example, when a guitarist is laying down a track, direct monitoring means they hear their guitar instantly, without any disorienting delay.

It helps you set up for a performance that feels more connected and immediate.

Software Solutions and Plug-ins

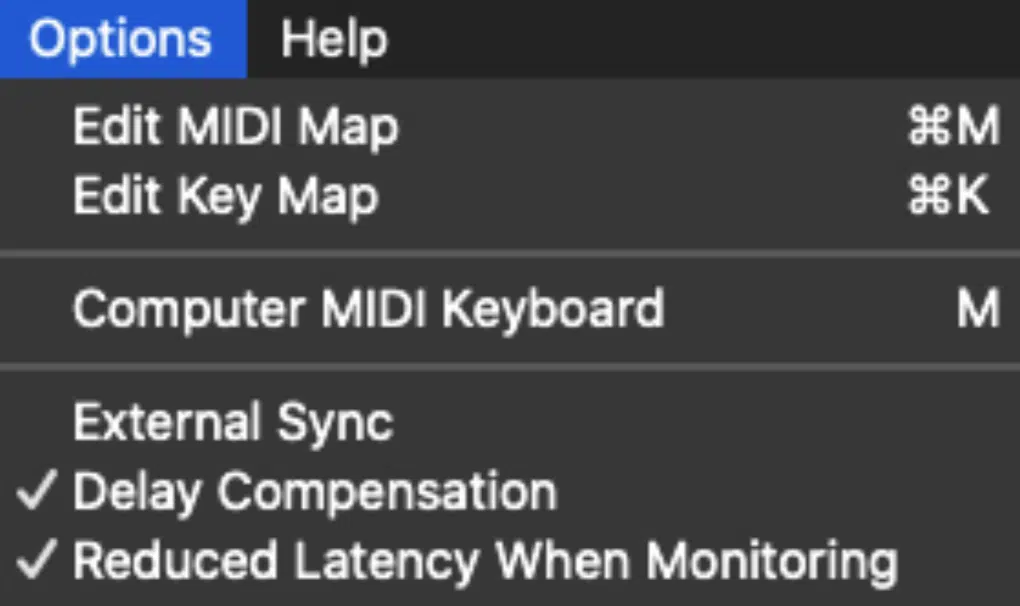

Ableton Reduced Latency When Monitoring

In the world of digital audio production, the choice of music production software and plug-ins can significantly impact latency.

Leveraging the right tools not only enhances the creative process but also ensures that latency is kept to a minimum.

Digital Audio Workstations (DAWs) like Ableton Live, Pro Tools, and Logic Pro offer built-in optimizations specifically designed to manage latency.

For instance, Ableton Live features a ‘Reduced Latency When Monitoring’ option, which keeps latency as low as possible on tracks you’re monitoring so you can record and play along with minimal delay.

Similarly, Pro Tools offers a ‘Low Latency Monitoring’ mode that bypasses plug-ins that add significant latency.

This ensures that the audio recording process is as immediate as possible.
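Plug-ins that use lookahead (such as limiters or some linear-phase EQs) report a fixed delay in samples to the DAW, and those delays add up along a track’s chain. The sketch below totals a chain’s reported latency and shows what’s left if you bypass the high-latency plug-ins while monitoring, which is roughly the idea behind the modes described above. The plug-in names, latency figures, and threshold are hypothetical, and this is a simplified model, not how Pro Tools, Ableton Live, or any other DAW actually implements it.

```python
from dataclasses import dataclass


@dataclass
class Plugin:
    name: str
    latency_samples: int  # delay the plug-in reports to the host (hypothetical values below)


def chain_latency_ms(chain: list[Plugin], sample_rate_hz: int) -> float:
    """Total delay added by plug-ins in series, in milliseconds."""
    return sum(p.latency_samples for p in chain) / sample_rate_hz * 1000.0


def monitoring_chain(chain: list[Plugin], max_latency_samples: int) -> list[Plugin]:
    """Keep only plug-ins whose own latency is at or below the threshold."""
    return [p for p in chain if p.latency_samples <= max_latency_samples]


vocal_chain = [
    Plugin("EQ", 0),
    Plugin("Compressor", 64),
    Plugin("Linear-phase EQ", 2048),
    Plugin("Lookahead limiter", 1024),
]

print(f"full chain: {chain_latency_ms(vocal_chain, 48000):.1f} ms")
live = monitoring_chain(vocal_chain, max_latency_samples=128)
print(f"monitoring chain: {chain_latency_ms(live, 48000):.1f} ms")
```

In this made-up example, bypassing the two lookahead plug-ins drops the monitored delay from about 65 ms to about 1.3 ms, the kind of difference you’d feel immediately while tracking.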

Utilizing these different settings effectively can help maintain a smooth workflow, even in sessions that demand extensive audio processing.

Plug-ins play a vital role in the music production process, from shaping the sound to adding effects.

However, not all plug-ins are created equal when it comes to latency, so opting for plug-ins designed with efficient processing in mind is crucial.

For example, Universal Audio’s UAD plug-ins, renowned for their high quality, run on dedicated DSP hardware rather than your computer’s CPU, which frees up processing power so you can keep buffer sizes low.

Similarly, Native Instruments offers a range of virtual instruments and effects known for their efficiency and low latency, perfect for real-time performance and audio recording.

Pro Tip

Another strategy involves the use of ‘freeze’ and ‘bounce’ functions within your DAW.

These functions render tracks, plug-in effects included, into audio files, so your computer no longer has to process those plug-ins in real time. The CPU headroom this frees up lets you run a smaller buffer and lower the overall latency of the project.

This is particularly useful in dense projects with numerous tracks and effects.

Bonus: Common Digital Audio Latency Myths Debunked

A common myth in the audio world is that zero latency is achievable in all situations.

While zero-latency monitoring can be achieved through direct hardware monitoring, it’s important to understand that some degree of latency is always present when processing digital audio.

The goal is to reduce latency to levels that are imperceptible or at least manageable, rather than to eliminate it entirely.

So, make a habit of measuring your latency and adjusting your settings until it feels right.
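A common way to measure round-trip latency is a loopback test: play a known test signal out of your interface, cable the output back into an input, record it, and find the offset where the recording best lines up with the original. The sketch below simulates that with synthetic data (a random test signal and a made-up 300-sample delay) and finds the offset with plain cross-correlation. With real hardware, you’d replace the simulated `recording` with the samples you actually captured.

```python
import random


def find_delay(reference: list[float], recording: list[float], max_lag: int) -> int:
    """Return the lag (in samples) where `recording` best matches `reference`."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(max_lag + 1):
        # Correlate the reference against the recording shifted by `lag` samples.
        score = sum(
            reference[i] * recording[i + lag]
            for i in range(len(reference))
            if i + lag < len(recording)
        )
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


random.seed(1)
sample_rate = 48000
true_delay = 300  # simulated round trip, in samples (hypothetical)

# Test signal: random +/-1 values, which correlate strongly only with themselves.
test_signal = [random.choice((-1.0, 1.0)) for _ in range(1000)]
# Simulated recording: silence for `true_delay` samples, then the signal plus a little noise.
recording = [0.0] * true_delay + [s + random.gauss(0, 0.1) for s in test_signal]

lag = find_delay(test_signal, recording, max_lag=600)
print(f"measured: {lag} samples = {lag / sample_rate * 1000:.2f} ms")
```

The sketch recovers the simulated 300-sample offset, which is 6.25 ms at 48kHz. A random test signal works well here because it only lines up strongly with itself at the true offset, so the correct lag stands out clearly even with some added noise.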

Another myth is that higher sample rates always result in better sound quality and, therefore, are worth the increased latency.

While it’s true that higher sample rates can improve audio fidelity, the benefits must be weighed against the increased demand on your system and the potential for increased latency.

In many audio production scenarios, a sample rate of 44.1kHz or 48kHz is perfectly adequate and helps keep latency at a manageable level.

What is Audio Latency: Final Thoughts

Latency is not something that should ever hinder your creative process or the quality of your songs.

Instead, it’s a technical aspect that, when understood and managed, will completely enhance the quality of your tracks and overall performance.

So, using the techniques we discussed today, you’re now able to minimize (or, completely destroy) disruptive delays and navigate your DAW like a professional.

Since we talked about latency settings in Ableton and Logic, here’s an invaluable bonus for all my innovative and inspired music producers: these legendary Free Project Files.

The pack contains 3 free project files, each available in Ableton, FL Studio, and Logic Pro.

You don’t have to worry about starting from scratch with these project files; you’ll get to see exactly how professional-quality tracks are made.

This allows you to know precisely how to improve your own music.

And yes, all the files are cleared for personal and commercial use, so you can use them in your music however you please, giving you a real head start in achieving the sound you’re after.

With the knowledge you’ve gained about audio latency and the addition of these legendary Free Project Files, you’re about to take your music production skills to a whole new level.

Remember, mastering latency is a key step in refining your sound and ensuring your tracks stand out, so don’t let a tiny detail make your tracks sound unprofessional.

Until next time…
